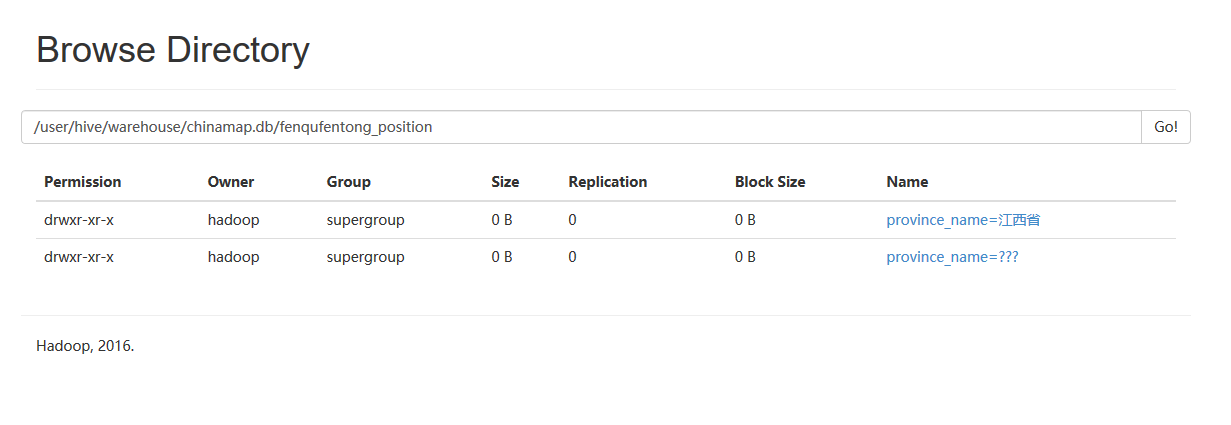

I am trying to dynamically partition the data by province, bucket it, and write the final result to HDFS. The job fails, and only one partition is generated.

Create table statement:

create table position_fenqufentong (id int, city_id bigint, city_name string, county_id bigint, county_name string, town_id bigint, town_name string, village_id bigint, village_name string) partitioned by (province_name string) clustered by (city_name) sorted by (city_id) into 3 buckets row format delimited fields terminated by '\t' collection items terminated by '\t' lines terminated by '\n' stored as textfile location '/user/hive/warehouse/chinamap.db/fenqufentong_position';

Load data:

from position_all insert into table position_fenqufentong partition (province_name) select id, city_id, city_name, county_id, county_name, town_id, town_name, village_id, village_name, province_name;
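The post does not show the session settings used before this insert. As a minimal sketch, assuming nothing was configured beforehand, a fully dynamic partition insert into a bucketed table usually needs something like the following. The two `hive.enforce.*` properties apply to Hive 1.x only and are an assumption about the version in use.

```sql
-- Assumed session settings; none of these appear in the original post.
-- Allow dynamic partitions at all.
set hive.exec.dynamic.partition=true;
-- nonstrict is required when every partition column is dynamic (no static partition given).
set hive.exec.dynamic.partition.mode=nonstrict;
-- Hive 1.x only: make the insert honor the table's bucketing and sorting.
-- From Hive 2.0 these are always enforced and the properties were removed.
set hive.enforce.bucketing=true;
set hive.enforce.sorting=true;
```

With bucketing enforced, Hive sets the reducer count to the bucket count, which matches the 3 reducers reported in the log.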

Results:

Query ID = hadoop_20181205104902_9ed34916-2369-46fa-9186-41a3d9f83911

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 3

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1543976396107_0001, Tracking URL = http://hadoop3:8088/proxy/application_1543976396107_0001/

Kill Command = /opt/modules/hadoop-2.6.5/bin/hadoop job -kill job_1543976396107_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 3

2018-12-05 10:49:43,280 Stage-1 map = 0%, reduce = 0%

2018-12-05 10:50:17,859 Stage-1 map = 67%, reduce = 0%, Cumulative CPU 9.04 sec

2018-12-05 10:50:18,908 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 9.09 sec

2018-12-05 10:51:19,553 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 9.09 sec

2018-12-05 10:51:56,830 Stage-1 map = 100%, reduce = 22%, Cumulative CPU 10.1 sec

2018-12-05 10:52:04,219 Stage-1 map = 100%, reduce = 44%, Cumulative CPU 11.81 sec

2018-12-05 10:52:12,488 Stage-1 map = 100%, reduce = 56%, Cumulative CPU 19.93 sec

2018-12-05 10:52:25,281 Stage-1 map = 100%, reduce = 67%, Cumulative CPU 28.27 sec

2018-12-05 10:52:39,520 Stage-1 map = 100%, reduce = 78%, Cumulative CPU 29.07 sec

2018-12-05 10:52:41,611 Stage-1 map = 100%, reduce = 89%, Cumulative CPU 29.78 sec

2018-12-05 10:53:12,294 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 37.19 sec

MapReduce Total cumulative CPU time: 37 seconds 190 msec

Ended Job = job_1543976396107_0001

Loading data to table chinamap.position_fenqufentong partition (province_name=null)

Failed with exception org.apache.hadoop.hive.ql.metadata.HiveException: Unable to alter partition. alter is not possible

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.MoveTask

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 3 Cumulative CPU: 38.31 sec HDFS Read: 82878437 HDFS Write: 72514675 SUCCESS

Total MapReduce CPU Time Spent: 38 seconds 310 msec
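The log reports the load target as partition (province_name=null). Hive commonly prints a dynamic partition column this way in the "Loading data" line, so that alone may not prove the source holds null values. A minimal sketch for checking what partition values the insert would actually produce, using only the table and column names from the post (the `<NULL>` and `<EMPTY>` labels are made up for display):

```sql
-- Count source rows per would-be partition value,
-- making NULL and blank province names visible.
select province_value, count(*) as row_count
from (
  select case
           when province_name is null then '<NULL>'
           when trim(province_name) = '' then '<EMPTY>'
           else province_name
         end as province_value
  from position_all
) t
group by province_value
order by row_count desc;

-- List the partitions that were actually registered for the target table.
show partitions position_fenqufentong;
```

If the first query shows only one distinct value, a single partition is the expected outcome rather than a symptom of the failure.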

CodePudding user response:

You let the partition field be null? That is bold. Wrapping it as IFNULL(partition_column, 'unknown') should take care of it.

CodePudding user response:

Dynamic partitions are generated from the specified partition field, province_name. None of the province_name values in the source table position_all are empty, so I don't know where the null comes from.

CodePudding user response:

How do I solve "Unable to alter partition. alter is not possible"? I am writing to the partitioned table with Spark. When I run the same data twice, the second run fails with this error.
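The null guard suggested in the first reply can be sketched against the original insert as follows. This version uses COALESCE, a standard Hive function, in place of the IFNULL named in the reply, which may not exist in every Hive version. The 'unknown' label comes from that reply; whether null values are the real cause of the failure is not confirmed anywhere in this thread.

```sql
-- Sketch only: route rows with a NULL province_name into an 'unknown' partition
-- instead of passing NULL through as the dynamic partition value.
from position_all
insert into table position_fenqufentong partition (province_name)
select id, city_id, city_name, county_id, county_name,
       town_id, town_name, village_id, village_name,
       coalesce(province_name, 'unknown') as province_name;
```

COALESCE only catches NULL. If blank strings are also possible, a CASE expression that tests `trim(province_name) = ''` would be needed as well.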