The code is as follows:

import datetime
import requests
import hashlib
import json
from bs4 import BeautifulSoup  # parse web page (HTML) content
import re  # regular expressions for text matching (extracting data from the page)
import xlwt  # Excel operations (writing the data to an Excel file)

# define the list of routes (origin-destination airport codes)
one_way = ['SZX-CGO', 'SZX-CGQ', 'SZX-CKG', 'SZX-CTU', 'SZX-CZX', 'SZX-DLC', 'SZX-coated',
           'SZX-HET', 'SZX-HFE', 'SZX-HGH', 'SZX-HRB', 'SZX-INC', 'SZX-KHN', 'SZX-KMG', 'SZX-LHW',
           'SZX-LJG', 'SZX-LYI', 'SZX-MIG', 'SZX-NGB', 'SZX-NKG', 'SZX-NNG', 'SZX-NTG', 'SZX-PEK',
           'SZX-PKX', 'SZX-PVG', 'SZX-SHA', 'SZX-SHE', 'SZX-SJW', 'SZX-SYX', 'SZX-TAO', 'SZX-TCZ',
           'SZX-TNA', 'SZX-passes on', 'SZX-TYN', 'SZX-URC', 'SZX-WNZ', 'SZX-WUH', 'SZX-WUX', 'SZX-XIY',
           'SZX-XNN', 'SZX-YIH', 'SZX-YNT', 'SZX-ZHA']

# build the list of departure dates: tomorrow through 13 days from now
datelist = []
for i in range(1, 14):
    datelist.append((datetime.datetime.now() + datetime.timedelta(days=i)).strftime('%Y-%m-%d'))

# main program
def main():
    baseurl = 'https://flights.ctrip.com/international/search/'  # base URL
    # fetch the page parameters and the transactionId
    getData(baseurl)

# get the page's transactionId, sign, and search parameters
def getData(baseurl):
    # for i in range(0, len(one_way)):
    for i in range(0, 1):
        # for j in range(0, len(datelist)):
        for j in range(0, 1):
            url = baseurl + 'oneway-' + str(one_way[i]) + '?depdate=' + str(datelist[j]) + '&cabin=Y_S_C_F'  # build the full search-page URL
            response = requests.get(url, headers={'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.114 Safari/537.36'})  # fetch the search page
            # extract the search parameters (GlobalSearchCriteria) embedded in the page
            data = re.findall(r'GlobalSearchCriteria\s*=\s*(.+);', response.text)[0].encode('utf-8')
            # read the transactionId from those parameters
            transactionId = json.loads(data).get("transactionId")
            # sign = MD5 of transactionId + departure code + arrival code + date
            sign_value = transactionId + one_way[i][0:3] + one_way[i][-3:] + datelist[j]
            signID = hashlib.md5()
            signID.update(sign_value.encode('utf-8'))
            askurl(transactionId, data, signID)

def askurl(transactionId, data, signID):
    headers = {
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.114 Safari/537.36',
        'content-type': 'application/json;charset=utf-8',
        'transactionid': transactionId,
        'sign': signID.hexdigest()
    }  # request headers that mimic a browser
    response = requests.post(
        url="https://flights.ctrip.com/international/search/api/search/batchSearch",
        headers=headers,
        data=data
    )
    response.encoding = "utf-8"

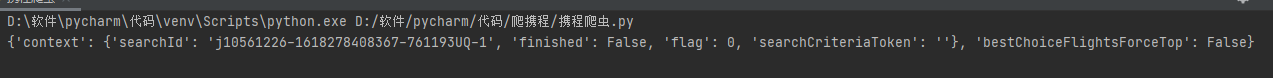

    print(response.json().get("data"))

# program entry point
if __name__ == "__main__":
    main()
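The script interleaves network calls with the logic that builds each request, which makes the URL, parameter extraction, and sign computation hard to check on their own. The sketch below pulls those offline steps into small pure functions so they can be tested without contacting Ctrip. The function names (`build_dates`, `build_search_url`, `extract_criteria`, `compute_sign`, `build_batch_request`) are hypothetical. The URL pattern, the `GlobalSearchCriteria` page variable, the `transactionId` key, and the MD5 sign recipe are taken from the script above and reflect the site as the author found it; Ctrip may have changed any of them since.

```python
import datetime
import hashlib
import json
import re

BASE_URL = 'https://flights.ctrip.com/international/search/'  # from the script above


def build_dates(start, days=13):
    """Dates start+1 .. start+days as YYYY-MM-DD (mirrors `datelist`)."""
    return [(start + datetime.timedelta(days=d)).strftime('%Y-%m-%d')
            for d in range(1, days + 1)]


def build_search_url(route, date, base=BASE_URL):
    """Search-page URL in the same shape the script builds (assumed format)."""
    return base + 'oneway-' + route + '?depdate=' + date + '&cabin=Y_S_C_F'


def extract_criteria(html):
    """Return the GlobalSearchCriteria JSON text embedded in the page, or None.

    Same greedy pattern as the script: `.` does not cross newlines, so the
    capture ends at the last ';' on the assignment's line.
    """
    match = re.search(r'GlobalSearchCriteria\s*=\s*(.+);', html)
    return match.group(1) if match else None


def compute_sign(transaction_id, route, date):
    """MD5 hex digest of transactionId + origin code + destination code + date."""
    origin, destination = route[:3], route[-3:]
    raw = transaction_id + origin + destination + date
    return hashlib.md5(raw.encode('utf-8')).hexdigest()


def build_batch_request(html, route, date):
    """Return (headers, body) for the batchSearch POST, or None if no criteria."""
    criteria = extract_criteria(html)
    if criteria is None:
        return None
    transaction_id = json.loads(criteria).get('transactionId')
    headers = {
        'content-type': 'application/json;charset=utf-8',
        'transactionid': transaction_id,
        'sign': compute_sign(transaction_id, route, date),
    }
    return headers, criteria.encode('utf-8')


if __name__ == '__main__':
    # Hypothetical page fragment standing in for the real search page.
    sample_html = ('<script>\n'
                   'window.GlobalSearchCriteria = {"transactionId": "abc123"};\n'
                   '</script>')
    headers, body = build_batch_request(sample_html, 'SZX-CGO', '2021-04-21')
    print(headers['sign'], body)
```

Returning `None` instead of indexing `[0]` avoids the `IndexError` the original raises when the page does not contain `GlobalSearchCriteria` (for example, when the site serves a verification page). A live request would then pass `headers` (plus a browser `user-agent`) and `body` to `requests.post` exactly as `askurl` does.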