Why does my crawler only scrape one item?

The code is as follows:

```python
import re

import scrapy
from bs4 import BeautifulSoup


class StockSpider(scrapy.Spider):
    name = 'stock'
    # Left commented out: if enabled, Scrapy's offsite filtering would
    # drop the follow-up requests to xueqiu.com
    # allowed_domains = ['quote.eastmoney.com']
    start_urls = ['http://quote.eastmoney.com/stock_list.html']

    def parse(self, response):
        # Follow every link on the list page that contains a code such as sh600000
        for href in response.css('a::attr(href)').extract():
            match = re.search(r"[s][hz]\d{6}", href)
            if match is None:  # link without a stock code
                continue
            stock = match.group(0).upper()
            url = 'https://xueqiu.com/S/' + stock
            yield scrapy.Request(url, callback=self.parse_stock)

    def parse_stock(self, response):
        infoDict = {}
        # Comparing the Response object itself to "" is never true; check its body instead
        if not response.text:
            return
        try:
            # The div's attributes appear to have been lost when the question was posted
            name = re.search(r'<div>(.*?)</div>', response.text).group(1)
            infoDict.update({'stock name': name})
            tableHtml = re.search(r'"tableHtml":"(.*?)",', response.text).group(1)
            soup = BeautifulSoup(tableHtml, "html.parser")
            table = soup.table
            for i in table.find_all("td"):
                line = i.text
                l = line.split("：")  # Note: this is the full-width Chinese colon (：), not the ASCII colon (:)!
                infoDict.update({l[0]: l[1]})
            yield infoDict
        except Exception as e:
            print("error:", response.url, e)
```
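The two parsing steps in the spider can be checked offline, without requesting eastmoney or xueqiu, by pulling them out into plain functions. This is a sketch under assumptions, not the spider itself: `extract_stock_urls` and `split_cells` are hypothetical helper names, and the sample links and cell strings are made up for illustration. It shows the `[s][hz]\d{6}` pattern turning list-page links into xueqiu URLs, and why a cell written with an ASCII `:` is not split when the code splits on the full-width `：`.

```python
import re

# Same pattern as in parse(): "s", then "h" or "z", then six digits
STOCK_RE = re.compile(r"[s][hz]\d{6}")


def extract_stock_urls(hrefs):
    """Hypothetical helper: map each href containing a stock code to a xueqiu.com URL."""
    urls = []
    for href in hrefs:
        match = STOCK_RE.search(href)
        if match is None:  # no stock code in this link, skip it like parse() does
            continue
        urls.append("https://xueqiu.com/S/" + match.group(0).upper())
    return urls


def split_cells(cell_texts):
    """Hypothetical helper: turn 'label：value' strings into a dict, splitting on U+FF1A."""
    info = {}
    for text in cell_texts:
        parts = text.split("：", 1)
        if len(parts) < 2:  # no full-width colon found (e.g. an ASCII ':' was used)
            continue
        info[parts[0].strip()] = parts[1].strip()
    return info


if __name__ == "__main__":
    # Made-up sample links in the style of the eastmoney list page
    hrefs = [
        "http://quote.eastmoney.com/sh600000.html",
        "http://quote.eastmoney.com/sz000001.html",
        "http://quote.eastmoney.com/center.html",
    ]
    print(extract_stock_urls(hrefs))
    # ['https://xueqiu.com/S/SH600000', 'https://xueqiu.com/S/SZ000001']

    # Made-up cells: the second one uses an ASCII colon, so it is skipped
    print(split_cells(["Open：10.00", "High:10.50"]))
    # {'Open': '10.00'}
```

In the spider itself, a cell without the full-width colon makes `l[1]` raise `IndexError`, which the `except` catches, so that stock yields no item at all; the guard in `split_cells` skips just the bad cell instead.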

Also, when I previously tried running other people's crawlers on my computer, PyCharm couldn't even connect to the local database no matter what I did. Could that be related to my using the dorm Wi-Fi?

Reply:

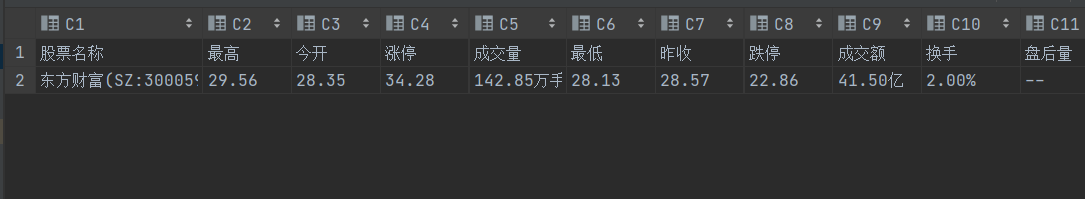

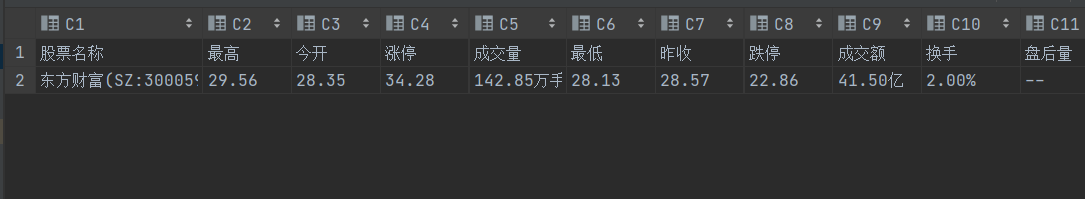

The result is shown above.