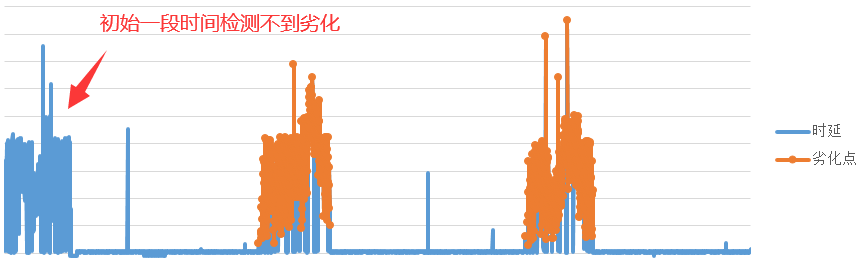

Scenario 1: At the very start of the series the data is already degraded, and it later returns to normal. In this scenario the initial degradation goes undetected. In the chart, the leftmost stretch of the timeline is the start of the series, and during that period the algorithm does not flag anything as abnormal. Later on, the algorithm does flag the second and third degradations as abnormal.
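One plausible explanation is that the detector can only judge a point against history it has already seen. The sketch below assumes a simple one-sided rolling z-score detector (not necessarily the algorithm in the question). It also assumes that "degradation" means the metric goes *up*, as with latency or error rate, and the function name and parameters are hypothetical. During the warm-up window it has no history and flags nothing, and the degraded start then becomes part of its "history". Once the window has filled with normal data, a later degradation stands out clearly.

```python
from statistics import mean, stdev

def rolling_zscore_flags(values, window=5, threshold=3.0, min_sd=1e-9):
    """Hypothetical one-sided rolling z-score detector (illustration only).

    Assumes degradation = values increasing. A point is flagged when it is
    more than `threshold` standard deviations above the mean of the
    previous `window` points.
    """
    flags = []
    for i, x in enumerate(values):
        if i < window:
            # Cold start: no history yet, so nothing can be judged abnormal.
            flags.append(False)
            continue
        hist = values[i - window:i]
        mu = mean(hist)
        sd = max(stdev(hist), min_sd)  # guard against a perfectly flat window
        flags.append((x - mu) / sd > threshold)
    return flags

# Made-up series: degraded start, then normal, then a second degradation.
series = [9.0, 9.5, 10.0, 9.8, 9.7,                     # initial degradation
          1.0, 1.2, 0.9, 1.1, 1.0, 1.05, 0.95, 1.1,     # normal
          9.0]                                          # later degradation
flags = rolling_zscore_flags(series)
print([i for i, f in enumerate(flags) if f])
```

With this data only the last point is flagged. The degraded start is never reported, because at that time the detector had no "normal" reference to compare it against.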

Scenario 2: The data keeps deteriorating from the start of the series, and the algorithm never flags it as abnormal, because it has never seen what normal behavior looks like. I would like to encode my domain experience as a formula to make this judgment, but I have not figured out a concrete way to do it.
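One way to "solidify experience as a formula" is a rule that does not learn normal behavior from the data at all. Instead, a domain expert supplies the expected normal level up front. The sketch below is a hypothetical example under these assumptions: `expected_normal`, `tolerance` and `min_run` are illustrative names, and degradation is again assumed to mean the value rising. A point counts as degraded when it exceeds `expected_normal * (1 + tolerance)` for at least `min_run` consecutive points, so a single noisy spike is ignored.

```python
def rule_based_flags(values, expected_normal, tolerance=0.2, min_run=3):
    """Hypothetical expert rule: flag sustained excursions above a fixed limit.

    The limit comes from domain knowledge, not from the data, so the rule
    works from the very first point (no warm-up period).
    """
    limit = expected_normal * (1 + tolerance)
    flags = [False] * len(values)
    run_start = None
    for i, x in enumerate(values):
        if x > limit:
            if run_start is None:
                run_start = i  # a new excursion begins
            run_len = i - run_start + 1
            if run_len == min_run:
                # Excursion just became long enough: flag the whole run so far.
                for j in range(run_start, i + 1):
                    flags[j] = True
            elif run_len > min_run:
                flags[i] = True
        else:
            run_start = None  # back within tolerance: reset the run
    return flags

# Made-up series that is already degrading from t = 0.
print(rule_based_flags([2.0, 2.1, 2.3, 2.5, 2.6], expected_normal=1.0))
```

Such a rule can be run alongside the learned detector. The fixed rule covers the cold-start period, and the learned model takes over once it has seen enough normal data.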