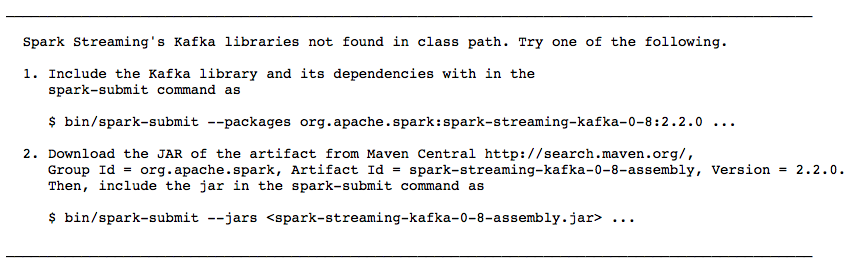

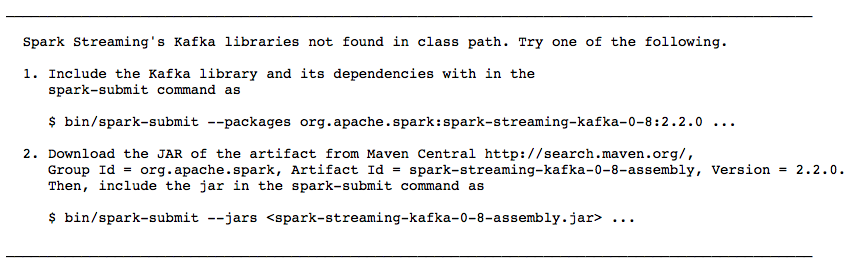

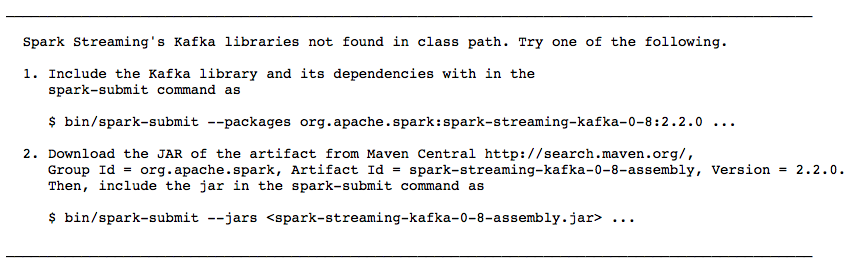

I am submitting a Kafka streaming job. The submit command is: `spark-submit --jars spark-streaming-kafka-0-8-assembly_2.11-2.0.2.jar --master mesos://HOST:7077 --deploy-mode cluster --executor-memory 2g --total-executor-cores 50 --conf spark.default.parallelism=600 --conf spark.streaming.kafka.maxRatePerPartition=30 http://HOST:PORT/main.py`. At run time the job fails with an error saying the jar is missing.
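
For readability, the submit command can be laid out one option per line. The sketch below is only an illustration: it assembles the argument list and prints the resulting command line, it does not launch anything, and it does not by itself explain the missing-jar error. The option names are my reading of the command in the post, and `HOST` and `PORT` are the post's own placeholders.

```python
import shlex

# Options as read from the post; HOST and PORT are placeholders, not real values.
submit_args = [
    "spark-submit",
    "--jars", "spark-streaming-kafka-0-8-assembly_2.11-2.0.2.jar",
    "--master", "mesos://HOST:7077",
    "--deploy-mode", "cluster",
    "--executor-memory", "2g",
    "--total-executor-cores", "50",
    "--conf", "spark.default.parallelism=600",
    "--conf", "spark.streaming.kafka.maxRatePerPartition=30",
    "http://HOST:PORT/main.py",  # application file, fetched over HTTP
]

# Join into a single shell-safe command line for inspection.
command = shlex.join(submit_args)
print(command)
```

Printing the command this way makes it easy to check that each `--conf` pair and the `--jars` value are spelled as intended before submitting.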

I also tried putting the jar directly into the `$SPARK_HOME/lib` directory, but then the job fails with a different error when creating the SparkContext. Any advice or comments from the experts would be much appreciated!