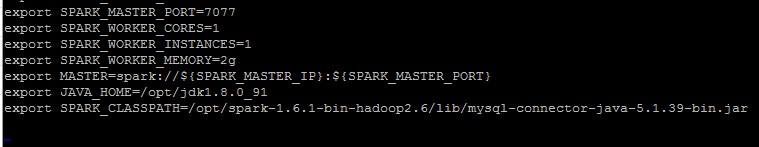

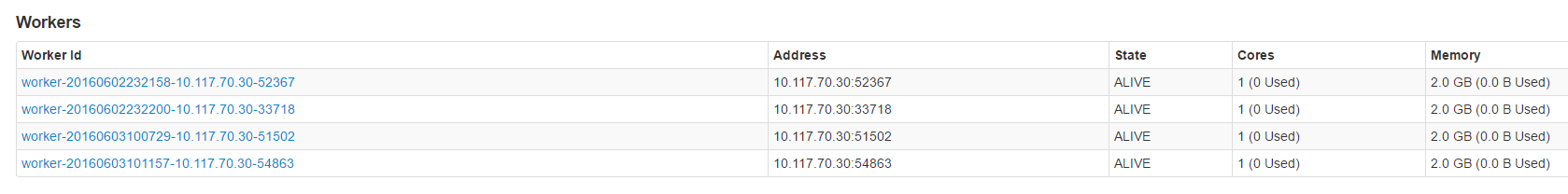

Could someone please help me look at this? I configured worker_instances=1, but sbin/start-all.sh started four worker nodes. Why does this happen? Is there something wrong with the way I start the cluster?

CodePudding user response:

Take a look at the slaves file in your $SPARK_HOME/conf directory.

CodePudding user response:

Original poster, how did you solve this? I have run into the same problem. I did not set this parameter, and two workers should start, but running the script brings up four.

CodePudding user response:

Hello, I have run into this as well. Could you tell me how you solved it? What is set up wrong?

CodePudding user response:

I have run into this problem too. Shutting down the Spark cluster and starting it again fixed it. The likely cause is that the cluster start script was run twice, on different nodes. Normally it should be run only once, on the master node (this applies to a Spark cluster built with a designated master node). If the cluster is configured for Spark HA, I am not sure whether the same applies.
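
For the conf/slaves suggestion earlier in the thread, here is a rough Python sketch for estimating how many workers a single run of the start script should bring up. It assumes standalone mode, where the script starts the configured number of worker instances on every host listed in conf/slaves. The function names and the sample host names (node1 to node4) are made up for illustration and are not taken from the original poster's setup.

```python
def parse_slaves(text):
    """Return host entries from conf/slaves content, skipping blanks and # comments."""
    hosts = []
    for line in text.splitlines():
        # Drop anything after '#' and surrounding whitespace.
        entry = line.split("#", 1)[0].strip()
        if entry:
            hosts.append(entry)
    return hosts


def expected_workers(slaves_text, worker_instances=1):
    """Estimate workers started by one run: listed hosts times instances per host."""
    return len(parse_slaves(slaves_text)) * worker_instances


# Hypothetical conf/slaves content with four hosts.
sample = """# hypothetical conf/slaves content
node1
node2

node3
node4
"""

print(expected_workers(sample, worker_instances=1))  # prints 4
```

If the estimate matches what the web UI shows, four workers with worker_instances=1 may simply mean four hosts are listed. If the UI shows more workers than the estimate, a second start on another node, as described above, is a plausible cause.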