Can I build the image, push it to the GitLab registry, and deploy it to AWS EC2 using this CI/CD configuration?

```yaml
stages:
  - build
  - deploy

build:
  # Use the official docker image.
  image: docker:latest
  stage: build
  services:
    - docker:dind
  before_script:
    - docker login -u "$CI_REGISTRY_USER" -p "$CI_REGISTRY_PASSWORD" $CI_REGISTRY
  # Default branch leaves tag empty (= latest tag)
  # All other branches are tagged with the escaped branch name (commit ref slug)
  script:
    - |
      if [[ "$CI_COMMIT_BRANCH" == "$CI_DEFAULT_BRANCH" ]]; then
        tag=""
        echo "Running on default branch '$CI_DEFAULT_BRANCH': tag = 'latest'"
      else
        tag=":$CI_COMMIT_REF_SLUG"
        echo "Running on branch '$CI_COMMIT_BRANCH': tag = $tag"
      fi
    - docker build --pull -t "$CI_REGISTRY_IMAGE${tag}" .
    - docker push "$CI_REGISTRY_IMAGE${tag}"
  # Run this job in a branch where a Dockerfile exists

deploy:
  stage: deploy
  before_script:
    - echo "$SSH_PRIVATE_KEY" | tr -d '\r' | ssh-add -
    - mkdir -p ~/.ssh
    - chmod 700 ~/.ssh
  script:
    - ssh -o StrictHostKeyChecking=no ubuntu@18.0.0.82 "sudo docker login -u $CI_REGISTRY_USER --password-stdin $CI_REGISTRY; sudo docker pull $CI_REGISTRY_IMAGE${tag}; cd /home/crud_app; sudo docker-compose up -d"
  after_script:
    - sudo docker logout
  rules:
    - if: $CI_COMMIT_BRANCH
      exists:
        - Dockerfile
```

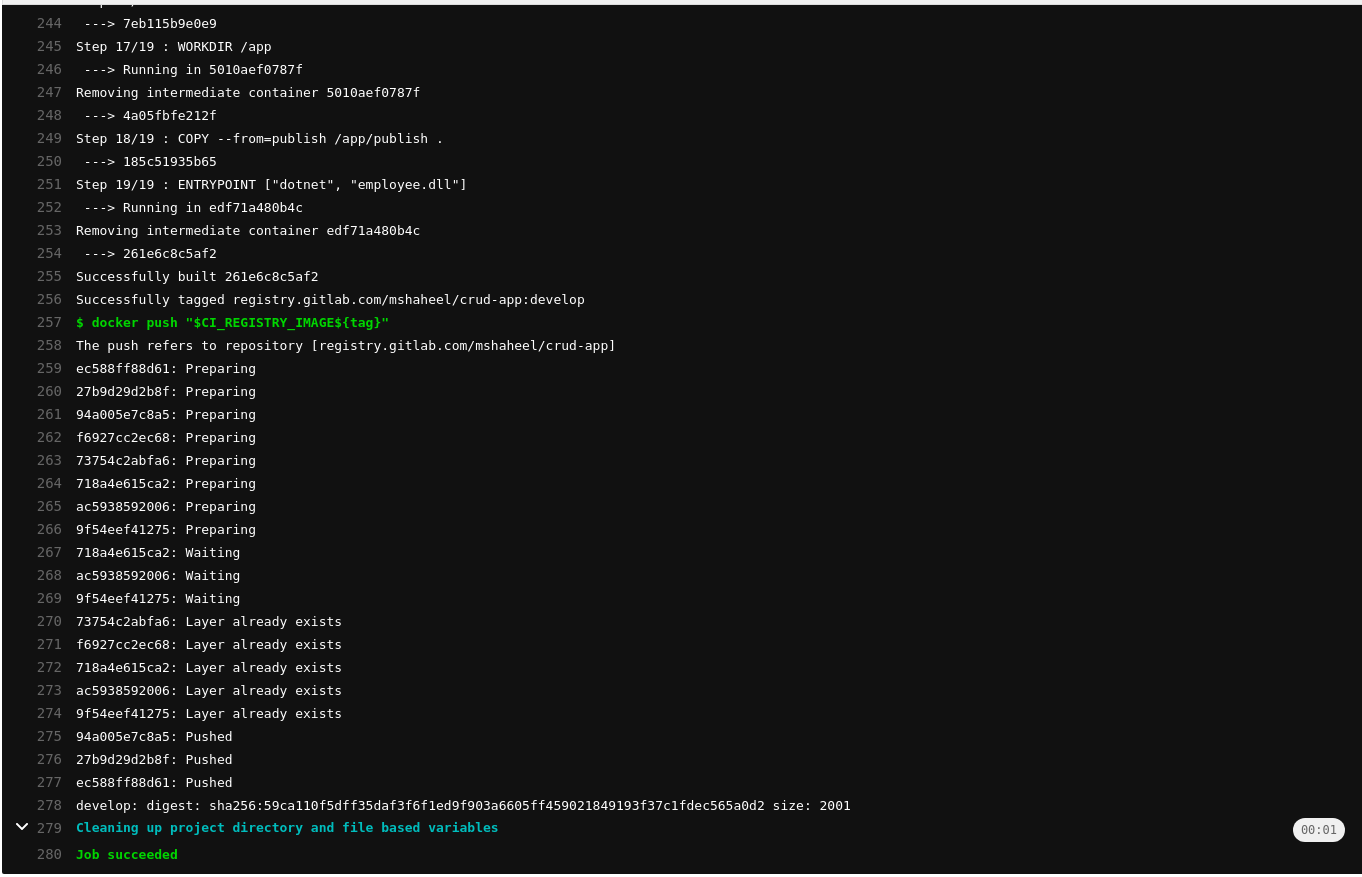

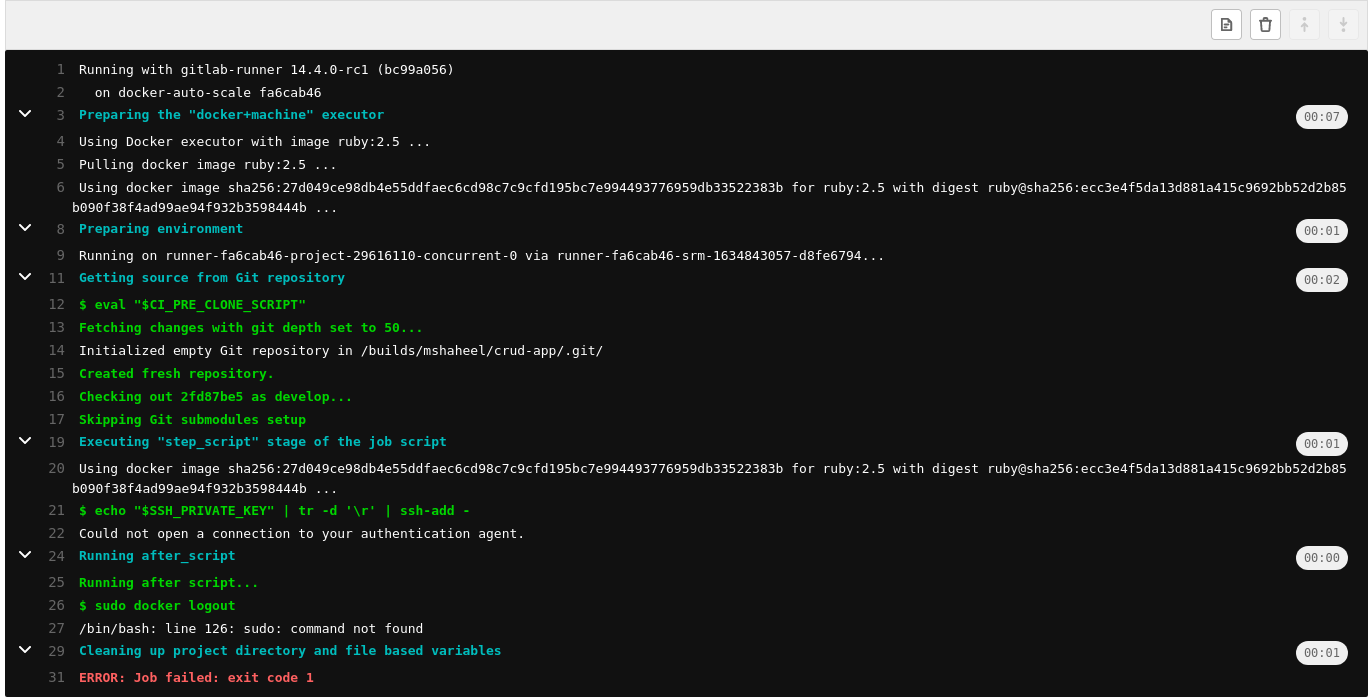

The build job succeeds, but the deploy job fails.

The configuration should both build and deploy the image.

Answer:

There are a couple of errors, but overall the pipeline looks good.

- You cannot use `ssh-add` without having the agent running.
- Why do you create the `.ssh` folder manually if you then explicitly ignore the host key that would be stored in `known_hosts`?
- Using `StrictHostKeyChecking=no` is dangerous and strongly discouraged.

Add the following to `before_script`:

```yaml
before_script:
  - eval `ssh-agent`
  - echo "$SSH_PRIVATE_KEY" | tr -d '\r' | ssh-add -
  - mkdir -p ~/.ssh
  - ssh-keyscan -H 18.0.0.82 >> ~/.ssh/known_hosts
```
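For reference, here is roughly the same setup written as a single POSIX shell function. It is a sketch under stated assumptions, not a drop-in replacement, and it swaps one technique: instead of running `ssh-keyscan` during the job (which trusts whatever key the host presents at that moment), it assumes a CI/CD variable, here called `SSH_KNOWN_HOSTS` (an assumed name), holding `ssh-keyscan` output you captured once from a machine you trust and whose fingerprint you checked.

```shell
#!/bin/sh
# Sketch of the deploy job's SSH setup as one POSIX sh function.
# Assumes: SSH_PRIVATE_KEY holds the private key, and SSH_KNOWN_HOSTS
# (hypothetical variable name) holds pre-verified ssh-keyscan output.
setup_ssh() {
  # Start an agent; `ssh-agent -s` prints sh code that exports
  # SSH_AUTH_SOCK and SSH_AGENT_PID, which eval applies to this shell.
  agent_env=$(ssh-agent -s) || return 1
  eval "$agent_env" > /dev/null

  # Load the key from the variable, stripping Windows line endings.
  printf '%s\n' "$SSH_PRIVATE_KEY" | tr -d '\r' | ssh-add - || return 1

  # ~/.ssh must exist (and be private) before known_hosts is written.
  mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh" || return 1

  # Trust only the host key that was recorded ahead of time.
  printf '%s\n' "$SSH_KNOWN_HOSTS" >> "$HOME/.ssh/known_hosts" || return 1
  chmod 644 "$HOME/.ssh/known_hosts"
}
```

Because `before_script` and `script` run in the same shell, the agent started here is still available to the later `ssh`/`scp` commands.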

Also, don't use `sudo` with your `ubuntu` user. Instead, add it to the `docker` group, or connect over SSH as a user that is already in the `docker` group.

If your EC2 instance doesn't have a `docker` group yet, now is a good time to set one up.

Log in to your EC2 instance and create the `docker` group:

```shell
$ sudo groupadd docker
```

Add the `ubuntu` user to the `docker` group:

```shell
$ sudo usermod -aG docker ubuntu
```

Group changes only apply to new login sessions, so log out and back in before testing `docker` without `sudo`.
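To confirm the change, you can check the user's groups with the standard `id -nG` command. The small helper below is hypothetical, just a convenience wrapper. Keep in mind that, per Docker's documentation, membership in the `docker` group grants root-level privileges on that host.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if user $1 is a member of group $2.
# `id -nG USER` prints the names of all groups USER belongs to.
in_group() {
  id -nG "$1" | tr ' ' '\n' | grep -qx -- "$2"
}

# Example on the EC2 instance:
#   in_group ubuntu docker && echo "ubuntu can run docker without sudo"
```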

Now change your script to:

```yaml
script:
  - echo "$CI_REGISTRY_PASSWORD" > docker_password
  - scp docker_password ubuntu@18.0.0.82:~/tmp/docker_password
  - ssh ubuntu@18.0.0.82 "cat ~/tmp/docker_password | docker login -u $CI_REGISTRY_USER --password-stdin $CI_REGISTRY; docker pull $CI_REGISTRY_IMAGE${tag}; cd /home/crud_app; docker-compose up -d; docker logout $CI_REGISTRY; rm -f ~/tmp/docker_password"
```
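If you'd rather not write the password to a file at all, a variant is to pipe it straight through `ssh`: `ssh` forwards its own stdin to the remote command, so `docker login --password-stdin` on the EC2 host can read it from there. A sketch as a shell function; the function name and its two arguments are hypothetical, the `/home/crud_app` path comes from the question, and it assumes the remote user is already in the `docker` group.

```shell
#!/bin/sh
# Sketch: log in, pull and restart on the EC2 host without a password file.
# Assumes GitLab's CI_REGISTRY, CI_REGISTRY_USER and CI_REGISTRY_PASSWORD
# are set, and that the remote user can run docker without sudo.
remote_deploy() {
  host="$1"   # e.g. ubuntu@18.0.0.82
  image="$2"  # full image reference to pull
  # The password travels on ssh's stdin, never on a command line or disk.
  # Variables are expanded locally; \$? and \$status are left for the remote shell.
  printf '%s\n' "$CI_REGISTRY_PASSWORD" | ssh "$host" \
    "docker login -u '$CI_REGISTRY_USER' --password-stdin '$CI_REGISTRY' \
     && docker pull '$image' \
     && cd /home/crud_app && docker-compose up -d; \
     status=\$?; docker logout '$CI_REGISTRY'; exit \$status"
}
```

The remote command logs out of the registry even when a step fails, then exits with the status of the login/pull/compose chain so the CI job still fails on errors.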

Also, remember that `after_script` doesn't run on the EC2 instance but on the runner, inside the job's image, so there is nothing to log out of there. It would be a good idea to kill the SSH agent, though.
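One catch: GitLab runs `after_script` in a new shell, separate from `before_script` and `script`, so the `SSH_AGENT_PID` variable exported when the agent started is not defined there. If you want the cleanup in `after_script` (which also runs when `script` fails), one workaround is to save the agent's environment to a file in the project directory and re-read it. A sketch; the helper names and the `.ssh-agent.env` file name are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers: keep the ssh-agent environment in a file so a
# fresh shell (GitLab's after_script) can still find and stop the agent.
AGENT_ENV_FILE="${CI_PROJECT_DIR:-$PWD}/.ssh-agent.env"

# Call from before_script: start the agent and load its variables.
start_agent() {
  ssh-agent -s > "$AGENT_ENV_FILE" || return 1
  . "$AGENT_ENV_FILE" > /dev/null
}

# Call from after_script: re-read the variables, then kill the agent.
stop_agent() {
  [ -f "$AGENT_ENV_FILE" ] || return 0   # agent was never started
  . "$AGENT_ENV_FILE" > /dev/null
  rm -f "$AGENT_ENV_FILE"
  ssh-agent -k > /dev/null
}
```

In `.gitlab-ci.yml` this boils down to `ssh-agent -s > .ssh-agent.env && . ./.ssh-agent.env` in `before_script` and `. ./.ssh-agent.env && ssh-agent -k` in `after_script`.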

Final job:

```yaml
deploy:
  stage: deploy
  before_script:
    - eval `ssh-agent`
    - echo "$SSH_PRIVATE_KEY" | tr -d '\r' | ssh-add -
    - mkdir -p ~/.ssh
    - ssh-keyscan -H 18.0.0.82 >> ~/.ssh/known_hosts
  script:
    - echo "$CI_REGISTRY_PASSWORD" > docker_password
    - scp docker_password ubuntu@18.0.0.82:~/tmp/docker_password
    - ssh ubuntu@18.0.0.82 "cat ~/tmp/docker_password | docker login -u $CI_REGISTRY_USER --password-stdin $CI_REGISTRY; docker pull $CI_REGISTRY_IMAGE${tag}; cd /home/crud_app; docker-compose up -d; docker logout $CI_REGISTRY; rm -f ~/tmp/docker_password"
    # SSH_AGENT_PID is not set in after_script's separate shell, so stop the agent here
    - ssh-agent -k
  after_script:
    - rm -f docker_password
  rules:
    - if: $CI_COMMIT_BRANCH
      exists:
        - Dockerfile
```
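Note that `tag` is a shell variable set inside the build job's `script`, and shell variables don't carry over to other jobs, so in `deploy` the expression `$CI_REGISTRY_IMAGE${tag}` always expands to the untagged (`latest`) image, even on feature branches. A minimal sketch that recomputes the reference from GitLab's predefined variables, mirroring the build job's logic in POSIX `sh` (the `image_ref` name is hypothetical):

```shell
#!/bin/sh
# Hypothetical helper: rebuild the image reference inside the deploy job
# using the same rule as the build job: no suffix on the default branch
# (Docker then uses :latest), ":<ref slug>" on every other branch.
image_ref() {
  if [ "$CI_COMMIT_BRANCH" = "$CI_DEFAULT_BRANCH" ]; then
    printf '%s\n' "$CI_REGISTRY_IMAGE"
  else
    printf '%s:%s\n' "$CI_REGISTRY_IMAGE" "$CI_COMMIT_REF_SLUG"
  fi
}

# Example use in the deploy job's script:
#   IMAGE="$(image_ref)"
#   ssh ubuntu@18.0.0.82 "docker pull $IMAGE"
```

If you'd rather compute the tag only once, GitLab's `artifacts:reports:dotenv` lets the build job export a variable to later jobs; that is an alternative design, not what this sketch does.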