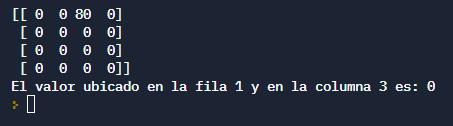

I have this code, which creates a zero matrix of a given size (m x n). I want to set a value at a specific position in that matrix and then read it back, but I've tried everything I know and it still doesn't work. Here's my code:

```python
import numpy as np

class Matrix:

    def __init__(self, m, n):
        self.row = m
        self.column = n

    def define_matrix(self):
        return np.zeros((self.row, self.column), dtype=int)

    def __str__(self):
        return self.define_matrix()

    def get(self, a, b):
        try:
            if not a in range(self.row + 1):
                # "The requested value is not in the matrix."
                print('El valor deseado no pertenece a la matriz.')
            elif not b in range(self.column + 1):
                print('El valor deseado no pertenece a la matriz.')
            else:
                # "The value at row {} and column {} is: {}"
                print(
                    'El valor ubicado en la fila {} '
                    'y en la columna {} es: {}'
                    .format(a, b, self.define_matrix()[a - 1][b - 1]))
        except:
            # "Please enter valid data."
            print('Ingrese datos válidos')

    def set(self, value, a, b):
        matrix1 = self.define_matrix()
        if not a in range(self.row + 1):
            print('El valor deseado no pertenece a la matriz.')
        elif not b in range(self.column + 1):
            print('El valor deseado no pertenece a la matriz.')
        else:
            matrix1[a-1][b-1] = value
        return matrix1

a = Matrix(4, 4)  # matrix could be any size
a.set(80, 1, 3)
a.get(1, 3)
```

And here's what I get:

Answer:

```python
import numpy as np

class Matrix:

    def __init__(self, m, n):
        self.row = m
        self.column = n

    def define_matrix(self):
        return np.zeros((self.row, self.column), dtype=int)

    def __str__(self):
        return self.define_matrix()

    def get(self, a, b):
        try:
            if not a in range(self.row + 1):
                print('El valor deseado no pertenece a la matriz.')
            elif not b in range(self.column + 1):
                print('El valor deseado no pertenece a la matriz.')
            else:
                print(
                    'El valor ubicado en la fila {} '
                    'y en la columna {} es: {}'
                    .format(a, b, self.define_matrix()[a - 1][b - 1]))
                # define_matrix() always returns a new matrix of zeros.
        except:
            print('Ingrese datos válidos')

    def set(self, value, a, b):
        matrix1 = self.define_matrix()
        if not a in range(self.row + 1):
            print('El valor deseado no pertenece a la matriz.')
        elif not b in range(self.column + 1):
            print('El valor deseado no pertenece a la matriz.')
        else:
            matrix1[a-1][b-1] = value
        # Returns the matrix, but it is never stored in the Matrix object.
        # To fix this, keep matrix1 as an attribute of Matrix
        # instead of only returning it.
        return matrix1

a = Matrix(4, 4)  # matrix could be any size
a.set(80, 1, 3)   # return value is not assigned to anything, so it is discarded
a.get(1, 3)
```
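To confirm this diagnosis, you can keep the return value of set instead of discarding it. The trimmed copy below drops the bounds checks, __str__ and get for brevity; it only shows that the 80 does land in the array set returns, while any new call to define_matrix (which is what get uses) starts again from zeros.

```python
import numpy as np

class Matrix:
    """Trimmed copy of the question's class (bounds checks omitted)."""

    def __init__(self, m, n):
        self.row = m
        self.column = n

    def define_matrix(self):
        # Builds a brand-new zero matrix on every call
        return np.zeros((self.row, self.column), dtype=int)

    def set(self, value, a, b):
        matrix1 = self.define_matrix()  # local, throwaway array
        matrix1[a - 1][b - 1] = value
        return matrix1

a = Matrix(4, 4)
returned = a.set(80, 1, 3)      # keep the return value this time
print(returned[0][2])           # 80: the value is in the returned array
print(a.define_matrix()[0][2])  # 0: get() reads from a fresh array like this one
```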

Here is the modified code:

```python
import numpy as np

class Matrix:

    def __init__(self, m, n):
        self.row = m
        self.column = n
        # Create the matrix once and store it on the object,
        # so set() and get() work on the same array
        self.matrix1 = np.zeros((self.row, self.column), dtype=int)

    def __str__(self):
        return str(self.matrix1)  # EDIT: forgot to modify this line.
        # fatal mistake on my part :(

    def get(self, a, b):
        try:
            # Valid 1-based indices are 1..row and 1..column; starting the
            # range at 1 also rejects 0, which would silently wrap to index -1
            if not a in range(1, self.row + 1):
                print('El valor deseado no pertenece a la matriz.')
            elif not b in range(1, self.column + 1):
                print('El valor deseado no pertenece a la matriz.')
            else:
                print(
                    'El valor ubicado en la fila {} '
                    'y en la columna {} es: {}'
                    .format(a, b, self.matrix1[a - 1][b - 1]))
        except Exception:
            print('Ingrese datos válidos')

    def set(self, value, a, b):
        if not a in range(1, self.row + 1):
            print('El valor deseado no pertenece a la matriz.')
        elif not b in range(1, self.column + 1):
            print('El valor deseado no pertenece a la matriz.')
        else:
            self.matrix1[a-1][b-1] = value
        return self.matrix1

a = Matrix(4, 4)  # matrix could be any size
a.set(80, 1, 3)
a.get(1, 3)
```
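If you would rather have get return the value (so the caller can use it) and have invalid positions raise an error instead of printing a message, a sketch could look like the following. This is a hypothetical variant, not code from either answer: the attribute name data, the helper _check and the choice of IndexError are my own assumptions.

```python
import numpy as np

class Matrix:
    """Hypothetical variant: 1-based indices, errors raised instead of printed."""

    def __init__(self, m, n):
        self.row = m
        self.column = n
        # Created once, so every method works on the same array
        self.data = np.zeros((m, n), dtype=int)

    def _check(self, a, b):
        # Valid 1-based positions are 1..row and 1..column (0 is rejected)
        if a not in range(1, self.row + 1) or b not in range(1, self.column + 1):
            raise IndexError(f'({a}, {b}) is outside a {self.row}x{self.column} matrix')

    def get(self, a, b):
        self._check(a, b)
        return int(self.data[a - 1, b - 1])

    def set(self, value, a, b):
        self._check(a, b)
        self.data[a - 1, b - 1] = value

    def __str__(self):
        return str(self.data)

a = Matrix(4, 4)
a.set(80, 1, 3)
print(a.get(1, 3))  # 80
```

Raising an exception lets the calling code decide how to report the problem (in Spanish, English or otherwise) instead of hard-coding a message inside the class.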

I apologize if I got anything wrong as I don't understand Spanish.

Answer:

Your problem comes from the fact that you are calling self.define_matrix()[a - 1][b - 1] within the get method.

define_matrix() builds a new matrix with np.zeros((self.row, self.column), dtype=int) every time it is called, so get always reads from a fresh matrix of zeros. No matter which value you insert with the set method, what you end up showing is 0: set writes into its own temporary matrix, which is thrown away as soon as set returns.
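If you want to keep the idea of a method that builds the matrix, one option is to build it lazily on first use and cache it on the instance with functools.cached_property (available since Python 3.8). The property name matrix is hypothetical, and bounds checking is left out to keep the sketch short.

```python
from functools import cached_property  # Python 3.8+

import numpy as np

class Matrix:
    def __init__(self, m, n):
        self.row = m
        self.column = n

    @cached_property
    def matrix(self):  # hypothetical name
        # Runs only on first access; the result is then stored on the instance,
        # so later accesses return the same array instead of new zeros
        return np.zeros((self.row, self.column), dtype=int)

    def set(self, value, a, b):
        self.matrix[a - 1][b - 1] = value

    def get(self, a, b):
        return self.matrix[a - 1][b - 1]

a = Matrix(4, 4)
a.set(80, 1, 3)
print(a.get(1, 3))  # 80
```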