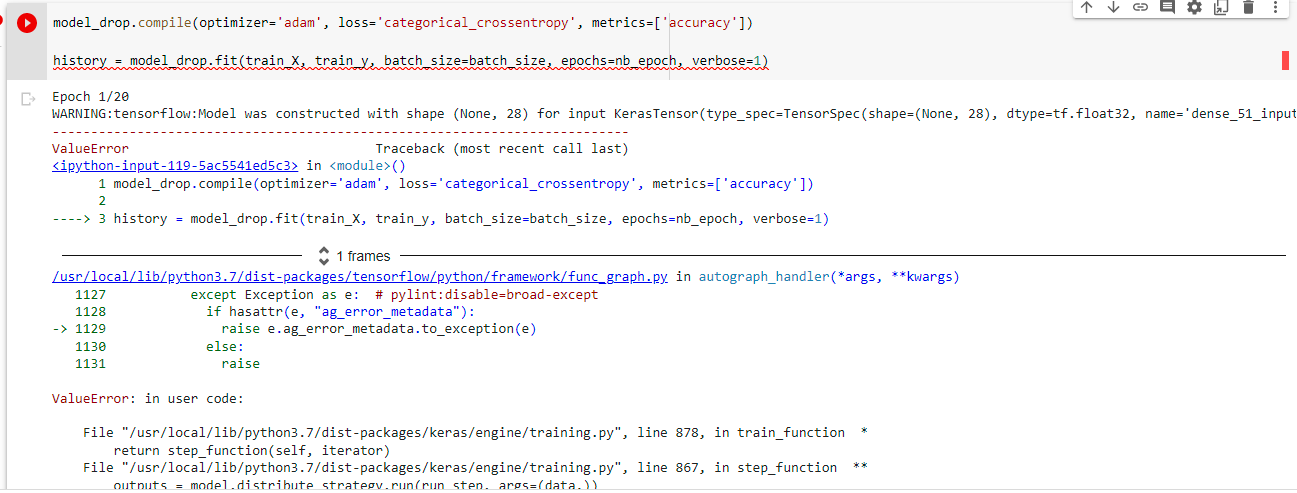

I am getting an error. It says:

Model was constructed with shape (None, 28) for input KerasTensor(type_spec=TensorSpec(shape=(None, 28), dtype=tf.float32, name='dense_45_input'), name='dense_45_input', description="created by layer 'dense_45_input'"), but it was called on an input with incompatible shape (None, 28, 28).

My code is here:

from keras.utils import np_utils

from keras.datasets import mnist

from keras.models import Sequential

from keras.layers import Dense, BatchNormalization, Dropout, Activation

import seaborn as sns

from keras.initializers import RandomNormal

from keras.initializers import he_normal

import matplotlib.pyplot as plt

(train_X, train_y), (test_X, test_y) = mnist.load_data()

output_dim = 10

input_dim = train_X.shape[1]

batch_size = 128

nb_epoch = 20

model_drop = Sequential()

model_drop.add(Dense(512, activation='relu', input_shape=(input_dim,),kernel_initializer=he_normal(seed=None)))

model_drop.add(BatchNormalization())

model_drop.add(Dropout(0.5))

model_drop.add(Dense(128, activation= 'relu', kernel_initializer=he_normal(seed=None)))

model_drop.add(BatchNormalization())

model_drop.add(Dropout(0.5))

model_drop.add(Dense(output_dim, activation = 'softmax'))

model_drop.summary()

model_drop.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

history = model_drop.fit(train_X, train_y, batch_size=batch_size, epochs=nb_epoch, verbose=1)

How can I fix this? I have also attached a screenshot of the error.

CodePudding user response:

The input dimension you pass to the first Dense layer is wrong. `input_dim = train_X.shape[1]` is 28, but each image is 28x28, so the layer needs to receive all the pixels (i.e. 28*28 = 784 inputs). To get this working you also need to reshape the images into flat vectors of length 784 and one-hot encode the y labels, because `categorical_crossentropy` expects one-hot targets.

(train_X, train_y), (test_X, test_y) = mnist.load_data()

output_dim = 10

input_dim = train_X.shape[1]

batch_size = 128

nb_epoch = 20

model_drop = Sequential()

# input_shape is now (28*28,) = (784,) to match the flattened images

model_drop.add(Dense(512, activation='relu', input_shape=(input_dim*input_dim,),kernel_initializer=he_normal(seed=None)))

model_drop.add(BatchNormalization())

model_drop.add(Dropout(0.5))

model_drop.add(Dense(128, activation= 'relu', kernel_initializer=he_normal(seed=None)))

model_drop.add(BatchNormalization())

model_drop.add(Dropout(0.5))

model_drop.add(Dense(output_dim, activation = 'softmax'))

model_drop.summary()

model_drop.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# one-hot encode the labels and flatten the images so they can be fed to the Dense layer

Y_train = np_utils.to_categorical(train_y, num_classes=10)

X_train = train_X.reshape((-1, 28*28))

history = model_drop.fit(X_train, Y_train, batch_size=batch_size, epochs=nb_epoch, verbose=1)
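If you want to check the shapes before training, the reshape and one-hot steps above can be sketched with NumPy alone. This is only an illustration: `flatten_images` and `one_hot` are hypothetical helper names (not Keras functions), and the arrays are zero-filled stand-ins that assume the same 28x28 uint8 layout as MNIST.

```python
import numpy as np

def flatten_images(images):
    """Reshape (n, height, width) images to (n, height * width) rows."""
    return images.reshape((images.shape[0], -1))

def one_hot(labels, num_classes):
    """Return an (n, num_classes) float32 matrix with a single 1.0 per row."""
    encoded = np.zeros((labels.shape[0], num_classes), dtype=np.float32)
    encoded[np.arange(labels.shape[0]), labels] = 1.0
    return encoded

# Stand-in data (assumption: same shapes and dtypes as the MNIST arrays).
fake_X = np.zeros((4, 28, 28), dtype=np.uint8)
fake_y = np.array([3, 0, 9, 1], dtype=np.uint8)

X_flat = flatten_images(fake_X)
Y_onehot = one_hot(fake_y, num_classes=10)

print(fake_X.shape[1])  # 28, which is what input_dim was in the question
print(X_flat.shape)     # (4, 784), which is what the first Dense layer needs
print(Y_onehot.shape)   # (4, 10), one column per class
```

The first print shows why the original model was built for shape (None, 28): `shape[1]` is only the image height, not the number of pixels.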