I have an existing pandas dataframe below:

id time c d

0 1 1 2 3

1 1 3 1 6

2 2 2 3 2

3 2 3 8 6

I also have values stored in a list such as:

list = [0.4, 0.6]

I want to create a new column in the existing dateframe and assign each list element in the first row for each group (id) such as:

id time c d new_col

0 1 1 2 3 0.4

1 1 3 1 6

2 2 2 3 2 0.6

3 2 3 8 6

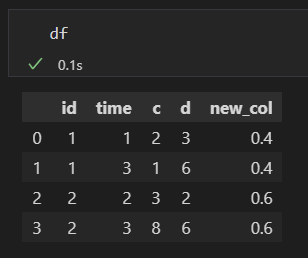

CodePudding user response:

Does this help solve your problem? (I can't see any benefit to only putting that value in the first occurrence of the group and not the other values)

mapping = {1: 0.4, 2: 0.6}

df["new_col"] = df['id'].map(mapping)

Result:

Note: If all of your ids are sequential integers starting at 1 and all of the values in your list are also in that correct order you could convert it to a mapping dict using:

mapping = {i 1: value for i, value in enumerate(your_list)}

CodePudding user response:

Group the dataframe by id and use cumcount to create a sequential counter, then use boolean indexing with loc to assign the list values where the value of the counter is 0

lst = [0.4, 0.6]

df.loc[df.groupby('id').cumcount().eq(0), 'new_col'] = lst

id time c d new_col

0 1 1 2 3 0.4

1 1 3 1 6 NaN

2 2 2 3 2 0.6

3 2 3 8 6 NaN

CodePudding user response:

One way using pandas.DataFrame.groupby.ngroup with numpy.take:

l = [0.4, 0.6]

gid = df.groupby("id").ngroup()

df["new_col"] = np.where(gid.duplicated(), "", np.take(l, gid))

Output:

id time c d new_col

0 1 1 2 3 0.4

1 1 3 1 6

2 2 2 3 2 0.6

3 2 3 8 6

Explain:

The difficulty here is to apply only to the first row of each group.

Applying duplicated to the taken value is dangerous since it will remove values in another group should the values happened to duplicate (e.g. if l == [0.4, 0.6, 0.4]).

So duplicated is applied to gid to assert we extract the first row of each group.

df.groupby("id").ngroup(): enumerates the group to create indices. Note that this is invulnerable to the type ofdf["id"], such as["a", "a", "b", "b"].In case

df["id"]is always numeric and starts from1, you can replace this withgid = df["id"] - 1np.take(l, gid): With the generated indices, take the list elements.np.where(gid.duplicated, ...): To ensure the taken values fromlis only applied to the first rows of each group (a.k.a. non-duplicates), and the rest gets filled with"".

CodePudding user response:

Use Series.map with dictionary created by enumerate and set values only first per groups in Series.mask with Series.duplicated:

L=[0.4, 0.6]

df["new_col"] = df['id'].sub(1).map(dict(enumerate(L))).mask(df['id'].duplicated())

print (df)

id time c d new_col

0 1 1 2 3 0.4

1 1 3 1 6 NaN

2 2 2 3 2 0.6

3 2 3 8 6 NaN

L=[0.4, 0.6]

df["new_col"] = df['id'].sub(1).map(dict(enumerate(L))).mask(df['id'].duplicated(),'')

print (df)

id time c d new_col

0 1 1 2 3 0.4

1 1 3 1 6

2 2 2 3 2 0.6

3 2 3 8 6

If possible any groups in id, e.g. 10, 20 use GroupBy.ngroup:

L=[0.4, 0.6]

df["new_col"] = (df.groupby('id').ngroup().map(dict(enumerate(L)))

.mask(df['id'].duplicated(),''))

print (df)

id time c d new_col

0 10 1 2 3 0.4

1 10 3 1 6

2 20 2 3 2 0.6

3 20 3 8 6