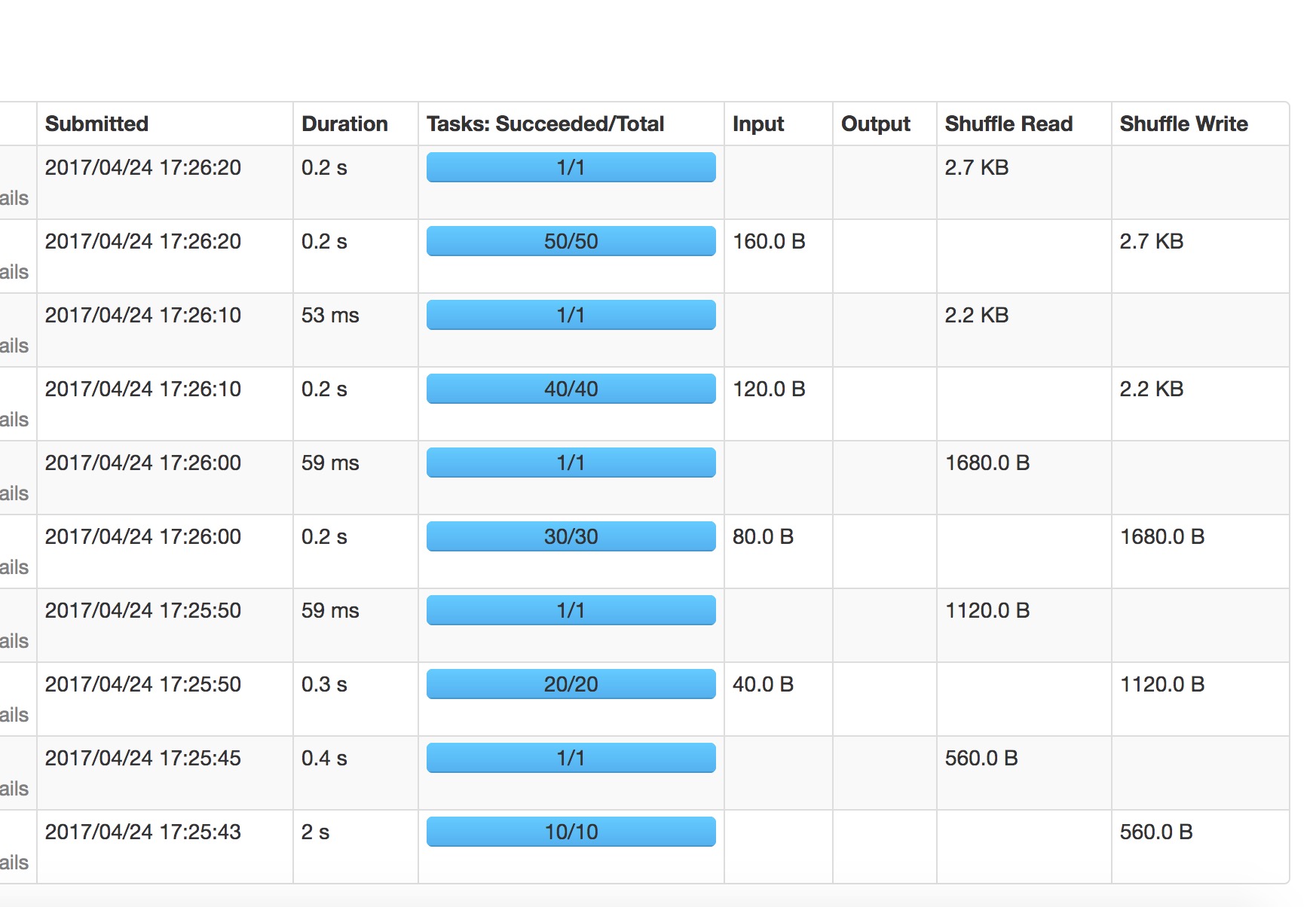

My window length is 1 day with a 10-second slide interval, but every sliding-window operation increases the number of tasks, and the execution time keeps getting longer. What is the reason for this? I want the number of tasks in each stage to be fixed, so why do the following property settings not take effect?

set("spark.sql.shuffle.partitions", "30");

set("spark.default.parallelism", "30");

Even when I send no data, the number of tasks keeps growing: 10, 20, 30, and so on.

I am not sending any data into Spark, so why does the input value keep increasing?

CodePudding user response:

The window length is one day and it slides every ten seconds, but the number of blocks increases on every slide, so the number of tasks increases with it and execution slows down. How can the blocks be merged? So many blocks are generated each time, and they are of no use.

CodePudding user response:

Has your window not filled up with data yet? While the data in the window is still growing, the number of blocks naturally grows with it.

CodePudding user response:

Yes, the data has also been growing. When I receive data with createStream, the number of tasks can be fixed by my configuration parameters, but with createDirectStream it cannot. As long as the window is not yet full, the number of tasks keeps increasing, and setting the parameters does not control it.
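
The growth discussed above can be sketched with some simple arithmetic. This is only a rough model, not a confirmed diagnosis. It assumes that the windowed stream is built as a union of the per-batch RDDs, that the batch interval equals the 10-second slide, and that each createDirectStream batch contributes a fixed number of partitions even when it carries no data. The function name `windowed_partitions` and the value of 10 partitions per batch are hypothetical, the latter chosen only to match the reported 10, 20, 30 sequence.

```python
def windowed_partitions(elapsed_s, batch_s=10, window_s=86400, partitions_per_batch=10):
    """Estimate the partition (task) count of the windowed RDD after elapsed_s seconds.

    Assumption: the window is a plain union of its batch RDDs, so its
    partition count is the sum of the partition counts of those batches.
    """
    batches_so_far = elapsed_s // batch_s
    # Once the window is full, old batches drop out as new ones arrive.
    batches_in_window = min(batches_so_far, window_s // batch_s)
    return batches_in_window * partitions_per_batch


if __name__ == "__main__":
    for t in (10, 20, 30, 3600, 86400, 2 * 86400):
        print(t, windowed_partitions(t))
```

Under these assumptions the task count rises by a fixed step on every slide and only levels off once a full day of batches (8640 of them) is inside the window. It would also be consistent with the two settings having no visible effect: spark.default.parallelism and spark.sql.shuffle.partitions set the partition count for shuffle operations, not for a union of existing RDDs.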