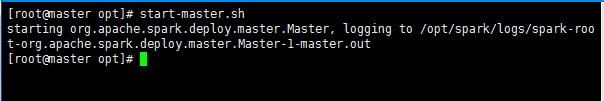

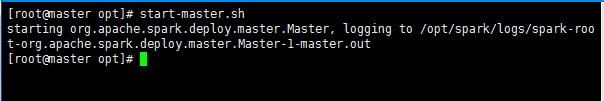

I set up a Spark cluster environment, configured it according to the documentation, and copied the files from the master node to each slave node. But when I run start-all.sh, what starts is Hadoop, and afterwards jps on each node shows no Master or Worker process. The only thing I can do is start them separately: start-master.sh on the master node and start-slave.sh on the slave node. Starting on the master node reports success:

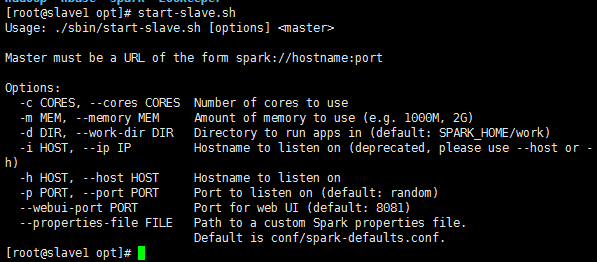

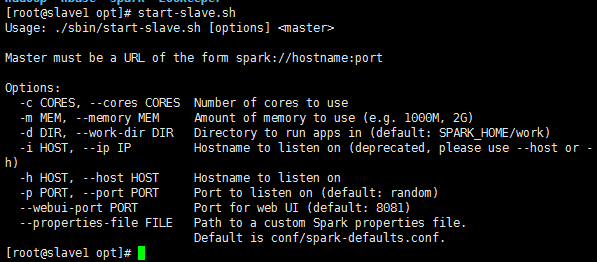

But starting from the slave node gives this error:

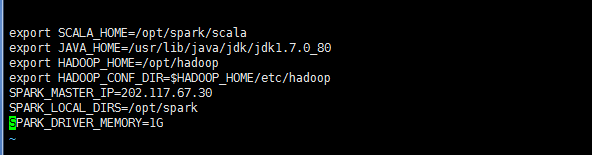

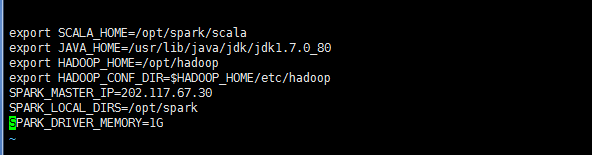

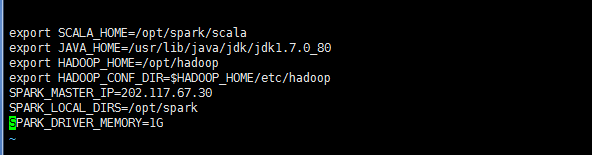

The worker won't start. Could any experts tell me the reason? My slaves file lists the correct slave node names, and my spark-env.sh configuration is below:

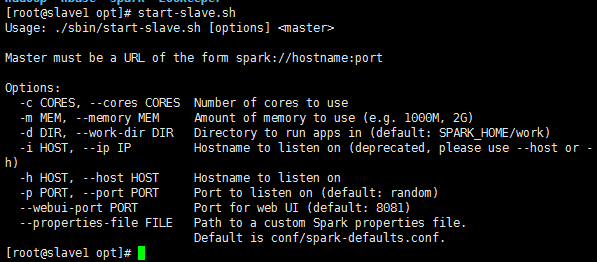

Where is my mistake?
