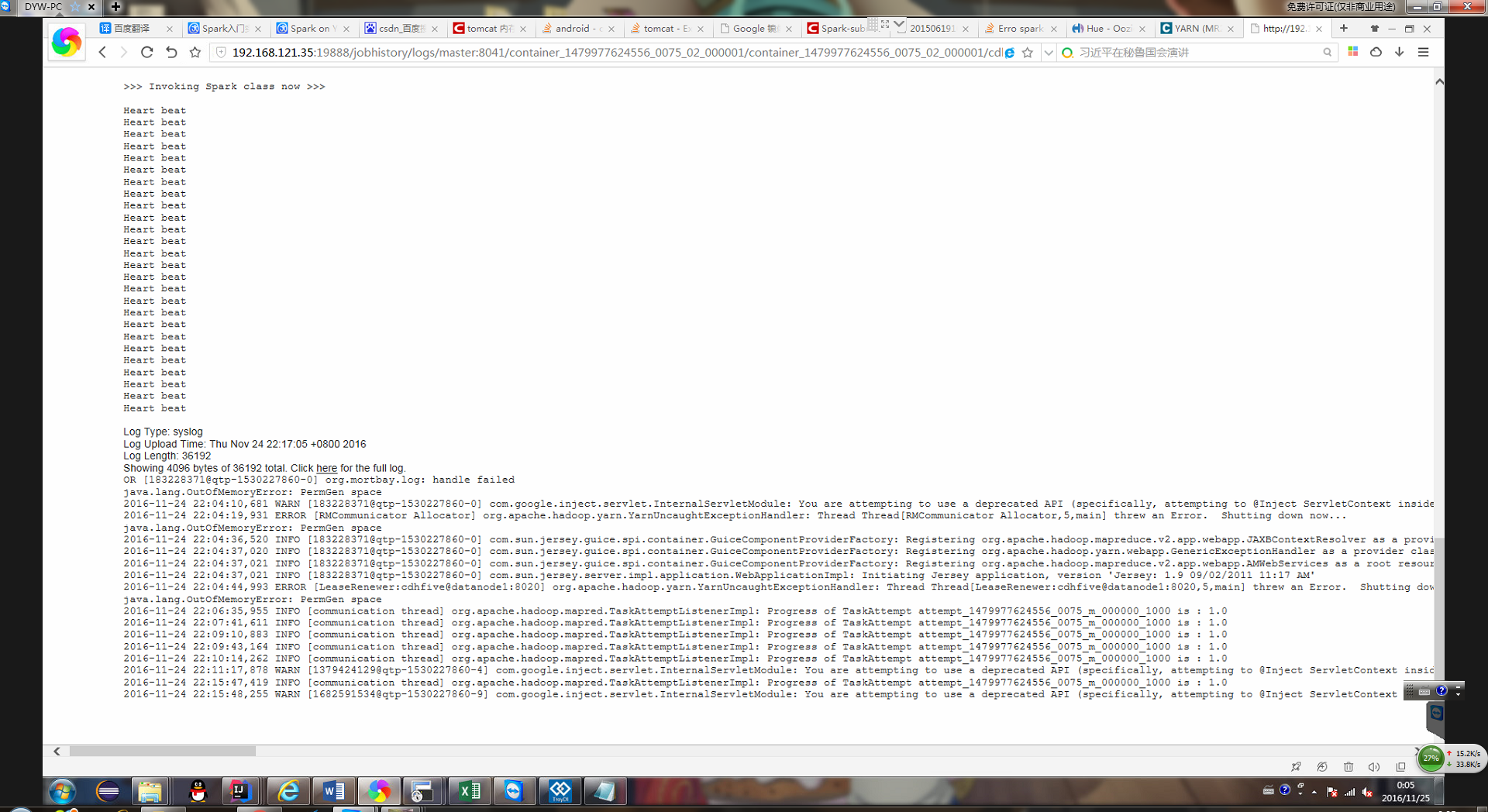

Advice online says to change spark.driver.extraJavaOptions in spark-defaults.conf to -XX:PermSize=128m -XX:MaxPermSize=256m. I changed it, but it still fails.

The log content is as follows:

CodePudding user response:

I'm sorry, my ability is limited and I can't help you with this.

CodePudding user response:

How much memory is allocated on each node of the cluster? The default is generally 1 GB. You also need to check how much memory is set for the driver.

CodePudding user response:

The driver generally gets 512 MB to 1 GB. Executor memory can be worked out with a rule of thumb: the ratio of cores to memory (in GB) is commonly 1:2 or 1:4. A single Worker node can host more than one Worker instance, generally the core count / 4, and this is configured in spark-env.sh.

In addition, how complex is this model of yours? If 100 records take this long to run, consider optimizing your code.
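To make the sizing rule of thumb from this reply concrete, here is a minimal Python sketch. The helper names `suggest_sizing` and `render_spark_env` are hypothetical and exist only for this illustration. The sketch assumes a standalone cluster where `SPARK_WORKER_INSTANCES`, `SPARK_WORKER_CORES`, and `SPARK_WORKER_MEMORY` are set in spark-env.sh, and it assumes the node's cores are split evenly across the Worker instances, which the reply does not state.

```python
def suggest_sizing(cores_per_node: int, gb_per_core: int = 2) -> dict:
    """Apply the rule of thumb from the reply (hypothetical helper).

    gb_per_core is 2 or 4, matching the suggested core:memory ratio of 1:2 or 1:4.
    """
    if cores_per_node < 1:
        raise ValueError("cores_per_node must be at least 1")
    if gb_per_core not in (2, 4):
        raise ValueError("the reply suggests a core:memory ratio of 1:2 or 1:4")

    # "generally the core count / 4", but never fewer than one Worker instance
    worker_instances = max(1, cores_per_node // 4)
    # Assumption: cores are divided evenly among the Worker instances
    cores_per_worker = cores_per_node // worker_instances
    memory_per_worker_gb = cores_per_worker * gb_per_core

    return {
        "worker_instances": worker_instances,
        "cores_per_worker": cores_per_worker,
        "memory_per_worker_gb": memory_per_worker_gb,
    }


def render_spark_env(sizing: dict) -> str:
    """Format the suggestion as lines that could go into spark-env.sh."""
    return "\n".join([
        f"export SPARK_WORKER_INSTANCES={sizing['worker_instances']}",
        f"export SPARK_WORKER_CORES={sizing['cores_per_worker']}",
        f"export SPARK_WORKER_MEMORY={sizing['memory_per_worker_gb']}g",
    ])


if __name__ == "__main__":
    # Example: a 16-core node with a 1:2 core:memory ratio
    print(render_spark_env(suggest_sizing(16, gb_per_core=2)))
```

Treat the numbers as a starting point only. The total of `worker_instances * memory_per_worker_gb` still has to fit within the node's physical memory, with room left for the OS and the driver.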