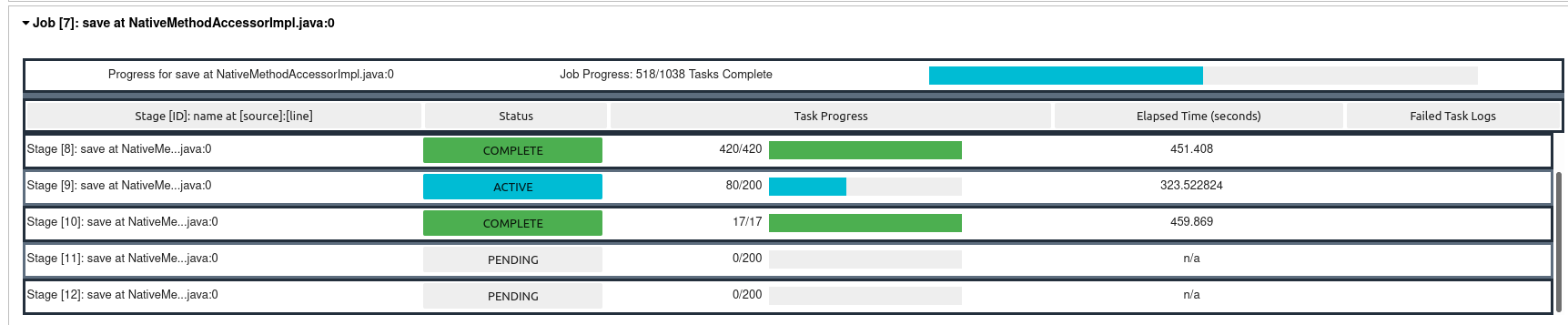

I recently moved from AWS to GCP, and of course the two have some similarities and some differences. One difference is that an AWS EMR notebook shows Spark jobs, stages, and tasks, along with their progress, as the code runs.

However, in a GCP notebook, all I see is the stage number and its progress.

Is there a way to show it the way it's shown on AWS, or is it platform-bound?

CodePudding user response:

While there is no out-of-the-box solution, you can use the monitoring dashboard as described in this documentation.

There's also a similar question answered here.

If you want a real-time progress bar, you can file a feature request on the Public Issue Tracker.
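In the meantime, a rough workaround is to poll Spark's own status tracker from the notebook. The sketch below is only an illustration, not something GCP provides: `format_progress` and `watch_progress` are hypothetical helper names, and it assumes a PySpark notebook where `sc.statusTracker()` is available and its `getActiveJobsIds`, `getJobInfo`, and `getStageInfo` calls return the usual job and stage tuples.

```python
import threading


def format_progress(tracker):
    """Return one text line per known stage of every active job."""
    lines = []
    for job_id in sorted(tracker.getActiveJobsIds()):
        job = tracker.getJobInfo(job_id)
        if job is None:  # info may no longer be available for this job
            continue
        for stage_id in sorted(job.stageIds):
            stage = tracker.getStageInfo(stage_id)
            if stage is None:  # stage not known to the tracker (yet)
                continue
            lines.append(
                f"job {job_id} | stage {stage_id} | "
                f"{stage.numCompletedTasks}/{stage.numTasks} tasks done, "
                f"{stage.numActiveTasks} running, "
                f"{stage.numFailedTasks} failed"
            )
    return lines


def watch_progress(sc, interval=2.0):
    """Print progress every `interval` seconds in a background thread.

    Returns a threading.Event; call .set() on it to stop the polling.
    """
    stop = threading.Event()

    def loop():
        tracker = sc.statusTracker()
        while not stop.is_set():
            for line in format_progress(tracker):
                print(line)
            stop.wait(interval)  # sleeps, but wakes early when stopped

    threading.Thread(target=loop, daemon=True).start()
    return stop
```

In a notebook you would call `stop = watch_progress(spark.sparkContext)` before running the action and `stop.set()` afterwards. Where the printed lines end up depends on the notebook frontend, so treat this as a plain-text approximation of the EMR widget rather than a replacement for it.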