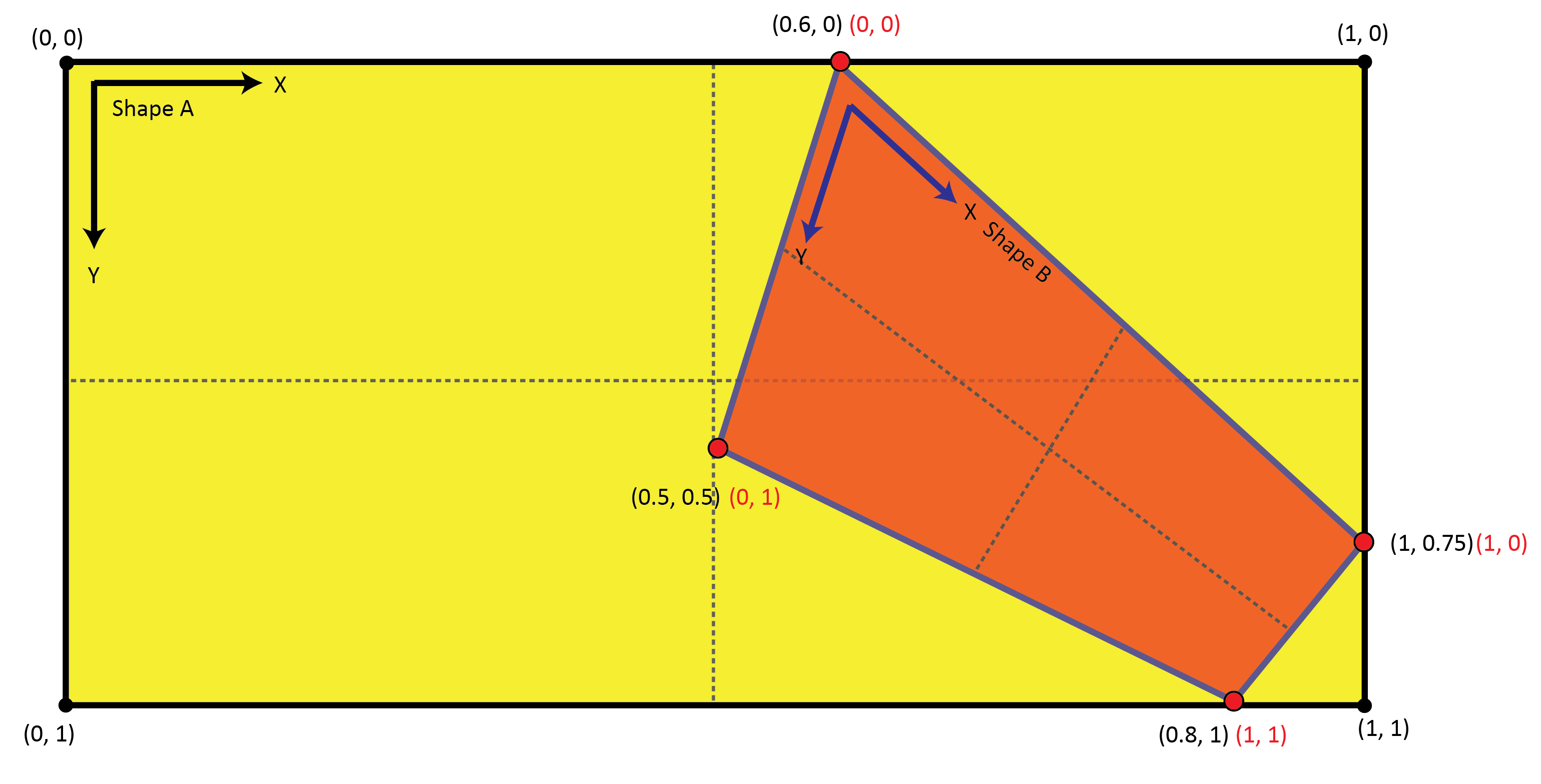

I have two shapes or coordinate systems, and I want to be able to transform points from one system onto the other.

I have found that if the shapes are quadrilaterals and I have 4 pairs of corresponding points, then I can calculate a transformation matrix and use that matrix to map any point in Shape B onto its corresponding coordinates in Shape A.

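Here is the working Python code to make this calculation:

```python
import numpy as np
import cv2

# Corners of Shape A, in the same order as the matching corners of Shape B.
shape_a_points = np.array([
    [0.6, 0],
    [1, 0.75],
    [0.8, 1],
    [0.5, 0.6]
], dtype="float32")

# Corners of Shape B (the unit square).
shape_b_points = np.array([
    [0, 0],
    [1, 0],
    [1, 1],
    [0, 1],
], dtype="float32")

test_points = [0.5, 0.5]

# 3x3 perspective matrix mapping Shape B coordinates onto Shape A coordinates.
matrix = cv2.getPerspectiveTransform(shape_b_points, shape_a_points)
print(matrix)

# perspectiveTransform expects an array of shape (1, N, 2).
result = cv2.perspectiveTransform(np.array([[test_points]], dtype="float32"), matrix)
print(result)
```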

If you run this code, you'll see that the test point (0.5, 0.5) on Shape B (right in the middle) comes out as (0.73, 0.67) on Shape A, which visually looks correct.
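One way to sanity-check that result without eyeballing the picture: a perspective transformation maps straight lines to straight lines, so the centre of the square (where its diagonals cross) has to land where the diagonals of Shape A cross. A small NumPy sketch of that check (the helper `line_intersection` is hypothetical, written just for this illustration):

```python
import numpy as np


def line_intersection(p1, p2, p3, p4):
    """Intersection of the line through p1, p2 with the line through p3, p4."""
    p1, p2, p3, p4 = (np.asarray(p, dtype=float) for p in (p1, p2, p3, p4))
    d1, d2 = p2 - p1, p4 - p3
    # Solve p1 + t*d1 == p3 + s*d2 for the line parameters (t, s).
    t, s = np.linalg.solve(np.column_stack([d1, -d2]), p3 - p1)
    return p1 + t * d1


shape_a_points = np.array([[0.6, 0], [1, 0.75], [0.8, 1], [0.5, 0.6]])

# Diagonals of Shape A: corner 0 -> corner 2 and corner 1 -> corner 3.
centre = line_intersection(shape_a_points[0], shape_a_points[2],
                           shape_a_points[1], shape_a_points[3])
print(centre)  # ≈ [0.734, 0.670]
```

This agrees with the (0.73, 0.67) printed by `cv2.perspectiveTransform`.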

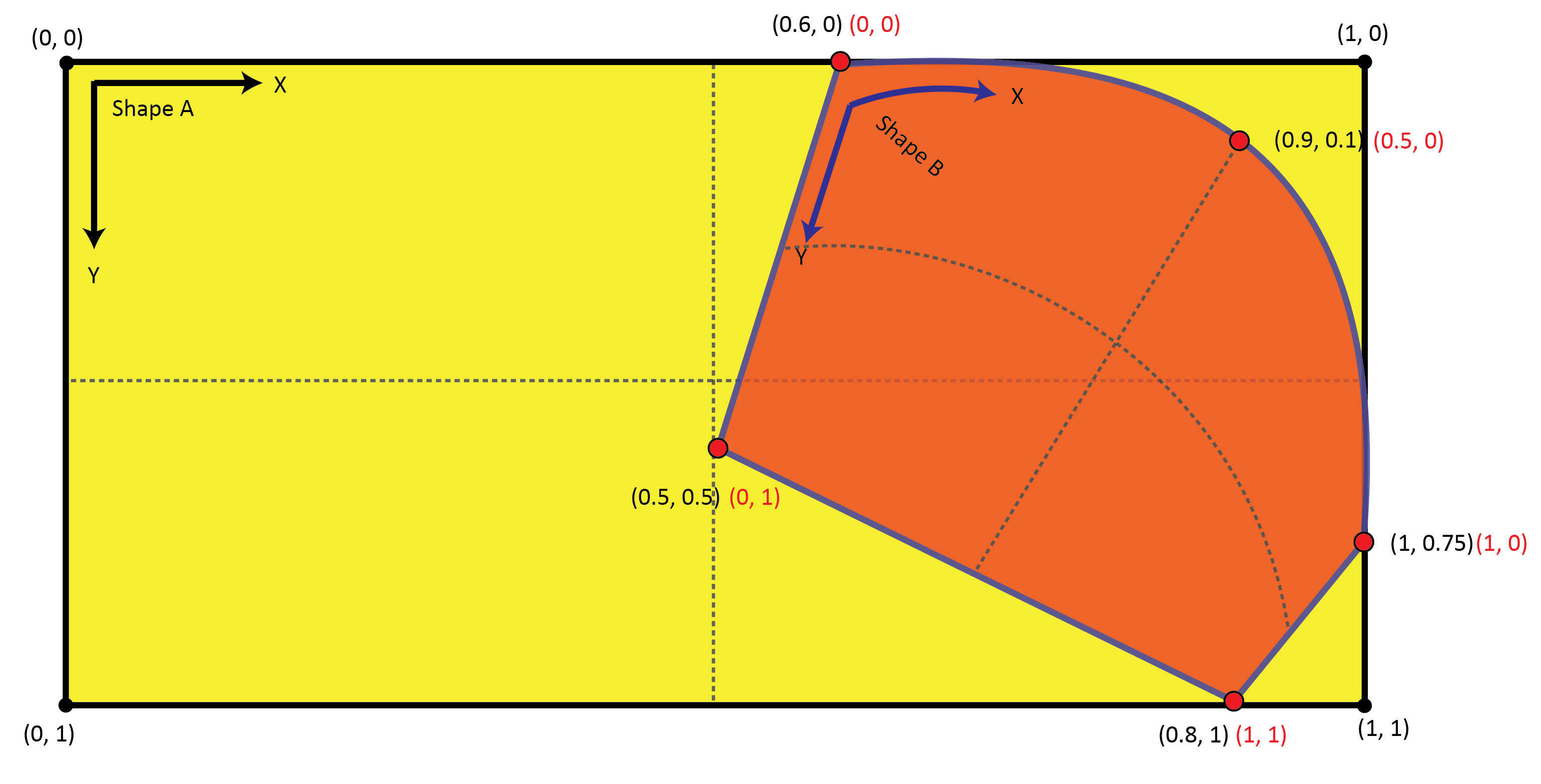

However, what can I do if the shapes are more complex, for example with 4+N vertices and 4+N pairs of corresponding points? Or, even more complex, what if the shapes contain curves?

Answer:

If the two shapes are related by a perspective transformation, then any four points will lead to the same transformation, at least as long as no three of them are collinear. In theory, you could pick any four such points and the rest should just work.

In practice, numerical considerations might come into play. If you pick four points very close to one another, then small errors in their positions would lead to much larger errors further away from those points. You could probably do some sophisticated analysis involving error intervals, but as a rule of thumb I'd aim for large distances between any two points, on both the input and the output side of the transformation.
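One rough way to see this numerically is sketched below. It assumes the usual formulation in which the perspective matrix's bottom-right entry is fixed to 1, and it uses the condition number of the resulting 8x8 linear system as a stand-in for how strongly position errors get amplified. The same four correspondences are used once spread out and once squeezed into a tiny patch; `dlt_matrix` is a hypothetical helper name.

```python
import numpy as np


def dlt_matrix(src, dst):
    """8x8 system whose solution gives the perspective matrix entries (H[2,2] fixed to 1)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        rows.append([0, 0, 0, x, y, 1, -x * v, -y * v])
    return np.array(rows, dtype=float)


shape_b = np.array([[0, 0], [1, 0], [1, 1], [0, 1]], dtype=float)
shape_a = np.array([[0.6, 0], [1, 0.75], [0.8, 1], [0.5, 0.6]], dtype=float)

# Well-separated points: the corners of the two shapes.
spread = np.linalg.cond(dlt_matrix(shape_b, shape_a))
# The same correspondences shrunk into a 0.01-wide patch around (0.5, 0.5).
clustered = np.linalg.cond(dlt_matrix(0.5 + 0.01 * shape_b, 0.5 + 0.01 * shape_a))
print(f"spread: {spread:.1f}, clustered: {clustered:.1e}")
```

A much larger condition number for the clustered set means small input errors can turn into much larger errors in the fitted matrix, which is the effect described above.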

An answer of mine on Mathematics Stack Exchange explains a bit of the computation that goes into defining a perspective transformation from four pairs of points. It might be useful for understanding where that number 4 comes from.
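The counting behind the number 4, in short: the 3x3 matrix has 9 entries but is only defined up to a common scale factor, which leaves 8 unknowns, and each point pair contributes 2 equations (one for x, one for y). Below is a NumPy sketch of that 8x8 solve. It assumes the bottom-right entry is normalised to 1 (a common convention), and the function names are hypothetical, not OpenCV API.

```python
import numpy as np


def homography_from_four_pairs(src, dst):
    """Solve for the 3x3 perspective matrix H (with H[2,2] = 1) mapping src[i] -> dst[i]."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != (4, 2) or dst.shape != (4, 2):
        raise ValueError("need exactly four (x, y) pairs on each side")
    A = np.zeros((8, 8))
    b = np.zeros(8)
    for i, ((x, y), (u, v)) in enumerate(zip(src, dst)):
        # From u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), and likewise for v.
        A[2 * i] = [x, y, 1, 0, 0, 0, -x * u, -y * u]
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -x * v, -y * v]
        b[2 * i], b[2 * i + 1] = u, v
    # Three collinear points make this system singular or badly conditioned.
    h = np.linalg.solve(A, b)
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, point):
    """Map (x, y) through H using homogeneous coordinates, then divide by w."""
    u, v, w = H @ np.array([point[0], point[1], 1.0])
    return np.array([u / w, v / w])


shape_b_points = np.array([[0, 0], [1, 0], [1, 1], [0, 1]])
shape_a_points = np.array([[0.6, 0], [1, 0.75], [0.8, 1], [0.5, 0.6]])

H = homography_from_four_pairs(shape_b_points, shape_a_points)
print(apply_homography(H, (0.5, 0.5)))  # ≈ [0.734, 0.670]
```

With the question's points this reproduces the (0.73, 0.67) result; in practice `cv2.getPerspectiveTransform` does this solve for you.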

If you have more than 4 pairs of points, and defining the transformation using any four of them does not correctly translate the rest, then you are likely in one of two other use cases.

Either you are indeed looking for a perspective transformation but have poor input data. You might have positions from feature detection, and they might be imprecise. Some features might even be matched up incorrectly. In this case you would be looking for the transformation that best describes your data with small errors. Your question doesn't sound like this is your use case, so I won't go into detail.
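For that noisy case, a common approach is a least-squares fit over all N ≥ 4 pairs; OpenCV offers `cv2.findHomography` for this, optionally with `cv2.RANSAC` to reject mismatched features. The sketch below only shows the plain least-squares idea in NumPy. It minimises the algebraic error of the same linear equations rather than the geometric reprojection error, the helper names are hypothetical, and the "true" matrix and noise level are made-up values for illustration.

```python
import numpy as np


def fit_homography_lstsq(src, dst):
    """Least-squares 3x3 perspective matrix (H[2,2] = 1) from N >= 4 point pairs."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(np.asarray(src, float), np.asarray(dst, float)):
        rows.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        rows.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        rhs.extend([u, v])
    h, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, pts):
    """Map an (N, 2) array of points through H."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


# Synthetic data: a known perspective matrix plus small measurement noise.
H_true = np.array([[1.0, 0.2, 0.1],
                   [0.1, 0.9, 0.2],
                   [0.05, 0.1, 1.0]])
grid = np.array([[x, y] for x in np.linspace(0, 1, 4) for y in np.linspace(0, 1, 4)])
rng = np.random.default_rng(0)
observed = apply_homography(H_true, grid) + rng.normal(scale=1e-5, size=grid.shape)

H_fit = fit_homography_lstsq(grid, observed)
residuals = np.linalg.norm(apply_homography(H_fit, grid) - observed, axis=1)
print(np.round(H_fit, 3), residuals.max())
```

Large individual residuals after such a fit would hint at mismatched pairs, which is where a robust method such as RANSAC helps.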

Or you have a transformation that is not a perspective transformation. In particular, anything that turns a straight line into a curve, or vice versa, is no longer a perspective transformation. You might be looking for some other class of transformations, or for something like a piecewise projective transformation. Without knowing more about your use case, it's very hard to suggest a good class of transformations for this.
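As a rough illustration of the piecewise idea, here is a sketch that swaps in the simpler piecewise *affine* variant: split Shape B into triangles, find the triangle that contains the point, and reuse its barycentric coordinates in the matching triangle of Shape A. The triangle list is chosen by hand here (an assumption; a triangulation routine could generate it), and the helper name is hypothetical.

```python
import numpy as np


def piecewise_affine_map(point, pts_b, pts_a, triangles, eps=1e-9):
    """Map a point from Shape B to Shape A, one affine map per triangle."""
    p = np.asarray(point, dtype=float)
    pts_b = np.asarray(pts_b, dtype=float)
    pts_a = np.asarray(pts_a, dtype=float)
    for i, j, k in triangles:
        b0, b1, b2 = pts_b[i], pts_b[j], pts_b[k]
        # Barycentric coordinates: p = b0 + l1*(b1 - b0) + l2*(b2 - b0).
        l1, l2 = np.linalg.solve(np.column_stack([b1 - b0, b2 - b0]), p - b0)
        l0 = 1.0 - l1 - l2
        if min(l0, l1, l2) >= -eps:  # inside (or on the edge of) this triangle
            return l0 * pts_a[i] + l1 * pts_a[j] + l2 * pts_a[k]
    raise ValueError("point lies outside every triangle")


shape_b = [[0, 0], [1, 0], [1, 1], [0, 1]]
shape_a = [[0.6, 0], [1, 0.75], [0.8, 1], [0.5, 0.6]]
triangles = [(0, 1, 2), (0, 2, 3)]  # the square split along its 0-2 diagonal

print(piecewise_affine_map((0.5, 0.5), shape_b, shape_a, triangles))  # [0.7 0.5]
```

Note that it maps the centre to (0.7, 0.5) rather than the perspective result (0.73, 0.67): each triangle is mapped affinely, so a straight line crossing a triangle boundary can get a kink there.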

Answer:

Thanks @christoph-rackwitz for pointing me in the right direction.

I have had very good results for these transformations using OpenCV's ThinPlateSplineShapeTransformer.

Here is my example script. Note that I have 7 pairs of points. `matches` is just a list of 7 `cv2.DMatch` objects telling the script that point #1 from Shape A matches point #1 from Shape B, and so on.

```python
import numpy as np
import cv2

number_of_points = 7

# Reshaped to (1, 7, 2): one row of seven (x, y) points.
shape_a_points = np.array([
    [0.6, 0],
    [1, 0.75],
    [0.8, 1],
    [0.5, 0.6],
    [0.75, 0],
    [1, 0],
    [1, 0.25]
], dtype="float32").reshape((-1, number_of_points, 2))

shape_b_points = np.array([
    [0, 0],
    [1, 0],
    [1, 1],
    [0, 1],
    [0.25, 0],
    [0.5, 0],
    [0.75, 0]
], dtype="float32").reshape((-1, number_of_points, 2))

test_points = [0.5, 0.5]

# Point i in Shape B corresponds to point i in Shape A.
matches = [cv2.DMatch(i, i, 0) for i in range(number_of_points)]

tps = cv2.createThinPlateSplineShapeTransformer()
tps.estimateTransformation(shape_b_points, shape_a_points, matches)

# applyTransformation returns a (retval, output) pair; M[1] holds the transformed point.
M = tps.applyTransformation(np.array([[test_points]], dtype="float32"))
print(M[1])
```

I do not know why the arrays need to be reshaped to shape (1, N, 2); "you just do", or it will not work.

I have also put it into a simple class if anyone wants to use it:

```python
import cv2
import numpy as np


class transform:
    """Thin-plate-spline mapping from points in Shape B to points in Shape A."""

    def __init__(self, points_a, points_b):
        assert len(points_a) == len(points_b), "Number of points in set A and set B should be same count"
        n = len(points_a)
        # Point i in set A corresponds to point i in set B.
        matches = [cv2.DMatch(i, i, 0) for i in range(n)]
        self.tps = cv2.createThinPlateSplineShapeTransformer()
        # Both point sets are passed as float32 arrays of shape (1, n, 2).
        self.tps.estimateTransformation(np.array(points_b, dtype="float32").reshape((-1, n, 2)),
                                        np.array(points_a, dtype="float32").reshape((-1, n, 2)),
                                        matches)

    def transformPoint(self, point):
        # applyTransformation returns (retval, output); output has shape (1, 1, 2).
        result = self.tps.applyTransformation(np.array([[point]], dtype="float32"))
        return result[1][0][0]
```
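For anyone curious about what a thin-plate spline computes, here is a small NumPy-only sketch of textbook TPS interpolation. It fits a smooth mapping that passes exactly through every control-point pair, built from an affine part plus radial-basis terms with kernel U(r) = r² log r². This is not OpenCV's implementation (which, for example, also takes a regularisation parameter), so its output is not guaranteed to match `ThinPlateSplineShapeTransformer` number for number; the function names are hypothetical.

```python
import numpy as np


def _tps_kernel(r2):
    """U(r) = r^2 log(r^2), written in terms of squared distance, with U(0) = 0."""
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(r2 > 0, r2 * np.log(r2), 0.0)


def fit_tps(src, dst):
    """Solve for radial weights w (n x 2) and affine part a (3 x 2) so that src maps onto dst."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = len(src)
    K = _tps_kernel(np.sum((src[:, None, :] - src[None, :, :]) ** 2, axis=-1))
    P = np.hstack([np.ones((n, 1)), src])  # affine basis [1, x, y]
    L = np.zeros((n + 3, n + 3))
    L[:n, :n], L[:n, n:], L[n:, :n] = K, P, P.T
    rhs = np.zeros((n + 3, 2))
    rhs[:n] = dst
    params = np.linalg.solve(L, rhs)
    return src, params[:n], params[n:]


def apply_tps(model, pts):
    """Evaluate the fitted spline at an (m, 2) array of points."""
    src, w, a = model
    pts = np.atleast_2d(np.asarray(pts, dtype=float))
    U = _tps_kernel(np.sum((pts[:, None, :] - src[None, :, :]) ** 2, axis=-1))
    return U @ w + np.hstack([np.ones((len(pts), 1)), pts]) @ a


# The seven point pairs from the script above, mapping Shape B onto Shape A.
shape_a_points = np.array([[0.6, 0], [1, 0.75], [0.8, 1], [0.5, 0.6],
                           [0.75, 0], [1, 0], [1, 0.25]])
shape_b_points = np.array([[0, 0], [1, 0], [1, 1], [0, 1],
                           [0.25, 0], [0.5, 0], [0.75, 0]])

model = fit_tps(shape_b_points, shape_a_points)
print(apply_tps(model, [[0.5, 0.5]]))
```

Because the spline interpolates the control points exactly, every Shape B point lands on its Shape A partner; between them the bending-energy term keeps the mapping smooth, which is what lets it follow curved correspondences that a single perspective matrix cannot.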