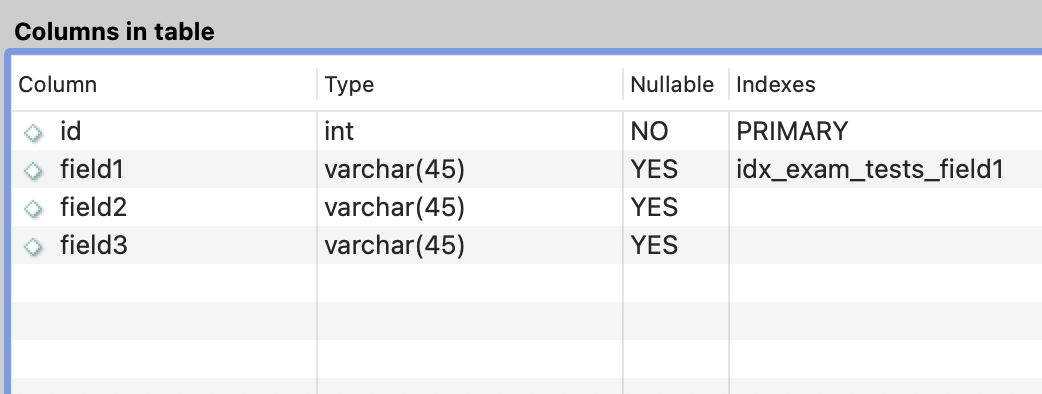

I have a table with the following structure:

The table has around 25000 rows.

Since I have an index on the column, field1, I see fewer scanned rows while running the explain query. My question, however, is after using limit (without an offset), why would it scan every matched row in the case of filtering with an indexed column instead of just the limit (in the examples below, which is 10)?

Here are the queries I ran and their corresponding results:

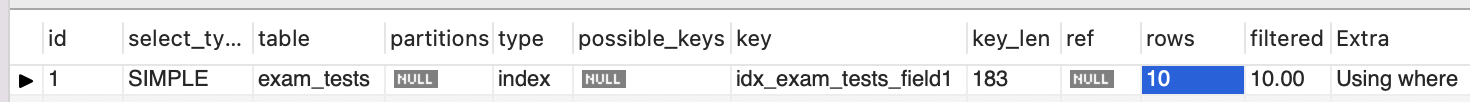

- Query 3:

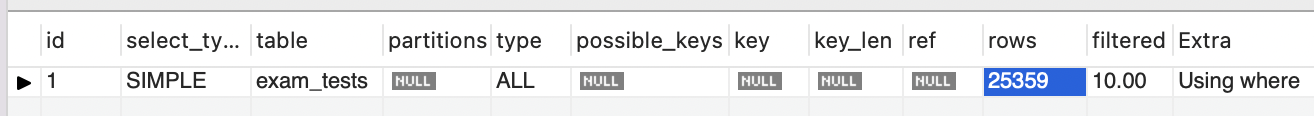

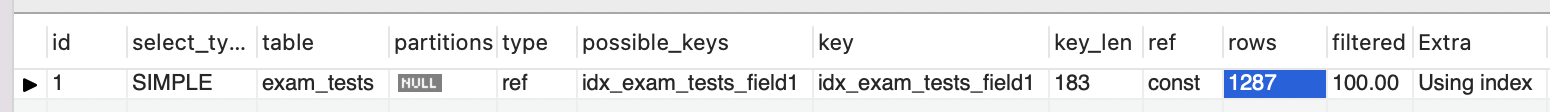

explain SELECT id, field1 from exam_tests where field2 = 'val9' order by field1 limit 10; - Result:

- Query 4:

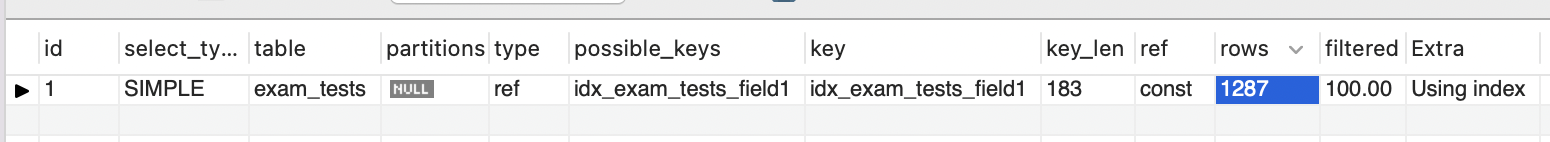

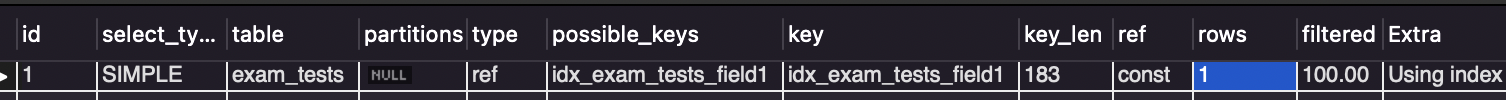

explain SELECT id, field1 from exam_tests where field1 = 'val9' order by field1 limit 10; - Result:

Here are my questions:

- On Query1, Even with an indexed column (on which the filtering happens) and a limit applied, why does the optimizer consider scanning every matched result? There are indeed 1287 rows with field1 = 'val9'. In addition, I also noticed that, if I try to filter field1 to a non-existent value, the explain returns rows=1! eg:

explain SELECT id, field1 from exam_tests where field1 = 'val11' order by field1 limit 10;Here, there's no row with value,val11forfield1column. And in this case, I get the following result:

- On Query3 Just because we add an order by indexed column, how would the query optimizer be able to get the required results without scanning the entire table (the filtered column,

field2, doesn't have an index)?

CodePudding user response:

The Rows in EXPLAIN

- is an estimate, often a very crude estimate; and

- usually does not take into account

LIMIT.

Query 1 -- The query was performed using just the index's BTree. (clue: "Using index"). It probably touched only 10 rows. (No clue available in Explain)

Query 2 -- Full table scan (Clues: All and Nulls). It read all the rows, but may have delivered only a fraction of the rows (Clue: Filter 10.00% -- again a crude estimate)

Query 3 -- Hmmm... It picked the wrong [my opinion] way to perform the query; it decided to use the index without realizing that it might have to go through the entire table to find 10 rows. INDEX(field2, field1), in this order would be optimal.

Query 4 -- (Perhaps you typed it wrong? The ORDER BY is unnecessary since the output has all rows with the same value.)

Other helpers:

If you want to know exactly how many rows were touched, do this:

FLUSH STATUS; SELECT ... ; SHOW SESSION STATUS LIKE 'Handler%';EXPLAIN FORMAT=JSON SELECT ...The "Optimizer trace".

The slowlog records "rows examined".

Index Cookbook (It includes the 'Handler' and Optimizer Trace tips.)

Perhaps the following addresses your final question. If there are no matching rows in the table, there still has to be a probe to discover that fact. That increases some actual row counts by 1, including 0 to 1. Similarly, if ther are only 6 rows matching the WHERE (and less than the LIMIT), it will 'examine' 7 rows to realize it is time to stop.