I'm trying to get my output to look like the following in json format.

[{"title": "Test", "kategorie": "abc", "url": "www.url.com"},

{"title": "Test", "kategorie": "xyz", "url": "www.url.com"},

{"title": "Test", "kategorie": "sca", "url": "www.url.com"}]

but after using Items I see some of the values but not all of them are stored in a list:

[{"title": ["Test"], "kategorie": ["abc"], "url": "www.url.com"},

{"title": ["Test"], "kategorie": ["xyz"], "url": "www.url.com"},

{"title": ["Test"], "kategorie": ["sca"], "url": "www.url.com"}]

This is my items.py

class MyItem(scrapy.Item):

title = scrapy.Field()

kategorie = scrapy.Field()

url = scrapy.Field()

This is my pipelines.py which is enabled in settings.py.

class MyPipeline(object):

file = None

def open_spider(self, spider):

self.file = open('item.json', 'wb')

self.exporter = JsonItemExporter(self.file)

self.exporter.start_exporting()

def close_spider(self, spider):

self.exporter.finish_exporting()

self.file.close()

def process_item(self, item, spider):

self.exporter.export_item(item)

return item

This is the parse method in my spider.py. All xpath-methods return a list of scraped values. After it they are put together and iteratively create a dictionary that will end up in the exported file as json.

def parse(self, response):

item = MyItem()

title = response.xpath('//h5/text()').getall()

kategorie = response.xpath('//span[@]//text()').getall()

url = response.xpath('//div[@]//a/@href').getall()

data = zip(title, kategorie, url)

for i in data:

item['title'] = i[0],

item['kategorie'] = i[1],

item['url'] = i[2]

yield item

This is how I start the crawling process:

scrapy crawl spider_name

If I don't use Items and Pipelines it works fine using:

scrapy crawl spider_name -o item.json

I am wondering why some of the values are stored in a list and some other are not. If someone has an approach it would be really great.

CodePudding user response:

Using scrapy FEEDS and Item you can directly yield the item objects from the parse method without the need for pipelines or ziping the lists first. See below sample

import scrapy

class MyItem(scrapy.Item):

title = scrapy.Field()

kategorie = scrapy.Field()

url = scrapy.Field()

class SampleSpider(scrapy.Spider):

name = 'sample'

start_urls = ['https://brownfield24.com/grundstuecke']

custom_settings = {

"FEEDS": {

"items.json":{

"format": "json"

}

}

}

def parse(self, response):

for property in response.xpath("//*[contains(@class,'uk-link-reset')]"):

item = MyItem()

item['title'] = property.xpath(".//h5/text()").get()

item['url'] = property.xpath(".//a/@href").get()

item['kategorie'] = property.xpath(".//div[@class='uk-card-body']/p/span/text()").get()

yield item

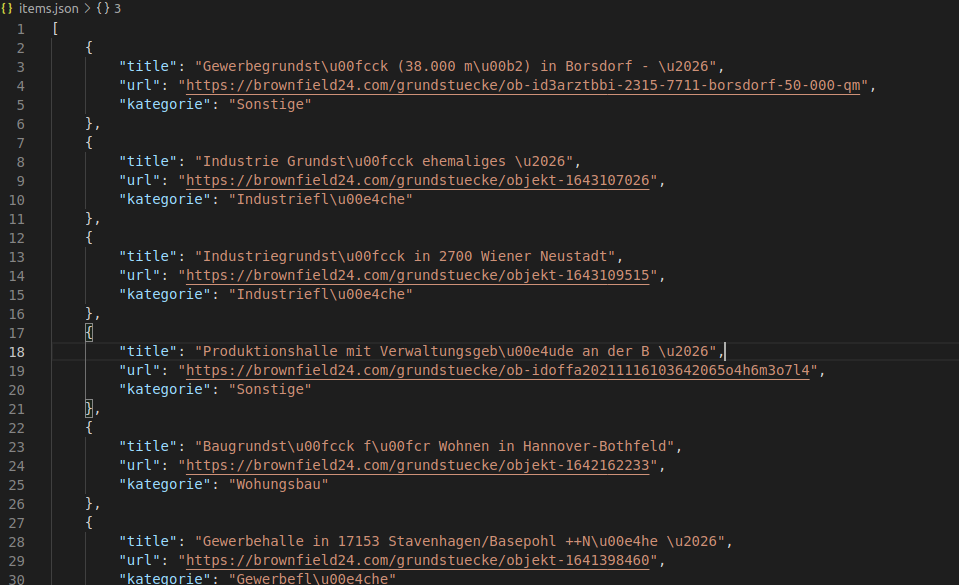

Running the spider using scrapy crawl sample will obtain below output.