1. Dataset class:

```python
import os

import numpy as np
import torch
import torch.utils.data as data
import torchvision.transforms as transforms
from PIL import Image

# Converts an HxWxC image array to a CxHxW float tensor
data_transform = transforms.Compose([
    transforms.ToTensor()
])

IMAGE_H = 200
IMAGE_W = 200


class DogCatDataset(data.Dataset):
    def __init__(self, mode, dir):
        self.mode = mode
        self.list_img = []
        self.list_label = []
        self.data_size = 0
        self.transform = data_transform

        if self.mode == 'train':
            dir = dir + '/train/'
            for file in os.listdir(dir):
                self.list_img.append(dir + file)
                self.data_size += 1
                # Label comes from the file-name prefix: 'cat...' -> 0, anything else -> 1
                name = file.split(sep='.')
                if name[0] == 'cat':
                    self.list_label.append(0)
                else:
                    self.list_label.append(1)
        elif self.mode == 'test':
            dir = dir + '/test/'
            for file in os.listdir(dir):
                self.list_img.append(dir + file)
                self.data_size += 1
                self.list_label.append(2)  # placeholder label for the test set
        else:
            print('Undefined dataset')

    def __getitem__(self, item):
        if self.mode == 'train':
            img = Image.open(self.list_img[item])  # open the picture
            img = img.resize((IMAGE_W, IMAGE_H))  # resize to a uniform size; PIL takes (width, height)
            img = np.array(img)[:, :, :3]  # convert to a numpy array, keep the RGB channels only
            label = self.list_label[item]  # label matching this image
            return self.transform(img), torch.LongTensor([label])
        elif self.mode == 'test':
            img = Image.open(self.list_img[item])
            img = img.resize((IMAGE_W, IMAGE_H))
            img = np.array(img)[:, :, :3]
            return self.transform(img)
        else:
            print('None')

    def __len__(self):
        return self.data_size
```
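The training labels depend only on the file-name prefix. Below is a minimal standalone sketch of that rule. It assumes Kaggle-style names such as `cat.0.jpg`, and the helper name `label_from_filename` is hypothetical, not part of the post:

```python
def label_from_filename(file):
    """Mirror the rule in DogCatDataset.__init__: 'cat.*' -> 0, anything else -> 1."""
    name = file.split(sep='.')
    return 0 if name[0] == 'cat' else 1


for f in ['cat.0.jpg', 'dog.12.jpg', 'Cat.3.jpg']:
    print(f, label_from_filename(f))
```

The check is case-sensitive. Any file whose name does not start with `cat`, including capitalized names or stray non-image files, is labelled as a dog.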

Neural network structure:

```python
import torch
import torch.nn as nn
import torch.utils.data
import torch.nn.functional as F


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(3, 16, 3, padding=1)
        self.conv2 = torch.nn.Conv2d(16, 16, 3, padding=1)
        # Two 2x2 max-pools shrink 200x200 inputs to 50x50, with 16 channels
        self.fc1 = nn.Linear(50 * 50 * 16, 128)
        self.fc2 = nn.Linear(128, 64)
        self.fc3 = nn.Linear(64, 2)

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = F.max_pool2d(x, 2)

        x = self.conv2(x)
        x = F.relu(x)
        x = F.max_pool2d(x, 2)

        x = x.view(x.size()[0], -1)  # flatten to (batch, 50*50*16)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)

        return F.softmax(x, dim=1)
        # x = F.relu(self.fc2(x))
        # x = F.relu(self.fc3(x))
        # x = F.relu(self.fc4(x))
        # x = self.fc5(x)
        # return F.softmax(x, dim=1)
```
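The `50 * 50 * 16` input size of `fc1` follows from the usual output-size formula for convolution and pooling. Here is a small sketch of that arithmetic. It assumes 200x200 inputs, as set by `IMAGE_H`/`IMAGE_W`, and the helper `out_size` is hypothetical:

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of Conv2d / MaxPool2d (dilation 1): floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


n = 200
n = out_size(n, kernel=3, padding=1)  # conv1: 3x3 kernel, padding 1 keeps 200
n = out_size(n, kernel=2, stride=2)   # max_pool2d(x, 2): 200 -> 100
n = out_size(n, kernel=3, padding=1)  # conv2 keeps 100
n = out_size(n, kernel=2, stride=2)   # second pool: 100 -> 50
channels = 16                         # output channels of conv2
print(n, n * n * channels)  # 50 40000
```

Each extra conv + 2x2 pool stage halves the side length. If more convolutional layers are stacked, the input size of `fc1` has to be recomputed the same way.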

Training script:

```python
from DogCatImageDataset import DogCatDataset as DVCD
from torch.utils.data import DataLoader as DataLoader
from TrainNet import Net
import torch
import torch.nn as nn
import torchvision.transforms as transforms
import matplotlib.pyplot as plt

dataset_dir = './data/'
model_cp = './model/'
workers = 10
batch_size = 50
lr = 0.0001
epochs = 10


def train():
    # Note: this transform is defined but never passed to the dataset
    transform_train = transforms.Compose([
        transforms.Normalize(std=(0.5, 0.5, 0.5), mean=(0.5, 0.5, 0.5))
    ])

    datafile = DVCD('train', dataset_dir)
    dataloader = DataLoader(datafile, batch_size=batch_size, shuffle=True, num_workers=workers)

    print('Dataset loaded! The length of train set is {0}'.format(len(datafile)))

    model = Net()
    optimizer = torch.optim.SGD(model.parameters(), lr=lr, momentum=0.9)
    criterion = torch.nn.CrossEntropyLoss()

    cnt = 0
    losses = []

    for i in range(epochs):
        # model.train()
        print('epochs: {0}'.format(i))
        for j, (img, label) in enumerate(dataloader):
            # Variable wrappers are unnecessary since PyTorch 0.4; tensors are used directly
            optimizer.zero_grad()
            out = model(img)
            loss = criterion(out, label.squeeze())
            loss.backward()
            optimizer.step()
            # train_loss = loss / batch_size

            if j % 10 == 0:  # logging interval of 10 is assumed; the number was lost from the post
                losses.append(loss.item())  # .item() gives a plain float that matplotlib can plot
                print('[epochs - {0} {1}/{2}] loss: {3}'.format(i, j, len(datafile), loss.item()))
                plt.clf()
                plt.plot(losses)
                plt.pause(0.01)
            # cnt += 1
            # print('frame {0}, train_loss: {1}'.format(cnt * batch_size, loss / batch_size))

    torch.save(model.state_dict(), '{0}model.pth'.format(model_cp))


if __name__ == '__main__':
    train()
```
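One thing worth checking: `nn.CrossEntropyLoss` already applies log-softmax to its input. Feeding it the output of `F.softmax` therefore applies softmax twice. With two classes the inputs then lie in [0, 1], so the correct-class probability can never exceed e/(1+e) ≈ 0.731, and the loss cannot fall below about 0.313.

The pure-Python sketch below reproduces that bound without PyTorch. The helpers `softmax` and `cross_entropy` are hypothetical stand-ins for what the library computes on one sample:

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(inputs, target):
    """Per-sample cross-entropy as nn.CrossEntropyLoss computes it: -log(softmax(inputs)[target])."""
    return -math.log(softmax(inputs)[target])


logits = [10.0, -10.0]  # a confidently correct prediction for class 0
print(cross_entropy(logits, 0))           # close to 0
print(cross_entropy(softmax(logits), 0))  # about 0.313: softmax applied twice
```

The common remedy is to have `forward` return the raw `fc3` output (the logits). `F.softmax` is then applied only at inference time, when probabilities are actually needed.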

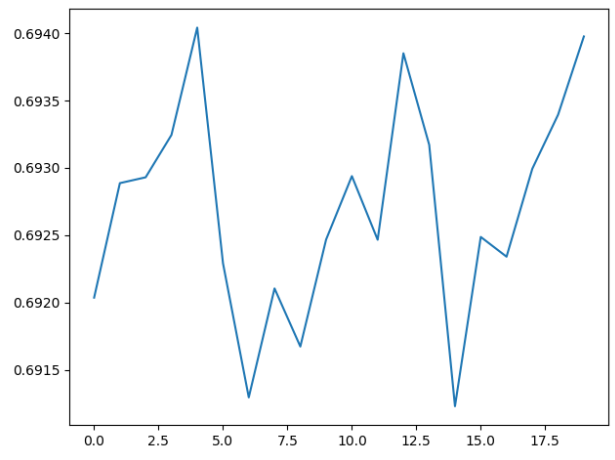

Loss curve:

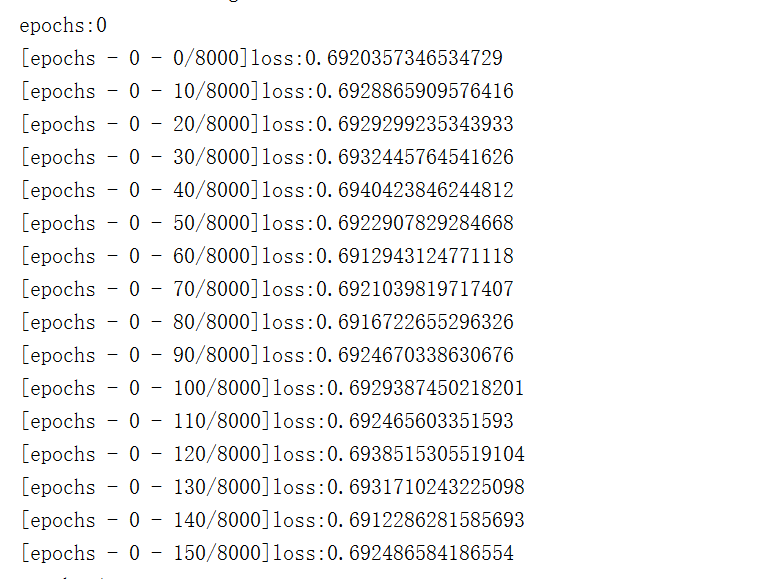

Loss log:

Could any experts please take a look?

CodePudding user response:

It feels like the hyperparameters need tuning, and training hasn't really gotten going yet. Wait and watch a bit longer... Or switch from SGD to Adam and see how that works.

Also: you can stack more convolutional layers; two is a bit too few.
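In the training script, switching to Adam is a one-line change: `optimizer = torch.optim.Adam(model.parameters(), lr=lr)`. To show what Adam does differently from SGD with momentum, here is a scalar sketch of its update rule using the default β1 = 0.9, β2 = 0.999, ε = 1e-8. The toy objective f(x) = (x - 3)², the learning rate, and the step count are illustrative assumptions:

```python
import math


def adam_minimize(grad, x, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Scalar Adam: moving averages of the gradient and its square, with bias correction."""
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # first moment: running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # second moment: running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # correct the bias toward zero in early steps
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)  # step scaled per parameter
    return x


# f(x) = (x - 3)^2 has gradient 2 * (x - 3) and its minimum at x = 3
x_min = adam_minimize(lambda x: 2 * (x - 3), x=0.0)
print(x_min)
```

Dividing by `sqrt(v_hat)` normalizes the step size per parameter. This is why Adam is often less sensitive than plain SGD to a learning rate like the post's `lr = 0.0001`.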
