I have an Azure Storage account where I upload a file. The file upload takes place in an Azure Function (Blob trigger).

Everything works as expected, except for one thing: if I trigger the Function three times, the output file ends up containing the data from all three runs appended together. I don't think I am disposing of the stream correctly.

    public void Run(
        [BlobTrigger("raw/{name}")] Stream input,
        [Blob("data/{name}", FileAccess.Write)] TextWriter output,
        string name,
        ILogger log)

I am then writing to the output as follows:

output.WriteLine(data);

I am not sure how to wrap this in a using statement.

Can anyone advise?

CodePudding user response:

Here is the code that worked for me. Each time I trigger the function, the output file is overwritten with the data from the current run rather than appended to.

    using System;
    using System.IO;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Host;
    using Microsoft.Extensions.Logging;

    namespace FunctionAppTW
    {
        public static class Function1
        {
            [FunctionName("Function1")]
            public static void Run(
                [BlobTrigger("raw/{name}", Connection = "")] Stream input,
                [Blob("data/{name}", FileAccess.Write)] TextWriter output,
                string name,
                ILogger log)
            {
                // Read the whole triggering blob as text.
                StreamReader reader = new StreamReader(input);
                string data = reader.ReadToEnd();

                // Write it to the output blob bound to data/{name}.
                output.WriteLine(data);
            }
        }
    }

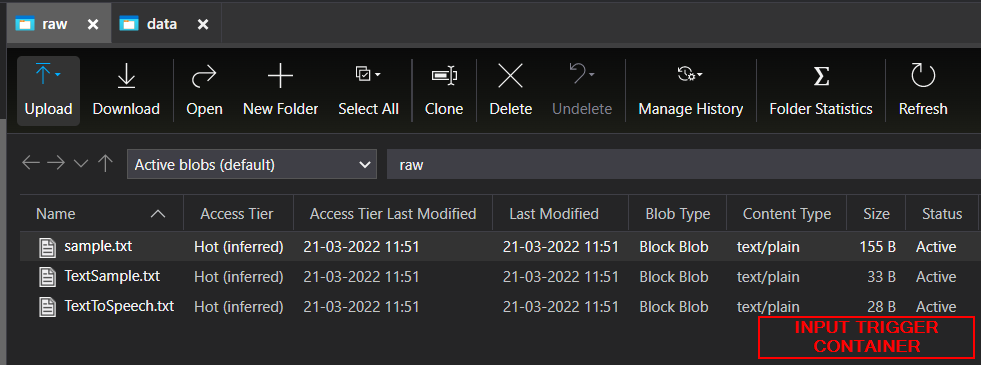

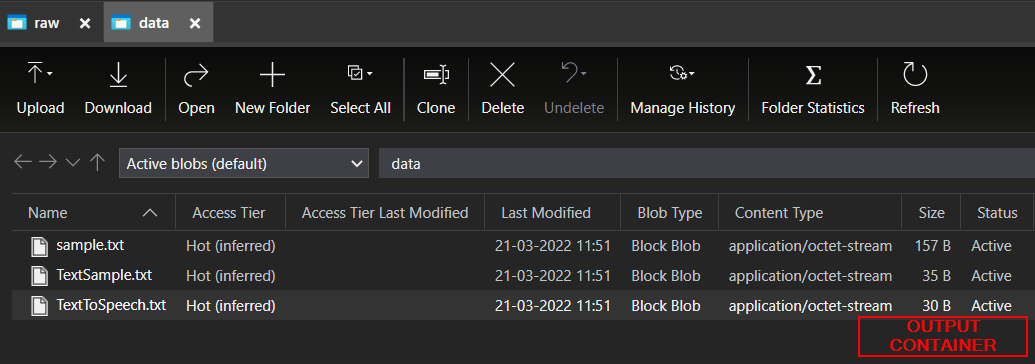

RESULT:
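On the `using` part of the question: as far as I know, a `TextWriter` handed in by the binding is created and flushed by the Functions runtime, so there is nothing in the function body to wrap. If you want explicit control, one option is to bind the output to a `Stream` and create the writer yourself. The following is only a sketch under that assumption, meant as a replacement for `Function1` rather than a second function on the same container. The names `CopyBlobSketch` and `CopyText` are made up for illustration, and I have not confirmed that this changes the appending behaviour described in the question.

```csharp
using System.IO;
using System.Text;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

namespace FunctionAppTW
{
    public static class CopyBlobSketch
    {
        [FunctionName("CopyBlobSketch")]
        public static void Run(
            [BlobTrigger("raw/{name}")] Stream input,
            [Blob("data/{name}", FileAccess.Write)] Stream output, // Stream instead of TextWriter
            string name,
            ILogger log)
        {
            CopyText(input, output);
            log.LogInformation("Copied blob {name}", name);
        }

        // Hypothetical helper, kept separate so it can be exercised with MemoryStream.
        public static void CopyText(Stream input, Stream output)
        {
            // leaveOpen: true because the runtime owns both streams; only the
            // reader and writer created here are disposed by the using blocks.
            using (var reader = new StreamReader(input, Encoding.UTF8, true, 1024, leaveOpen: true))
            using (var writer = new StreamWriter(output, new UTF8Encoding(false), 1024, leaveOpen: true))
            {
                writer.Write(reader.ReadToEnd());
            } // the writer is flushed to the output stream when it is disposed here
        }
    }
}
```

The reader and writer are local to a single invocation, so no state from an earlier run can leak into the next one through them.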

CodePudding user response:

I've run into this issue before myself. I can't recall the exact details, but I was using dependency injection in my Functions app. My implementation kept the stream alive between invocations, so it accumulated the output of previous runs when I called only output.WriteLine().

I had to close and dispose of the previous session's stream before each and every method call.
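This failure mode can be reproduced without Azure at all. The sketch below is a guess at what such code might look like, not my actual implementation: `SharedWriterService` and `PerCallWriterService` are hypothetical names, and a `StringWriter` stands in for whatever stream the injected service kept alive.

```csharp
using System;
using System.IO;

// Hypothetical singleton-style service: one writer lives as long as the service,
// so every call adds to what earlier calls already wrote.
public sealed class SharedWriterService
{
    private readonly StringWriter _writer = new StringWriter();

    public string Render(string data)
    {
        _writer.Write(data);
        return _writer.ToString();
    }
}

// Hypothetical fix: create and dispose a fresh writer on every call.
public sealed class PerCallWriterService
{
    public string Render(string data)
    {
        using (var writer = new StringWriter())
        {
            writer.Write(data);
            return writer.ToString();
        }
    }
}

public static class Program
{
    public static void Main()
    {
        var shared = new SharedWriterService();
        shared.Render("run1;");
        shared.Render("run2;");
        Console.WriteLine(shared.Render("run3;"));   // prints "run1;run2;run3;"

        var perCall = new PerCallWriterService();
        perCall.Render("run1;");
        perCall.Render("run2;");
        Console.WriteLine(perCall.Render("run3;"));  // prints "run3;"
    }
}
```

If a service like the first one is registered as a singleton, it survives across function invocations on the same host, which would explain output that grows with every trigger.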