From Azure Databricks, I would like to insert some DataFrames as tables in a SQL database. How can I connect Azure Databricks to Azure SQL Database using a service principal with Python?

I searched and found something similar:

jdbcHostname = "..."
jdbcDatabase = "..."
jdbcPort = ...
jdbcUrl = "jdbc:sqlserver://{0}:{1};database={2}".format(jdbcHostname, jdbcPort, jdbcDatabase)
connectionProperties = {
    "user" : "...",
    "password" : "...",
    "driver" : "com.microsoft.sqlserver.jdbc.SQLServerDriver"
}

But I found nothing specific to Python. How can I do it? Maybe with PySpark, like below?

hostname = "<servername>.database.windows.net"
server_name = "jdbc:sqlserver://{0}".format(hostname)
database_name = "<databasename>"
# Join the URL parts explicitly with + (the operators were missing)
url = server_name + ";" + "databaseName=" + database_name + ";"
print(url)
table_name = "<tablename>"
username = "<username>"
# Read the password from a Databricks secret scope instead of hard-coding it
password = dbutils.secrets.get(scope='', key='passwordScopeName')

CodePudding user response:

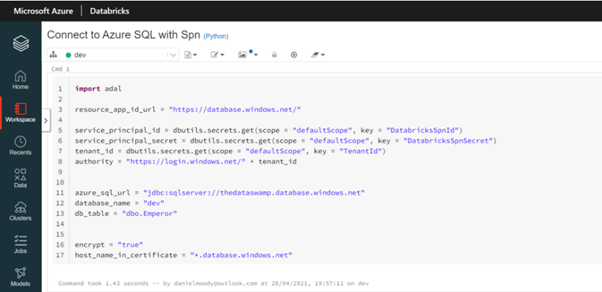

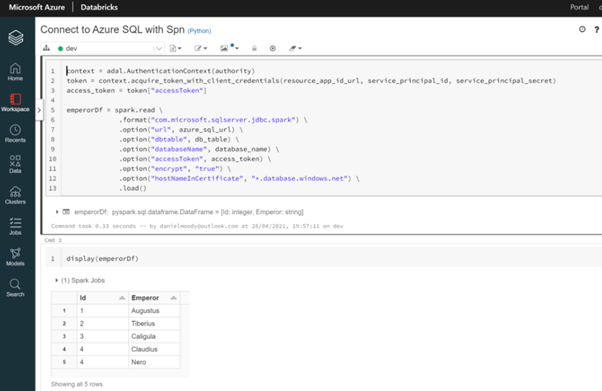

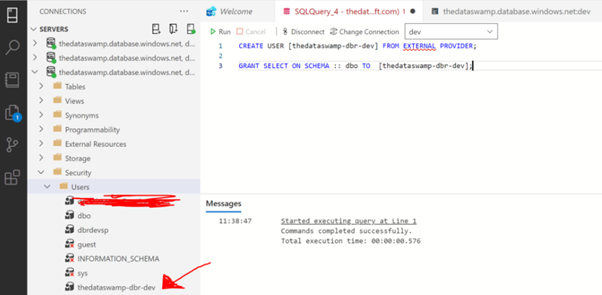

To connect to Azure SQL Database, you will need to install the

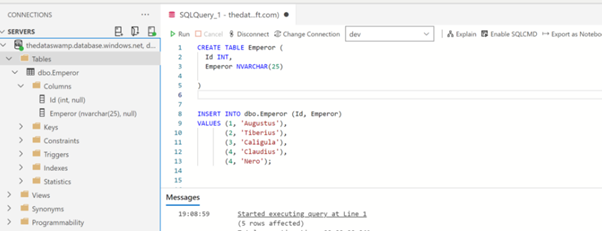

We will also create a table in the database
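As a rough illustration of how the pieces could fit together in Python, here is a minimal sketch. It is not the code from the screenshots above. It assumes the `msal` package is installed on the cluster, that the service principal's tenant ID, client ID and secret are available (for example from a secret scope), and that the SQL Server JDBC driver accepts an `accessToken` connection property passed through Spark's JDBC options. The helper names (`build_jdbc_url`, `acquire_sql_token`, `build_write_options`, `write_dataframe`) are made up for this example.

```python
def build_jdbc_url(hostname, database, port=1433):
    """Build a SQL Server JDBC URL for an Azure SQL Database."""
    return (
        f"jdbc:sqlserver://{hostname}:{port};"
        f"database={database};"
        "encrypt=true;trustServerCertificate=false;"
        "hostNameInCertificate=*.database.windows.net;loginTimeout=30;"
    )


def acquire_sql_token(tenant_id, client_id, client_secret):
    """Request an Azure AD access token for Azure SQL as the service principal."""
    # Assumption: msal is installed on the cluster (e.g. %pip install msal).
    import msal

    app = msal.ConfidentialClientApplication(
        client_id,
        authority=f"https://login.microsoftonline.com/{tenant_id}",
        client_credential=client_secret,
    )
    result = app.acquire_token_for_client(
        scopes=["https://database.windows.net/.default"]
    )
    if "access_token" not in result:
        raise RuntimeError(result.get("error_description", "token request failed"))
    return result["access_token"]


def build_write_options(url, table_name, access_token):
    """Collect JDBC options; the token replaces the user/password pair."""
    return {
        "url": url,
        "dbtable": table_name,
        "accessToken": access_token,
        "driver": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
    }


def write_dataframe(df, options, mode="append"):
    """Write a Spark DataFrame to the target table; Spark creates it if missing."""
    df.write.format("jdbc").options(**options).mode(mode).save()


# Hypothetical usage inside a Databricks notebook (placeholders, not real names):
# token = acquire_sql_token(tenant_id, client_id, client_secret)
# url = build_jdbc_url("<servername>.database.windows.net", "<databasename>")
# write_dataframe(df, build_write_options(url, "<tablename>", token))
```

For this to work, the service principal also has to exist as a user in the database with enough permissions to create and write tables; that step happens on the Azure SQL side and is not covered by the sketch.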

Azure SQL Snippet:

Reference:

https://www.thedataswamp.com/blog/databricks-connect-to-azure-sql-with-service-principal

https://docs.microsoft.com/en-us/sql/connect/spark/connector?view=sql-server-ver15