My development team is migrating from GCE to Cloud Run (GCR). We have successfully deployed the Cloud Run service and set up CI/CD with GitHub Actions, but we ran into an issue: the service cannot handle more than 100 concurrent requests. The app is built on PHP/CodeIgniter and served by the Apache2 web server, with SQL Server as our database; the SQL Server drivers are already installed in our Dockerfile:

FROM php:7.4.22-apache

USER root

RUN apt-get update && apt-get upgrade -y

RUN apt-get update && apt-get install -y gnupg2

RUN apt-get install -y libcurl4-openssl-dev

RUN apt-get install -y zlib1g-dev

RUN apt-get install libpng-dev -y

RUN docker-php-ext-install curl

RUN docker-php-ext-install gd

RUN curl https://packages.microsoft.com/keys/microsoft.asc | apt-key add -

RUN curl https://packages.microsoft.com/config/debian/10/prod.list > /etc/apt/sources.list.d/mssql-release.list

RUN apt-get update

RUN apt-get install -y wget

RUN wget http://ftp.de.debian.org/debian/pool/main/g/glibc/multiarch-support_2.28-10+deb10u1_amd64.deb

RUN dpkg -i multiarch-support_2.28-10+deb10u1_amd64.deb

RUN apt-get install -y libodbc1

RUN apt-get install -y unixodbc-dev

RUN pecl install sqlsrv

RUN pecl install pdo_sqlsrv

RUN echo "extension=pdo_sqlsrv.so" >> `php --ini | grep "Scan for additional .ini files" | sed -e "s|.*:\s*||"`/30-pdo_sqlsrv.ini

RUN echo "extension=sqlsrv.so" >> `php --ini | grep "Scan for additional .ini files" | sed -e "s|.*:\s*||"`/30-sqlsrv.ini

RUN rm multiarch-support_2.28-10+deb10u1_amd64.deb

# RUN ACCEPT_EULA=Y apt-get -y install mssql-tools

RUN ACCEPT_EULA=Y apt-get install -y msodbcsql17

COPY 000-default.conf /etc/apache2/sites-available/000-default.conf

COPY apache2.conf /etc/apache2/apache2.conf

COPY openssl.cnf /etc/ssl/openssl.cnf

COPY php.ini /etc/php/7.4/apache2/php.ini

RUN a2enmod rewrite

RUN /etc/init.d/apache2 restart

And this is the Dockerfile that we used:

FROM jamesjones/test-base:latest

USER root

COPY . /var/www/html

WORKDIR /var/www/html

RUN chown -R www-data:www-data /var/www/html

COPY v1/application/config/config.prod.php /var/www/html/v1/application/config/config.php

COPY v1/application/config/database.prod.php /var/www/html/v1/application/config/database.php

COPY v1/application/config/routes.prod.php /var/www/html/v1/application/config/routes.php

COPY v2/application/config/config.prod.php /var/www/html/v2/application/config/config.php

COPY v2/application/config/database.prod.php /var/www/html/v2/application/config/database.php

COPY v2/application/config/routes.prod.php /var/www/html/v2/application/config/routes.php

COPY .htaccess.prod /var/www/html/.htaccess

VOLUME /var/www/html

I have tried the steps in the article below, but the problem still persists:

https://cloud.google.com/blog/topics/developers-practitioners/3-ways-optimize-cloud-run-response-times

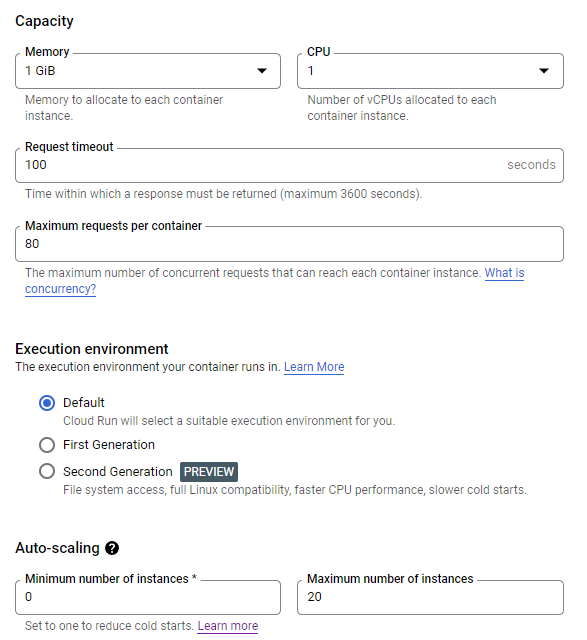

This is our Cloud Run specification.

I have also tried increasing the minimum number of instances in the autoscaling settings to 10, but it made no difference.
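For reference, a minimal sketch of how the concurrency limit could be measured before and after a settings change. It uses only the Python standard library; the function names `fetch_status` and `load_test` and the request counts are assumptions made for illustration, not part of our setup.

```python
import concurrent.futures
import http.client
import urllib.error
import urllib.request


def fetch_status(url, timeout=30.0):
    """Return the HTTP status code of one GET request, or None on a network error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 429 or 503.
        return err.code
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, reset, timed out, or a malformed response.
        return None


def load_test(url, total_requests=200, concurrency=100):
    """Send total_requests GETs with up to `concurrency` in flight at once.

    Returns a dict mapping each status code (or None) to how often it occurred.
    """
    counts = {}
    with concurrent.futures.ThreadPoolExecutor(max_workers=concurrency) as pool:
        for status in pool.map(fetch_status, [url] * total_requests):
            counts[status] = counts.get(status, 0) + 1
    return counts
```

Calling `load_test(service_url, total_requests=1000, concurrency=200)` with the real service URL returns the count per status code; entries under `None` are requests that failed at the network level.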

Are there any alternative ways to solve this issue?

CodePudding user response:

It turns out that the problem was the VPC connector. Our database is reached over TCP/IP, and we have to whitelist a public IP to access it securely, which is why we were using a VPC. We now use a MikroTik router to bind our IP instead of the VPC connector, and the Cloud Run service can finally handle 10,000 requests.
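One way to check whether the network path to the database is the bottleneck is to time raw TCP connections from inside the service. The following is only a sketch under assumptions: `connect_time` and `probe` are hypothetical helper names, and the host and port must be replaced with the real database address (1433 is the SQL Server default port).

```python
import socket
import time
from concurrent.futures import ThreadPoolExecutor


def connect_time(host, port, timeout=5.0):
    """Return the seconds taken to open one TCP connection, or None if it fails."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return time.perf_counter() - start
    except OSError:
        # Refused, unreachable, or timed out.
        return None


def probe(host, port, attempts=50, concurrency=10):
    """Open `attempts` connections, up to `concurrency` at a time, and summarise them."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(lambda _: connect_time(host, port), range(attempts)))
    succeeded = [t for t in results if t is not None]
    return {
        "attempts": attempts,
        "failed": attempts - len(succeeded),
        "max_seconds": max(succeeded) if succeeded else None,
    }
```

Running `probe(db_host, 1433)` once with the VPC connector and once without it gives a rough comparison: a high `failed` count or a large `max_seconds` on one path suggests that path is limiting the connections.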