I'm importing a big CSV file (500M, ~2.000.000 lines, 50 columns) into a PostgreSQL (14.2) table using Laravel 9 (PHP 8.1), it takes around one hour on a dedicated server (Ubuntu 22.04) with 4 CPU and 8G of RAM.

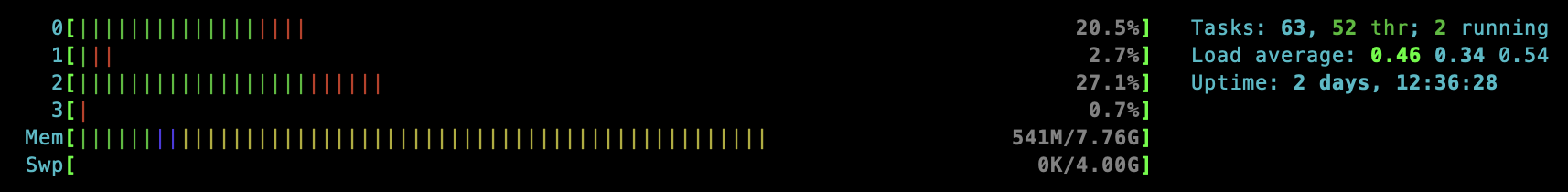

During the import, I can see with htop that only two CPUs are being used and never at full speed:

Is there a way to make a better usage of the server in order to speed up this daily import ?

CodePudding user response:

The only way to use more than a single CPU core is to open several database connecions and use them in parallel. However, even with a single core you can be much faster if you

run many inserts in a single database transaction

use prepared statements to reduce planning overhead

have as few indexes as possible

use

COPYrather thanINSERTfor bulk loading