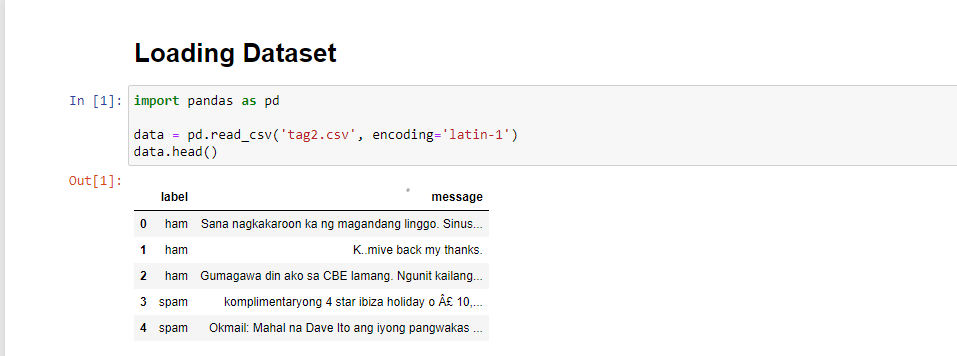

My dataset is a collection of Filipino spam and ham messages.

I divided my dataset into 60% training, 20% testing, and 20% validation.

Split the data into training, testing, and validation sets:

from sklearn.model_selection import train_test_split

data['label'] = data['label'].replace({'ham': 0, 'spam': 1})

X_train, X_test, y_train, y_test = train_test_split(data['message'], data['label'], test_size=0.2, random_state=1)

X_train, X_val, y_train, y_val = train_test_split(X_train, y_train, test_size=0.25, random_state=1) # 0.25 x 0.8 = 0.2

print('Total: {} rows'.format(data.shape[0]))

print('Train: {} rows'.format(X_train.shape[0]))

print(' Test: {} rows'.format(X_test.shape[0]))

print(' Validation: {} rows'.format(X_val.shape[0]))

Train a MultinomialNB model from sklearn:

from sklearn.naive_bayes import MultinomialNB

from sklearn.metrics import accuracy_score

import numpy as np

naive_bayes = MultinomialNB().fit(train_data, y_train)

predictions = naive_bayes.predict(test_data)

Evaluate the Model

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

# Store results under new names so the imported metric functions are not overwritten

accuracy = accuracy_score(y_test, predictions)

precision = precision_score(y_test, predictions)

recall = recall_score(y_test, predictions)

f1 = f1_score(y_test, predictions)

My problem is in the validation step. I get this warning:

warnings.warn("Estimator fit failed. The score on this train-test"

This is how I coded my validation. I don't know if I'm doing it right:

from sklearn.model_selection import cross_val_score

mnb = MultinomialNB()

scores = cross_val_score(mnb,X_val,y_val, cv = 10, scoring='accuracy')

print('Cross-validation scores:{}'.format(scores))

CodePudding user response:

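I did not get any error or warning with the code below, so it may work for you too. Note that here the text is vectorized with CountVectorizer before splitting, so X_val holds numeric features rather than raw strings.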

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.naive_bayes import MultinomialNB

import numpy as np

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

from sklearn.model_selection import cross_val_score

from sklearn.feature_extraction.text import CountVectorizer

df = pd.read_csv("https://raw.githubusercontent.com/jeffprosise/Machine-Learning/master/Data/ham-spam.csv")

vectorizer = CountVectorizer(ngram_range=(1, 2), stop_words='english')

x = vectorizer.fit_transform(df['Text'])

y = df['IsSpam']

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=1)

X_train, X_val, y_train, y_val = train_test_split(X_train, y_train, test_size=0.25, random_state=1) # 0.25 x 0.8 = 0.2

print('Total: {} rows'.format(df.shape[0]))

print('Train: {} rows'.format(X_train.shape[0]))

print(' Test: {} rows'.format(X_test.shape[0]))

print(' Validation: {} rows'.format(X_val.shape[0]))

naive_bayes = MultinomialNB().fit(X_train, y_train)

predictions = naive_bayes.predict(X_test)

# Store results under new names so the imported metric functions are not overwritten

accuracy = accuracy_score(y_test, predictions)

precision = precision_score(y_test, predictions)

recall = recall_score(y_test, predictions)

f1 = f1_score(y_test, predictions)

mnb = MultinomialNB()

scores = cross_val_score(mnb,X_val,y_val, cv = 10, scoring='accuracy')

print('Cross-validation scores:{}'.format(scores))

Result:

Total: 1000 rows

Train: 600 rows

Test: 200 rows

Validation: 200 rows

Cross-validation scores:[1. 0.95 0.85 1. 1. 0.9 0.9 0.8 0.9 0.9 ]
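For what it's worth, the likely reason the code in the question fails is that X_val there still holds raw message strings, which MultinomialNB cannot fit. One way around this is to wrap CountVectorizer and MultinomialNB in a pipeline, so that cross_val_score can take raw text directly. Here is a minimal sketch of that, where the messages and labels are a made-up toy list, not the real dataset:

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Hypothetical toy messages standing in for the real dataset (1 = spam, 0 = ham).
messages = [
    "win a free prize now", "free load promo claim now",
    "claim your free reward today", "you won cash text now",
    "free prize waiting claim today", "promo win cash reward now",
    "see you at lunch later", "call me when you get home",
    "meeting moved to monday", "thanks for the help yesterday",
    "are you coming home tonight", "lunch on monday sounds good",
]
labels = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

# The vectorizer is part of the pipeline, so it is refit on the training
# portion of each fold and raw strings can be passed to cross_val_score.
text_clf = make_pipeline(CountVectorizer(), MultinomialNB())

# cv=3 only because the toy data is tiny; the question uses cv=10.
scores = cross_val_score(text_clf, messages, labels, cv=3, scoring="accuracy")
print("Cross-validation scores: {}".format(scores))
```

Keeping the vectorizer inside the pipeline also means the vocabulary is learned only from the training part of each fold.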

CodePudding user response:

First, note that the name "cross-validation" does not mean you have to run it on a separate validation set, as you have done in your code. There are several reasons to perform cross-validation, including:

- To ensure that all of your dataset is used both for training and for evaluating the performance of your model

- To perform hyperparameter tuning

Your case leans toward the first use. As such, you don't need to split the data into train, validation, and test sets first. Instead, you can perform the 10-fold cross-validation on your entire dataset.
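As a rough sketch of 10-fold cross-validation on the whole dataset, the snippet below uses cross_validate to collect several metrics at once. The messages are a made-up toy list (repeated to give 10 samples per class), and the pipeline setup is my assumption, not code from the question:

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Hypothetical toy data: 10 spam and 10 ham messages (1 = spam, 0 = ham).
spam = ["claim your free prize now", "win cash reward today"] * 5
ham = ["see you at lunch later", "call me when you get home"] * 5
messages = spam + ham
labels = [1] * len(spam) + [0] * len(ham)

# Vectorizer and classifier in one estimator, so raw text can be passed in.
text_clf = make_pipeline(CountVectorizer(), MultinomialNB())

# 10 stratified folds over the entire dataset; no separate validation split.
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=1)
results = cross_validate(
    text_clf, messages, labels, cv=cv,
    scoring=["accuracy", "precision", "recall", "f1"],
)

# One score per fold for each metric, stored under "test_<metric>".
for metric in ["accuracy", "precision", "recall", "f1"]:
    print("{}: {}".format(metric, results["test_" + metric].mean()))
```

Every message ends up in a test fold exactly once, which is the point of the first use case above.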

If you are doing hyperparameter tuning, you can set aside a hold-out set of, say, 30% and use the remaining 70% for cross-validation. Once the best parameters have been determined, you can use the hold-out set to evaluate the model with those parameters.
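The hold-out approach could look something like this. It is a sketch only: the toy messages, the alpha grid, and the step names "vect" and "nb" are my own choices for illustration:

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline

# Hypothetical toy data: 10 spam and 10 ham messages (1 = spam, 0 = ham).
spam = ["claim your free prize now", "win cash reward today"] * 5
ham = ["see you at lunch later", "call me when you get home"] * 5
messages = spam + ham
labels = [1] * len(spam) + [0] * len(ham)

# Keep 30% aside as the hold-out set; the other 70% is used for tuning.
X_dev, X_holdout, y_dev, y_holdout = train_test_split(
    messages, labels, test_size=0.3, stratify=labels, random_state=1
)

pipeline = Pipeline([("vect", CountVectorizer()), ("nb", MultinomialNB())])

# Illustrative grid over the MultinomialNB smoothing parameter alpha.
param_grid = {"nb__alpha": [0.1, 0.5, 1.0]}

# 5-fold cross-validation on the 70% portion picks the best alpha,
# then the best pipeline is refit on all of that portion (refit=True).
search = GridSearchCV(pipeline, param_grid, cv=5, scoring="accuracy")
search.fit(X_dev, y_dev)

# Final evaluation on data that played no part in the tuning.
holdout_accuracy = search.score(X_holdout, y_holdout)
print("Best parameters: {}".format(search.best_params_))
print("Hold-out accuracy: {}".format(holdout_accuracy))
```

With a real dataset you would probably use more folds and a wider grid; cv=5 here is only because the toy data is small.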

Some references:

https://www.analyticsvidhya.com/blog/2021/11/top-7-cross-validation-techniques-with-python-code/

https://towardsdatascience.com/train-test-split-and-cross-validation-in-python-80b61beca4b6