Here's a very simple demo:

    class ViewController: UIViewController {
        override func viewDidLoad() {
            super.viewDidLoad()
            for i in 0..<500000 {
                DispatchQueue.global().async {
                    print(i)
                }
            }
        }
    }

When I run this demo in the simulator, the memory usage goes up to ~17MB and then drops to ~15MB in the end. However, if I comment out the dispatch code and keep only the print() line, the memory usage is only ~10MB. The size of the increase varies with the loop count.

Is there a memory leak? I tried Leaks and didn't find anything.

CodePudding user response:

Memory used is not memory leaked.

There is a certain amount of overhead associated with certain OS services. I remember answering a similar question posed by someone using a WebView. There are global caches. There is simply code that has to be paged in from disk to memory (which is big with WebKit), and once the code is paged in, it's incredibly unlikely to ever be paged out.

I've not looked at the libdispatch source code lately, but GCD maintains one or more pools of threads that it uses to execute the blocks you enqueue. Every one of those threads has a stack. The default stack size for the main thread on macOS is 8MB; secondary threads, which is what GCD's workers are, get a smaller default (Apple's Threading Programming Guide lists 512KB for secondary threads on both macOS and iOS, and 1MB for the iOS main thread). Either way, with dozens of worker threads alive, it adds up. Once GCD creates those threads, why would it shut them down? Especially when you've shown the OS that you're going to rapidly queue 500K operations?
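
If you would rather measure the stack size than take a number on faith, here is a minimal sketch that asks a GCD worker thread for its own stack size. It assumes a Darwin platform (macOS or iOS), because pthread_get_stacksize_np is a Darwin-specific call; the function name reportWorkerStackSize is made up for this example. Note that this is reserved address space, not necessarily memory that has actually been touched.

```swift
import Foundation

/// Prints the stack size of whichever GCD worker thread picks up the block.
/// Hypothetical helper for illustration; Darwin-only.
func reportWorkerStackSize() {
    let done = DispatchSemaphore(value: 0)
    DispatchQueue.global().async {
        // pthread_self() here is the GCD worker thread, not the caller.
        let bytes = pthread_get_stacksize_np(pthread_self())
        print("GCD worker stack size: \(bytes / 1024) KB")
        done.signal()
    }
    // Block the caller until the worker has printed its result.
    done.wait()
}

reportWorkerStackSize()
```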

The OS is optimizing for performance/speed at the expense of memory use. You don't get to control that. It's not a "leak", which is memory that's been allocated but has no live references to it. This memory surely has live references to it; they're just not under your control. If you want more (albeit different) visibility into memory usage, look into the vmmap command. It can (and will) show you things that might surprise you.

CodePudding user response:

When looking at memory usage, one has to run the cycle several times before concluding that there is a “leak”. It could just be caching.

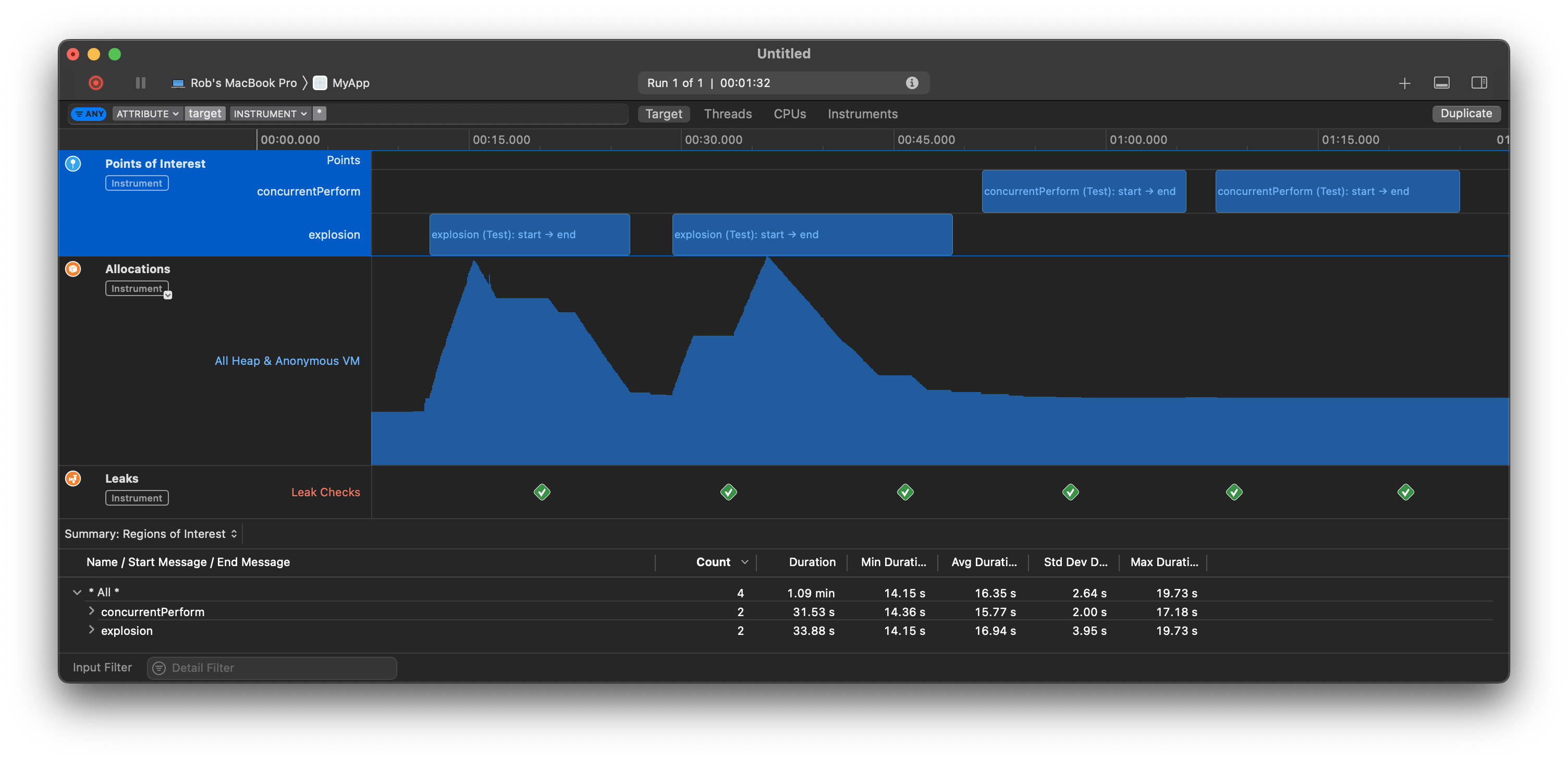

In this particular case, you might see memory growth after the first iteration, but it will not continue to grow on subsequent iterations. If it were a true leak, the post-peak baseline would continue to creep up. But it does not. Note that the baseline after the second peak is basically the same as after the first peak.
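
To make "run the cycle several times" concrete, here is a rough sketch that repeats the workload and logs the process's resident memory after each pass. It is an illustration under some assumptions: residentMemoryBytes and runCycle are hypothetical helper names, the code is Darwin-only (it uses the Mach task_info call), and resident size is not the exact figure Xcode's memory gauge reports, so compare the trend across cycles rather than the absolute numbers.

```swift
import Foundation

/// Resident memory of the current process in bytes, or nil if the Mach call fails.
func residentMemoryBytes() -> UInt64? {
    var info = mach_task_basic_info()
    var count = mach_msg_type_number_t(
        MemoryLayout<mach_task_basic_info>.size / MemoryLayout<natural_t>.size)
    let status = withUnsafeMutablePointer(to: &info) { infoPtr in
        infoPtr.withMemoryRebound(to: integer_t.self, capacity: Int(count)) { rawPtr in
            task_info(mach_task_self_, task_flavor_t(MACH_TASK_BASIC_INFO), rawPtr, &count)
        }
    }
    return status == KERN_SUCCESS ? UInt64(info.resident_size) : nil
}

/// Dispatches `count` trivial blocks to the global queue and waits for all of them.
func runCycle(count: Int) {
    let group = DispatchGroup()
    for i in 0..<count {
        DispatchQueue.global().async(group: group) {
            _ = i &* 2   // cheap stand-in for print(i)
        }
    }
    group.wait()
}

// A leak would show the post-cycle figure climbing on every pass;
// caching shows a jump after the first pass and a flat line afterwards.
for cycle in 1...3 {
    runCycle(count: 100_000)
    if let bytes = residentMemoryBytes() {
        print("after cycle \(cycle): \(bytes / 1_048_576) MB resident")
    }
}
```

The group.wait() call blocks the calling thread, which is fine in a command-line test but should not be done on the main thread of an app.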

As an aside, the memory characteristics here are a result of the thread explosion (which you should always avoid). Consider:

    for i in 0 ..< 500_000 {
        DispatchQueue.global().async {
            print(i)
        }
    }

That dispatches half a million work items to a queue that can only support 64 worker threads at a time. That is “thread-explosion” exceeding the worker thread pool.

You should instead do the following, which constrains the degree of concurrency with concurrentPerform:

    DispatchQueue.global().async {
        DispatchQueue.concurrentPerform(iterations: 500_000) { i in
            print(i)
        }
    }

That achieves the same thing, but limits the degree of concurrency to the number of available CPU cores. That avoids many problems (specifically, it avoids exhausting the limited worker thread pool, which could deadlock other systems), and it also avoids the spike in memory. (See the above graph, where there is no spike after either of the latter two concurrentPerform processes.)
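
If you want to see the bound for yourself, the following sketch counts how many iterations of a concurrentPerform call are in flight at once and compares the peak with the core count. ConcurrencyMeter and peakConcurrency are names invented for this example, and the usleep call is only a stand-in for real work; treat it as a rough experiment, not a benchmark.

```swift
import Foundation

/// Tracks how many iterations are running at the same moment (hypothetical helper).
final class ConcurrencyMeter {
    private let lock = NSLock()
    private var current = 0
    private(set) var peak = 0

    func enter() {
        lock.lock()
        current += 1
        peak = max(peak, current)
        lock.unlock()
    }

    func leave() {
        lock.lock()
        current -= 1
        lock.unlock()
    }
}

/// Runs `iterations` short tasks with concurrentPerform and returns the
/// highest number that were observed running simultaneously.
func peakConcurrency(iterations: Int) -> Int {
    let meter = ConcurrencyMeter()
    DispatchQueue.concurrentPerform(iterations: iterations) { _ in
        meter.enter()
        usleep(100)   // simulate a small amount of work
        meter.leave()
    }
    // concurrentPerform returns only after every iteration has finished,
    // so reading `peak` here is safe.
    return meter.peak
}

print("active cores:", ProcessInfo.processInfo.activeProcessorCount)
print("peak concurrent iterations:", peakConcurrency(iterations: 10_000))
```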

So, while the thread-explosion scenario does not actually leak, it should be avoided at all costs because of both the memory spike and the potential deadlock risks.