Hi all, I have a list of links, and I am fetching their responses with curl using this command:

curl -K file.txt

I am saving the responses to a file.

The problem is that the output is unstructured: the results of all the curl requests end up together in one file. I want to filter the responses in two possible ways.

1 - The end result in the file should be organized so that each URL appears against its response, or in some other organized way, e.g.

CodePudding user response:

This example assumes that file.txt exists.

It tests whether HelloWorld appears in each response.

The if statements are written on three separate lines for readability.

responses.txt will contain the result.

An intermediary file, /var/tmp/response-tmp.txt, stores each HTTP response in turn.

Give this a try:

regex="HelloWorld"

rm -f responses.txt 2>/dev/null

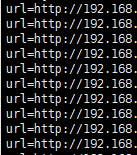

for url in $(sed -n 's/^[[:blank:]]*url[[:blank:]]*=[[:blank:]]*//gIp' file.txt); do

curl -w "http_code %{http_code}" -Ns --url "${url}" > /var/tmp/response-tmp.txt 2>/dev/null

if [[ $? -eq 0 ]] ; then

if tail -1 /var/tmp/response-tmp.txt 2>/dev/null | grep -aq "http_code 2[0-9][0-9]$" 2>/dev/null ; then

if grep -aq "${regex}" /var/tmp/response-tmp.txt 2>/dev/null ; then

printf "Heading %s\nResponse " "${url}"

# Print the body without the 13-byte "http_code NNN" trailer added by -w
head -c -13 /var/tmp/response-tmp.txt

fi

fi

fi

done >> responses.txt
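
The same idea can be split into small helper functions, which may make the URL extraction and the status trailer easier to check on their own. This is only a sketch under a few assumptions: the function names (extract_urls, status_of, body_of, fetch_all) and the "URL:", "Status:" and "Response:" labels are made up for illustration, the config lines are assumed to look like url = "..." (lowercase, quotes optional), and unlike the script above it records every response that curl manages to fetch rather than only the 2xx ones that match the regex.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; none of these names come from the original answer.

# Print the URLs found in a curl config file, one per line.
# Assumes lines of the form: url = "https://..." (quotes optional).
extract_urls() {
    sed -n -E 's/^[[:blank:]]*url[[:blank:]]*=[[:blank:]]*"?([^"[:space:]]+)"?[[:blank:]]*$/\1/p' "$1"
}

# Print the status code from a file written with
#   curl -s -w 'http_code %{http_code}' ...
# The code is the last 3 bytes of that file.
status_of() {
    tail -c 3 "$1"
}

# Print the body, i.e. everything except the 13-byte "http_code NNN" trailer.
# Uses the file size instead of GNU-only "head -c -13".
body_of() {
    local size
    size=$(( $(wc -c < "$1") ))
    [ "$size" -ge 13 ] || return 1
    head -c "$(( size - 13 ))" "$1"
}

# Fetch every URL listed in config file $1 and append a labelled record to $2.
fetch_all() {
    local config=$1 out=$2 tmp url
    tmp=$(mktemp) || return 1
    : > "$out"
    extract_urls "$config" | while IFS= read -r url; do
        if curl -s -w 'http_code %{http_code}' --url "$url" > "$tmp" 2>/dev/null; then
            printf 'URL: %s\nStatus: %s\nResponse: ' "$url" "$(status_of "$tmp")" >> "$out"
            body_of "$tmp" >> "$out"
            printf '\n\n' >> "$out"
        fi
    done
    rm -f "$tmp"
}
```

After sourcing these functions, running fetch_all file.txt responses.txt should leave one URL/Status/Response record per link in responses.txt. The HelloWorld filter from the script above could be added back with a grep on the temporary file before the printf.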