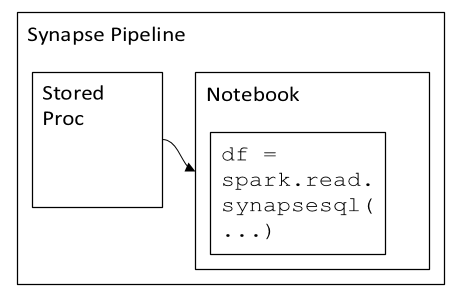

I want to know how I can run a stored procedure that I created in a dedicated SQL pool from a Spark pool (Azure Synapse). Also, can we run SQL queries from a notebook to access data in the dedicated SQL pool?

Answer:

It is possible to do this (e.g. using an ODBC connection, as described …).
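A minimal sketch of the ODBC route from a Python (PySpark) notebook. It assumes `pyodbc` and Microsoft's "ODBC Driver 18 for SQL Server" are available on the Spark pool and that SQL authentication is used. The helper names (`build_connection_string`, `build_exec_statement`, `run_sql`, `run_stored_procedure`) are hypothetical, not part of any Synapse API.

```python
# Sketch: run SQL (including a stored procedure) against a Synapse dedicated SQL
# pool from a Spark pool notebook over ODBC.
# ASSUMPTIONS: pyodbc and "ODBC Driver 18 for SQL Server" are installed on the
# Spark pool; SQL authentication is used; all names here are hypothetical.


def build_connection_string(server, database, user, password,
                            driver="ODBC Driver 18 for SQL Server"):
    """Build an ODBC connection string for a dedicated SQL pool endpoint."""
    parts = {
        "Driver": "{" + driver + "}",
        "Server": f"tcp:{server},1433",  # SQL endpoint on the default port 1433
        "Database": database,            # the dedicated SQL pool name
        "Uid": user,
        "Pwd": password,                 # values containing ';' would need {...} quoting
        "Encrypt": "yes",
        "TrustServerCertificate": "no",
        "Connection Timeout": "30",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"


def build_exec_statement(proc_name, n_params):
    """Return 'EXEC <proc> ?, ?, ...' with one ODBC parameter marker per argument."""
    placeholders = ", ".join("?" for _ in range(n_params))
    return f"EXEC {proc_name} {placeholders}".rstrip()


def run_sql(conn_str, sql, *params):
    """Execute one statement and return its rows (empty list if none)."""
    import pyodbc  # imported here so the string helpers work without pyodbc

    # autocommit=True so changes made by the statement persist without commit()
    conn = pyodbc.connect(conn_str, autocommit=True)
    try:
        cursor = conn.cursor()
        cursor.execute(sql, *params)
        # cursor.description is None when there is no result set to fetch
        return cursor.fetchall() if cursor.description else []
    finally:
        conn.close()


def run_stored_procedure(conn_str, proc_name, *params):
    """Call a stored procedure, passing arguments as ODBC parameters."""
    return run_sql(conn_str, build_exec_statement(proc_name, len(params)), *params)
```

Usage would look like `conn_str = build_connection_string("myworkspace.sql.azuresynapse.net", "mypool", "sqluser", password)`, then `run_stored_procedure(conn_str, "dbo.usp_refresh_sales", "2024-01-01")` or `run_sql(conn_str, "SELECT TOP 10 * FROM dbo.sales")`; the workspace, pool, user and procedure names are placeholders. Passing arguments as `?` parameters rather than formatting them into the SQL string avoids quoting problems and SQL injection. In a real notebook you would normally read the password from Azure Key Vault rather than hard-coding it, and for pulling whole tables into Spark DataFrames the Synapse dedicated SQL pool connector is usually a better fit than fetching rows over ODBC.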

Is there a particular reason you are copying existing data from the SQL pool into Spark? I use a very similar pattern, but I reserve it for things I can't already do in SQL, such as sophisticated transforms, RegEx, hard maths, complex string manipulation, etc.