I am building a deep learning model for regression:

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

# X_train, Y_train and early_stopping are defined elsewhere (not shown here)
model = keras.Sequential([
    keras.layers.InputLayer(input_shape=np.shape(X_train)[1:]),
    keras.layers.Conv1D(filters=30, kernel_size=3, activation=tf.nn.tanh),
    keras.layers.Dropout(0.1),
    keras.layers.AveragePooling1D(pool_size=2),
    keras.layers.Conv1D(filters=20, kernel_size=3, activation=tf.nn.tanh),
    keras.layers.Dropout(0.1),
    keras.layers.AveragePooling1D(pool_size=2),
    keras.layers.Flatten(),
    keras.layers.Dense(30, activation=tf.nn.tanh),
    keras.layers.Dense(20, activation=tf.nn.tanh),
    keras.layers.Dense(10, activation=tf.nn.tanh),
    keras.layers.Dense(3),  # three regression outputs, linear activation
])

model.compile(loss='mse', optimizer='adam', metrics=['mae'])

# model.fit returns a History object with per-epoch loss/mae values
history = model.fit(
    X_train,
    Y_train,
    epochs=300,
    batch_size=32,
    validation_split=0.2,
    shuffle=True,
    callbacks=[early_stopping],
)
```

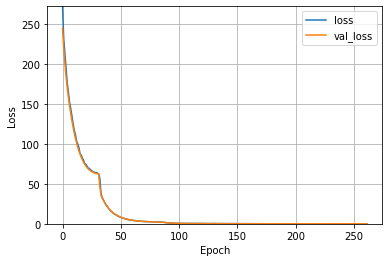

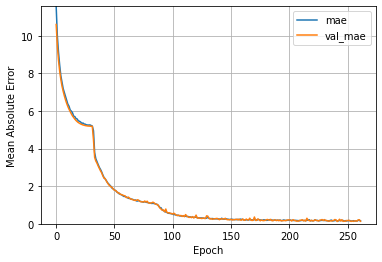

During training, the loss function (and MAE) exhibit this strange behavior:

What does this trend indicate? Could it mean that the model is overfitting?

Answer:

It looks to me as though your optimiser is changing (decreasing) the learning rate at the points where the curves suddenly bend.
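One way to test this explanation is to locate the epochs where the loss curve bends most sharply and compare them with the learning rate actually in use at those epochs. The sketch below assumes you kept the `History` object returned by `model.fit` (its `history['loss']` list holds one value per epoch). `find_bends` and its `top_k` parameter are hypothetical helpers, and ranking epochs by the second difference of the log-loss is only one rough way to define a "bend"; the loss values used here are synthetic.

```python
import numpy as np


def find_bends(losses, top_k=3):
    """Return the epochs where the loss curve changes slope most sharply.

    Heuristic (an assumption, not a Keras feature): work on log(loss) so that
    bends late in training, where losses are small, are not dwarfed by early
    ones, then rank epochs by the absolute second difference of the curve.
    """
    log_loss = np.log(np.asarray(losses, dtype=float))
    # np.diff(..., n=2)[i] compares the slope before and after epoch i + 1
    curvature = np.abs(np.diff(log_loss, n=2))
    ranked = np.argsort(curvature)[::-1][:top_k]
    return sorted(int(i) + 1 for i in ranked)


# Synthetic curve: the loss decays steadily, then much more slowly from
# epoch 50 on, as it might after a learning-rate drop.
epochs = np.arange(100)
losses = np.where(
    epochs < 50,
    np.exp(-0.05 * epochs),
    np.exp(-2.5 - 0.005 * (epochs - 50)),
)
print(find_bends(losses, top_k=1))  # → [50]
```

With real training curves you would call `find_bends(history.history['loss'])`. Note that with `optimizer='adam'` and only an early-stopping callback, Keras uses a fixed nominal learning rate (0.001 by default), so if the bends do line up with a learning-rate change, look for a schedule or a callback such as `ReduceLROnPlateau` that is responsible.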

Answer:

I think there is an issue with your dataset. Your training and validation losses have exactly the same values, which is practically impossible if the two sets really contain different samples.

Please check your dataset and shuffle it before splitting.
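Keras takes `validation_split` from the last samples of the arrays passed to `fit`, before any shuffling, so `shuffle=True` does not mix those samples into training. The sketch below shuffles once with a fixed seed, splits manually, and counts validation rows that also appear verbatim in the training set, since duplicates of that kind can make training and validation losses nearly identical. `shuffled_split`, `count_leaked_rows`, the seed and the 0.2 fraction are illustrative assumptions, and the arrays are synthetic.

```python
import numpy as np


def shuffled_split(X, Y, val_fraction=0.2, seed=0):
    """Shuffle X and Y with the same permutation, then split off a validation set."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(len(X))
    X, Y = X[perm], Y[perm]
    n_val = int(len(X) * val_fraction)
    # returns (X_train, Y_train, X_val, Y_val)
    return X[n_val:], Y[n_val:], X[:n_val], Y[:n_val]


def count_leaked_rows(X_tr, X_val):
    """Count validation samples that also occur exactly in the training set."""
    train_rows = {row.tobytes() for row in X_tr}
    return sum(row.tobytes() in train_rows for row in X_val)


# Synthetic data shaped like Conv1D input: (samples, timesteps, channels)
rng = np.random.default_rng(42)
X = rng.normal(size=(100, 16, 1))
Y = rng.normal(size=(100, 3))
X = np.concatenate([X, X[:10]])  # deliberately duplicate 10 samples
Y = np.concatenate([Y, Y[:10]])

X_tr, Y_tr, X_val, Y_val = shuffled_split(X, Y)
print(X_tr.shape, X_val.shape)
print(count_leaked_rows(X_tr, X_val))
```

After a split like this you would pass `validation_data=(X_val, Y_val)` to `model.fit` instead of `validation_split`, so you control exactly which samples are held out.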