I am using mean_squared_error from sklearn.metrics and want to apply it to columns of a DataFrame.

I want to calculate the MSE element by element between two columns that come from two different DataFrames.

When I try this:

mean_squared_error(train_df_test.iloc[:,:1], ideal_df_test.iloc[:,:1])

I only get a single value back. I expected one value per row of that column.

That is how it works with element-wise multiplication, for example:

train_df_test.iloc[:,:1] * ideal_df_test.iloc[:,:1]

Output:

| x      | y1 |
| ------ | -- |
| -20.00 | 10 |
| -19.99 | 20 |

What am I doing wrong here?

Answer:

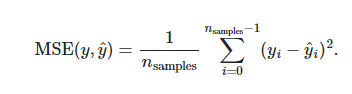

The MSE takes the errors of your model (the differences between the true values and the predicted ones), squares them, and then averages them.

So getting a single scalar as output, rather than a vector of values, is the expected behavior.

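This is the formula:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

where $y_i$ are the true values, $\hat{y}_i$ the predicted values, and $n$ the number of rows.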
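To make the scalar-versus-vector distinction concrete, here is a minimal NumPy sketch using made-up toy arrays (3 rows, 2 columns). Squaring the differences gives one value per row and column; averaging everything gives the single number that sklearn's mean_squared_error returns by default (multioutput='uniform_average', which here equals the overall mean because every column has the same number of rows). Passing multioutput='raw_values' to sklearn gives one value per column, like per_column below, but still never one value per row.

```python
import numpy as np

# Hypothetical toy data: 3 rows (samples) and 2 columns (outputs)
y_true = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
y_pred = np.array([[1.5, 10.0], [2.0, 18.0], [2.0, 30.0]])

squared = (y_true - y_pred) ** 2   # shape (3, 2): one squared error per row and column
per_column = squared.mean(axis=0)  # shape (2,): one MSE per column
overall = squared.mean()           # scalar: average over every element

print(squared.shape, per_column.shape)  # (3, 2) (2,)
print(overall)                          # 0.875
```

The per-row values only exist before the averaging step, which is why the metric itself cannot return them.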

If you want a vector of squared errors as output (I am not sure what the purpose would be), you can use the following function, which takes two columns as input:

def squared_errors(col_true, col_pred):
    # Element-wise squared error: one value per row
    return (col_true - col_pred) ** 2
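A usage sketch, with hypothetical DataFrames and column names (y1, y7) standing in for train_df_test and ideal_df_test. One detail worth checking: iloc[:, :1] returns a one-column DataFrame, and pandas aligns DataFrame arithmetic on column labels as well as on the row index, so subtracting two columns with different names gives all-NaN results. Selecting with iloc[:, 0] returns a Series, which aligns on the row index only.

```python
import pandas as pd

def squared_errors(col_true, col_pred):
    # Element-wise squared error: one value per row
    return (col_true - col_pred) ** 2

# Hypothetical data; both frames share the default 0..2 row index
train_df_test = pd.DataFrame({"y1": [1.0, 2.0, 3.0]})
ideal_df_test = pd.DataFrame({"y7": [1.5, 2.0, 2.0]})

# iloc[:, 0] gives Series, so the column names do not have to match
errors = squared_errors(train_df_test.iloc[:, 0], ideal_df_test.iloc[:, 0])

print(errors.tolist())  # [0.25, 0.0, 1.0]
print(errors.mean())    # 1.25 / 3, the same value the MSE would give
```

Taking the mean of the per-row squared errors brings you back to the single MSE value.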