I wonder how a PostgreSQL query can generate a column, say 'has_alarm1', that takes the value "1", "0", or "null" when, respectively: i) any row of a certain GROUP of rows has a value > 0 in an existing column, say 'alarm1'; ii) no row of the GROUP has an 'alarm1' value > 0 and at least one row has 'alarm1' value = "0"; or iii) all rows of the GROUP have 'alarm1' value = "null".

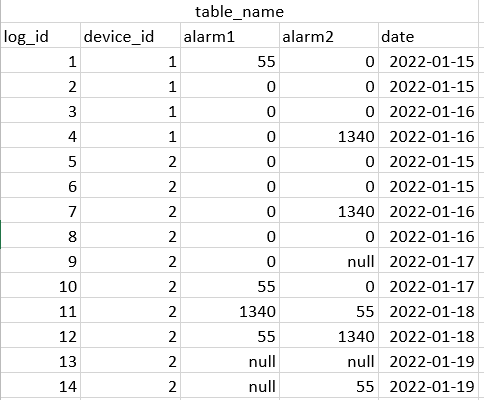

Let me further illustrate that with an example and images:

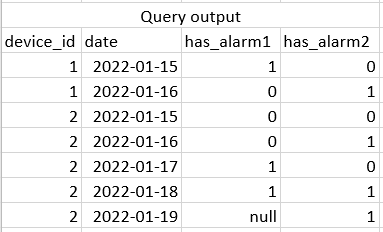

Here is the intended query output:

i) First and foremost, observe that the rows in 'table_name' (the input table: 1st figure) are at a level below the device_id-date (what I call 'log level'), meaning there is more than one row for each device_id-date (e.g. device_id = "1" AND date = "2022-01-16" has 2 rows or logs (log_id's = "3", "4"); device_id = "2" AND date = "2022-01-16" has 2 rows (log_id's = "7", "8")). Meanwhile, rows in the intended query output (2nd figure) are at the device_id-date level, meaning each device_id-date has exactly one row in the output table (e.g. device_id = "1" AND date = "2022-01-16" has only 1 row (the 2nd row); device_id = "2" AND date = "2022-01-16" has only 1 row (the 4th row));

ii) Observe that the groups are formed by each 'device_id' value. So in the example, there are two groups: device_id = "1" and device_id = "2". I want to aggregate from log level (log_id) to group-date level (i.e. device_id-date) in the output table, as you can see in the 2nd figure.

iii) "Condition1": whenever the device_id-date has at least one log-row with column 'alarm_number' value > "0" (greater than zero), the query will output only one row for that device_id-date with new column 'has_alarm_number' = "1";

iv) "Condition2": whenever the device_id-date has all log rows with column 'alarm_number' value = "Null", the query will output only one row for that device_id-date with new column 'has_alarm_number' = "Null";

v) "Condition2.1": whenever the device_id-date has no log rows with column 'alarm_number' value > "0" AND at least one log row with column 'alarm_number' value = "0", the query will output only one row for that device_id-date with new column 'has_alarm_number' = "0".

Example:

Observe in the 1st figure that device_id = "1" on date "2022-01-15" provides alarm1 = "55" (log_id = "1") and alarm1 = "0" (log_id = "2"). The query then outputs a single row with has_alarm1 = "1" for that device_id-date (see output), because "condition1" is met for that device_id-date. However, when all log rows with device_id = "2" on date "2022-01-19" provide alarm1 = "Null" (log_id's = "13", "14"), the query outputs a single row with has_alarm1 = "Null" for that device_id-date, because "condition2" is met for that device_id-date. Finally, observe that device_id = "1" on "2022-01-16" provides alarm1 = "0" (log_id's = "3", "4"). The query returns a single row with has_alarm1 = "0" for that device_id-date, because now "condition2.1" is met for that device_id-date. Likewise, when device_id = "2" on "2022-01-17" provides alarm2 = "Null" (log_id = "9") and alarm2 = "0" (log_id = "10"), as we can see in column 'alarm2' of the input table, the query returns a single row with has_alarm2 = "0" for that device_id-date, because "condition2.1" is met again.

I hope I have made this case well structured and clear in this post, but please let me know if any further explanations are required. Any solutions or suggestions are truly appreciated. Sincerely,

Nigel.

CodePudding user response:

Use sum() with rows grouped by device_id and date.

    select
        device_id,
        date,
        (sum(alarm1) > 0)::int as has_alarm1,
        (sum(alarm2) > 0)::int as has_alarm2
    from my_table
    group by 1, 2
    order by 1, 2

Note that sum() is null when all its arguments are null, so the comparison and the cast to int yield null as well.
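The sum() approach relies on alarm values never being negative: a negative value could pull the total to zero or below even though some row is > 0. If that cannot be guaranteed, a variant with bool_or() tests each row individually. The sketch below is self-contained and uses only the rows quoted in the question text; alarm values the question does not state are filled with null here, which is an assumption, and the inline my_table stands in for the real table.

```sql
-- Sample rows built from the values quoted in the question.
-- Alarm values not stated in the question are set to NULL (assumption).
with my_table (log_id, device_id, date, alarm1, alarm2) as (
    values
        (1,  1, date '2022-01-15', 55,   null::int),
        (2,  1, date '2022-01-15', 0,    null),
        (3,  1, date '2022-01-16', 0,    null),
        (4,  1, date '2022-01-16', 0,    null),
        (9,  2, date '2022-01-17', null, null),
        (10, 2, date '2022-01-17', null, 0),
        (13, 2, date '2022-01-19', null, null),
        (14, 2, date '2022-01-19', null, null)
)
select
    device_id,
    date,
    -- bool_or() skips NULL inputs and returns NULL when every input is NULL,
    -- so the cast gives 1, 0, or NULL for the three conditions.
    bool_or(alarm1 > 0)::int as has_alarm1,
    bool_or(alarm2 > 0)::int as has_alarm2
from my_table
group by device_id, date
order by device_id, date;
```

With these rows, has_alarm1 should come out as 1 for device 1 on 2022-01-15, 0 for device 1 on 2022-01-16, and null for device 2 on 2022-01-19, while has_alarm2 should be 0 for device 2 on 2022-01-17, matching the cases described in the question.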