I have the following function:

brt <- gbm.step(data = train,

gbm.x = 5:9, # column indices for covariates

gbm.y = 4, # column index for response (presence-absence)

family = "bernoulli",

tree.complexity = ifelse(prNum < 50, 1, 5),

learning.rate = 0.001,

bag.fraction = 0.75,

max.trees = ifelse(s == "Jacaranda.puberula" | s == "Cestrum.intermedium", 50, 10000),

n.trees = 50,

n.folds = 5, # 5-fold cross-validation

silent = TRUE) # avoid printing the cv results

I'm trying to run the abovementioned function but they way I'm using the ifelse inside the max.trees argument returns an error. Is that the right way of coding it? What I'm trying to accomplish is simply using 50, instead of 10000 max.trees for those two species: Jacaranda.puberula or Cestrum.intermedium. Just to inform, the object s comes from another vector called sp, which is a list of species names. Each s is a different species that I'm looping in this function.

Is the problem on the way I am writing the statement or in the fact that I'm using OR as the logical?

EDIT: I think that may be something related to the function. I re-runned the analysis here and it returns:

Error in UseMethod("predict") :

no applicable method for 'predict' applied to an object of class "NULL"

In addition: There were 50 or more warnings (use warnings() to see the first 50)

CodePudding user response:

Generalized Boosted Regression Trees (gbm) does not seem to have any max.trees hyperparameter, you can use the condition for the hyperparameter n.trees instead, which works (or do you mean max_depth)?

n.trees = ifelse(s == "Jacaranda.puberula" | s == "Cestrum.intermedium", 50, 10000)

EDIT:

Without having access to your data, it's difficult to guess exactly what is going wrong. Last time I assumed that package you used is gbm. However, with a sample dataset (BostonHousing from the package mlbench), the below example shows how the gbm implementations from both the gbm and dismo packages work as expected:

library(mlbench)

data(BostonHousing)

head(BostonHousing)

# crim zn indus chas nox rm age dis rad tax ptratio b lstat medv

#1 0.00632 18 2.31 0 0.538 6.575 65.2 4.0900 1 296 15.3 396.90 4.98 24.0

#2 0.02731 0 7.07 0 0.469 6.421 78.9 4.9671 2 242 17.8 396.90 9.14 21.6

#3 0.02729 0 7.07 0 0.469 7.185 61.1 4.9671 2 242 17.8 392.83 4.03 34.7

#4 0.03237 0 2.18 0 0.458 6.998 45.8 6.0622 3 222 18.7 394.63 2.94 33.4

#5 0.06905 0 2.18 0 0.458 7.147 54.2 6.0622 3 222 18.7 396.90 5.33 36.2

#6 0.02985 0 2.18 0 0.458 6.430 58.7 6.0622 3 222 18.7 394.12 5.21 28.7

library(gbm)

set.seed(102) # for reproducibility

# predict target medv given other predictors

gbm1 <- gbm(medv ~ ., data = BostonHousing, #var.monotone = c(0, 0, 0, 0, 0, 0),

distribution = "gaussian", shrinkage = 0.1,

n.trees = 100,

interaction.depth = 3, bag.fraction = 0.5, train.fraction = 0.5,

n.minobsinnode = 10, cv.folds = 5, keep.data = TRUE,

verbose = FALSE, n.cores = 1)

#predict(gbm1)

library(dismo)

# predict target medv given other predictors

gbm2 <- gbm.step(data=BostonHousing, gbm.x = 1:13, gbm.y = 14, family = "gaussian",

max.trees = 10000,

tree.complexity = 5, learning.rate = 0.01, bag.fraction = 0.5)

#predict(gbm2)

# compare predictions

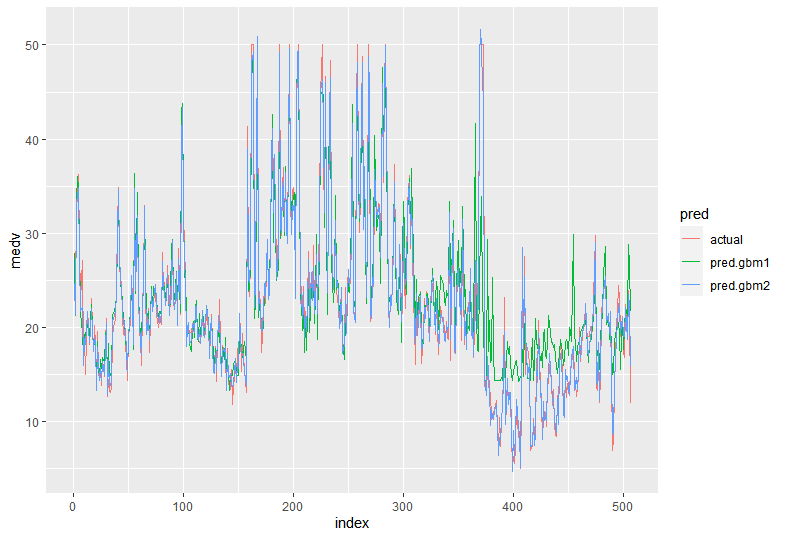

library(tidyverse)

data.frame(index=1:nrow(BostonHousing),

actual=BostonHousing$medv,

pred.gbm1=predict(gbm1),

pred.gbm2=predict(gbm2)) %>%

gather('pred', 'medv', -index) %>%

ggplot(aes(index, medv, group=pred, color=pred)) geom_line()