Generally speaking, we use the convolution operation, data abstraction and integrated, and then sent to all connected neural network for final calculations, essentially matrix operations such as mathematical calculations of a series of integrated operation,

Familiar with matrix friends all know that the matrix of each element, and the other elements are unrelated to the feature of natural way is suitable for the use of parallel computing to accelerate the calculation speed,

The key is coming! Parallel computing is the FPGA chip, such as strength, using FPGA to effectively accelerate the deep learning neural network computing (reasoning), the following is the specific operation show,

1. The deep learning of the neural network environment structures,

2. Install the OpenCL development environment, make the Intel Arria 10 FPGA as OpenCL device, pay attention to Ann Intel corresponding SDK

3. Using Tensorflow as framework, the realization of a simple full connection neural network

(provide a algorithm description example, use the Mnist data set as the training and testing)

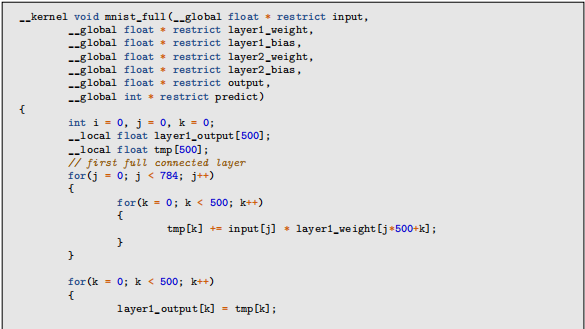

1) 28 x 28 Mnist dataset for each image pixels, each image pixel as input

2) the first set of hidden layers on the 500 neurons, 784 pixels to do calculation with the input image (matrix multiplication)

3) will be the result of using relu function as a result, only keep numerical & gt; 0 as a result, the other is set to 0

4) set 10 second hidden layer neurons, and the first calculated the output of the hidden layer (matrix multiplication), end up with 10 results as output

From the above description of the algorithm, we can see that in fact is the calculation process of several matrix: a [1784] and [784, 500] matrix multiplication, get a [1500] in the middle of the matrix. Then the [1500] in the middle of the matrix and matrix of [500, 10] calculated, end up with a matrix of [1, 10], this is our final result,

4. The neural network training, completing training after get model file

5. In certain operation way, extraction of model parameters of the model file

6. The model parameters as the input parameters, to accelerate the calculation of the FPGA, the realization of reasoning process

The OpenCL implementation of neural network algorithm, roughly as follows:

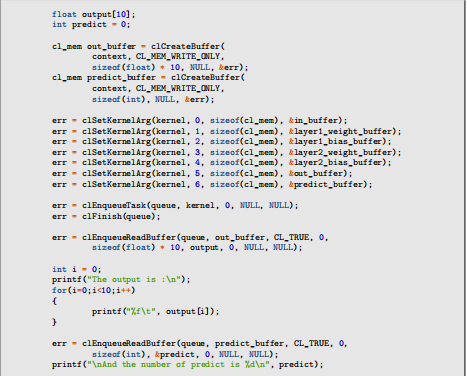

7. Finally using C/C + + program, CPU and FPGA to interact, to verify the correctness of the OpenCL algorithm,

The following is part of the CPU program code,

Through the above steps, we successfully use OpenCL on Intel FPGA implements a simple neural network inference speed,

CodePudding user response:

Thank LZ, has been learning

CodePudding user response:

Hard roof, the building Lord, to OpenCL is not ripe, convenient OpenCL based algorithm implementation details to show it to me?CodePudding user response:

Back upstairs, Intel China innovation center website related FPGA online training courses, have about OpenCL, can look

CodePudding user response:

The building Lord finishing well, continue to learn,CodePudding user response: