import requests as rq
from bs4 import BeautifulSoup as BS
import jieba
import time
import sys
import matplotlib as plt
import matplotlib.pyplot as plt1
import random
import os
import csv
import imageio
import wordcloud

def getHtml():
    # Set request headers so the crawler is less likely to be flagged by anti-crawler checks
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36",
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Connection": "keep-alive"}
    url_python_list = []
    # url_java_list = []
    # url_php_list = []
    # if a.lower() == "python":
    url_python = "https://www.lagou.com/zhaopin/Python/{}/?filterOption=2&sid=9f0a0fe0139a4177a5235849fc13c128"  # Python job listing URL format
    url_java = "https://www.lagou.com/zhaopin/Java/{}/?filterOption=2&sid=fa689b8226cc43cda1fc58081041a81d"  # Java job listing URL format
    url_python_list = [url_python.format(i) for i in range(1, 31)]  # generate the URLs of the first 30 pages
    test_web = "https://www.lagou.com/zhaopin/Python/1/?filterOption=2&sid=9f0a0fe0139a4177a5235849fc13c128"
    # Crawl the job listings on the first 30 pages
    for i in url_python_list:
        rs = rq.get(i, headers=headers)
        statusCode = rs.status_code
        if statusCode == 200:
            print("crawl web {} success".format(i))
            rs.encoding = 'utf-8'
            # return rs.text
            getdata(rs.text)
        else:
            print("crawl web {} failure".format(i))
            return "Failed to get the url HTML"
        # Sleep a random 5-10 seconds so requests are not evenly spaced,
        # a second layer of protection against crawler detection
        x = random.randint(5, 10)
        time.sleep(x)
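The request loop in `getHtml` combines two ideas: filling a page number into a URL template, and sleeping for a random interval between requests. A minimal sketch of that pattern follows, with the HTTP call and the sleep passed in as parameters so the logic can be checked offline. The names `build_page_urls`, `crawl_pages`, `fetch` and `sleep` are hypothetical, and drawing the delay with `random.uniform` instead of `random.randint` is a choice made here, not in the original.

```python
import random
import time
from typing import Callable, Iterable, List, Tuple


def build_page_urls(template: str, first: int, last: int) -> List[str]:
    """Fill page numbers first..last (inclusive) into a URL template containing '{}'."""
    return [template.format(page) for page in range(first, last + 1)]


def crawl_pages(
    urls: Iterable[str],
    fetch: Callable[[str], Tuple[int, str]],  # hypothetical: returns (status_code, text)
    min_delay: float = 5,
    max_delay: float = 10,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Fetch each URL, stop at the first non-200 status, and pause a random
    number of seconds after each successful request so the interval varies."""
    pages = []
    for url in urls:
        status, body = fetch(url)
        if status != 200:
            print("crawl web {} failure".format(url))
            break
        print("crawl web {} success".format(url))
        pages.append(body)
        sleep(random.uniform(min_delay, max_delay))  # randomised gap between requests
    return pages
```

In the real script, `fetch` would wrap `rq.get(url, headers=headers)` and return `(rs.status_code, rs.text)`.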

def getdata(html):
    soup = BS(html, "html.parser")  # parse the HTML
    salary_information = soup.find_all("span", {"class": "money"})  # salary information
    address_information = soup.find_all("span", {"class": "add"})  # company address information
    position_information = soup.find_all("h3")  # job titles
    salary_list = []
    address_list = []
    position_list = []
    information_list = []
    # Build the salary list
    for salary in salary_information:
        sal = salary.text
        salary_list.append(sal)
    # Build the address list
    for address in address_information:
        add = address.text
        address_list.append(add)
    # Build the job title list
    for position in position_information:
        pos = position.string
        position_list.append(pos)
    # Combine into one list of [address, position, salary] records;
    # zip stops at the shortest list, so a missing field cannot raise IndexError
    for add, pos, sal in zip(address_list, position_list, salary_list):
        information_list.append([add, pos, sal])
    writetxt(information_list)
    return information_list
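`getdata` relies on BeautifulSoup's `find_all` to pick out `span.money`, `span.add` and `h3` elements. To illustrate what that extraction does, here is a sketch using only the standard library's `html.parser.HTMLParser` (a swapped-in technique, not the author's). The class and function names are hypothetical, it assumes the page marks salaries, addresses and job titles exactly the way `getdata` expects, and it strips surrounding whitespace, which `.text` in the original does not do.

```python
from html.parser import HTMLParser
from typing import List, Optional


class JobListParser(HTMLParser):
    """Collect the text of <span class="money">, <span class="add"> and <h3> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.salaries: List[str] = []
        self.addresses: List[str] = []
        self.positions: List[str] = []
        self._target: Optional[List[str]] = None  # list receiving the current element's text
        self._tag: Optional[str] = None  # tag name of the element being captured
        self._depth = 0  # nesting depth of that tag, so an inner same-name tag does not end it early
        self._buffer: List[str] = []

    def handle_starttag(self, tag, attrs):
        if self._target is not None:
            if tag == self._tag:
                self._depth += 1
            return  # text inside nested tags such as <em> is still collected by handle_data
        classes = (dict(attrs).get("class") or "").split()
        if tag == "span" and "money" in classes:
            self._target = self.salaries
        elif tag == "span" and "add" in classes:
            self._target = self.addresses
        elif tag == "h3":
            self._target = self.positions
        else:
            return
        self._tag, self._depth, self._buffer = tag, 1, []

    def handle_data(self, data):
        if self._target is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._target is None or tag != self._tag:
            return
        self._depth -= 1
        if self._depth == 0:
            self._target.append("".join(self._buffer).strip())
            self._target = None


def parse_jobs(html: str) -> List[List[str]]:
    """Return [address, position, salary] rows in the same order getdata uses."""
    parser = JobListParser()
    parser.feed(html)
    parser.close()
    # zip stops at the shortest list, so a page with a missing field cannot raise IndexError
    return [[a, p, s] for a, p, s in zip(parser.addresses, parser.positions, parser.salaries)]
```

Pairing the three lists with `zip` rather than indexing by position avoids an `IndexError` when one list is shorter, at the cost of silently dropping unmatched entries.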

# Write the records to a text file, one "^"-separated line per record
def writetxt(txtList, fileName="information.txt"):
    openWay = 'a' if os.path.exists(fileName) else 'w'  # append if the file already exists, otherwise create a new one
    with open(fileName, openWay, encoding="utf-8") as fp:
        for x in txtList:
            line = list(map(str, x))
            line = "^".join(line) + '\n'
            fp.write(line)
    print("{} file written successfully".format(fileName))
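`writetxt` checks `os.path.exists` to choose between `'a'` and `'w'`, but opening a file in `'a'` mode already creates it when it is missing, so a single mode is enough. The sketch below (a hypothetical `append_rows`) shows that simplification. The check that rejects fields containing the separator is an addition of this sketch: a `^` inside a job title would otherwise shift the columns when the line is split again.

```python
from typing import Iterable, Sequence


def append_rows(rows: Iterable[Sequence[object]], file_name: str, sep: str = "^") -> None:
    """Append one sep-joined line per row; mode 'a' creates the file if it does not exist."""
    with open(file_name, "a", encoding="utf-8") as fp:
        for row in rows:
            fields = [str(field) for field in row]
            # Refuse fields that contain the separator, since they could not be split back correctly
            if any(sep in field for field in fields):
                raise ValueError("field contains the separator {!r}: {!r}".format(sep, fields))
            fp.write(sep.join(fields) + "\n")
```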

# write a CSV file

Def txt_to_csv (fileCSV, fileTXT='information. TXT') :

The dataList=[]

With the open (fileTXT, 'r', encoding="utf-8") as fp:

Lines=fp. Readlines ()

For the line in lines:

The line=line. Strip () # remove \ n

Row=line. The split (' ^ ') # split data

DataList. Append (row) # list

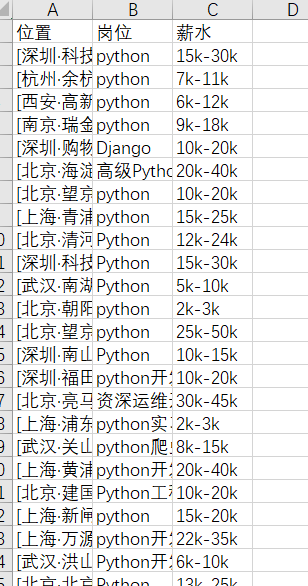

DataList. Insert (0, [" location ", "post", "salary"])

With the open (fileCSV, 'w', newline=', encoding="utf-8 - sig") as fp:

Writer.=the CSV writer (fp)

Writer. Writerows (dataList)

Print (' {} 'file is written to success. The format (fileCSV))

Return dataList
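`txt_to_csv` writes with `utf-8-sig`, which puts a byte-order mark (BOM) at the start of the file. Reading such a file back with plain `utf-8` keeps the BOM, so the first header becomes `'\ufefflocation'` and a lookup like `row["location"]` raises `KeyError`; decoding with `utf-8-sig` strips it. A minimal sketch of the round trip, with hypothetical function names, using `utf-8-sig` on both sides:

```python
import csv
from typing import List, Sequence


def txt_to_csv_rows(txt_path: str, csv_path: str,
                    header: Sequence[str] = ("location", "post", "salary")) -> List[List[str]]:
    """Convert '^'-delimited lines to CSV with a header row, written as utf-8-sig."""
    rows: List[List[str]] = [list(header)]
    with open(txt_path, "r", encoding="utf-8") as fp:
        for line in fp:
            line = line.strip()
            if line:  # skip blank lines instead of writing empty rows
                rows.append(line.split("^"))
    with open(csv_path, "w", newline="", encoding="utf-8-sig") as fp:
        csv.writer(fp).writerows(rows)
    return rows


def read_locations(csv_path: str) -> List[str]:
    """Read the 'location' column back; decoding with utf-8-sig removes the BOM."""
    with open(csv_path, "r", newline="", encoding="utf-8-sig") as fp:
        return [row["location"] for row in csv.DictReader(fp)]
```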

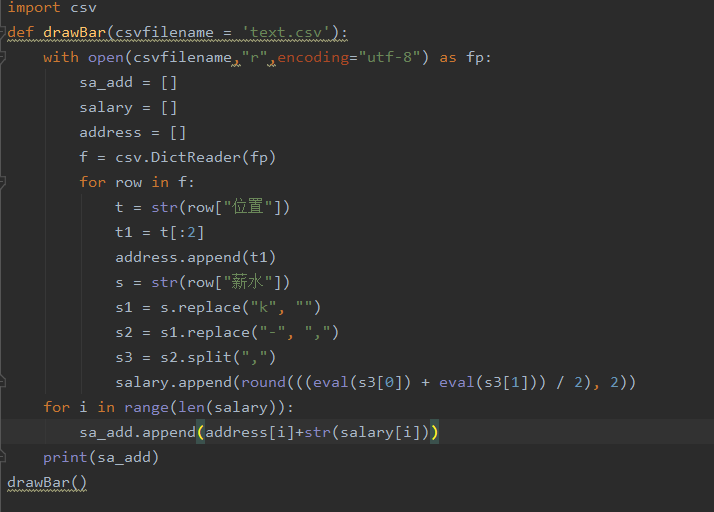

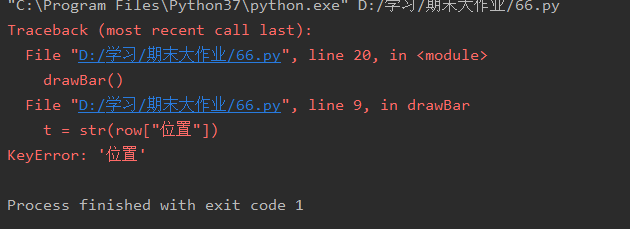

def drawBar(csvfilename='text.csv'):
    # txt_to_csv writes the file as utf-8-sig, so read it back the same way;
    # plain utf-8 would keep the BOM and turn the first header into "\ufefflocation"
    with open(csvfilename, "r", newline="", encoding="utf-8-sig") as fp:
        sa_add = []
        salary = []
        address = []
        f = csv.DictReader(fp)
        for row in f:
            # s = str(row["salary"])
            # s1 = s.replace("k", "")
            # s2 = s1.replace("-", ",")
            # s3 = s2.split(",")
            # salary.append(round((float(s3[0]) + float(s3[1])) / 2, 2))
            t = str(row["location"])
            t1 = t[:2]
            address.append(t1)
        for i in range(len(salary)):
            sa_add.append(address[i] + str(salary[i]))
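The commented-out lines in `drawBar` outline how a salary range such as `15k-25k` becomes a single number (the midpoint, in thousands), and `t[:2]` keeps the first two characters of the location. A minimal sketch of that preparation step, assuming the salary text looks like `Nk-Mk` and that locations begin with a two-character city name such as 北京 (Beijing). It parses with a regular expression and `float` instead of `replace`/`eval`, skips salaries that do not match, and averages the midpoints per city, which is the kind of table a bar chart would plot. The function names are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

# Matches ranges like "15k-25k" or "10K - 15K"
SALARY_RANGE = re.compile(r"\s*(\d+(?:\.\d+)?)[kK]\s*-\s*(\d+(?:\.\d+)?)[kK]\s*")


def salary_midpoint(text: str) -> Optional[float]:
    """Turn '15k-25k' into 20.0; return None when the text is not an 'Nk-Mk' range."""
    match = SALARY_RANGE.fullmatch(text)
    if match is None:
        return None
    low, high = float(match.group(1)), float(match.group(2))
    return round((low + high) / 2, 2)


def average_salary_by_city(rows: Iterable[Tuple[str, str]], prefix_len: int = 2) -> Dict[str, float]:
    """Group (location, salary) pairs by the first prefix_len characters of the
    location, as drawBar does with t[:2], and average the salary midpoints."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for location, salary in rows:
        mid = salary_midpoint(salary)
        if mid is None:
            continue  # skip salaries that are not a numeric range, e.g. "negotiable"
        city = location[:prefix_len]
        totals[city] += mid
        counts[city] += 1
    return {city: round(totals[city] / counts[city], 2) for city in totals}
```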

# def drawPie():

# def drawScatter():

x = getHtml()

y = txt_to_csv("text.csv")