EDIT/UPDATE: I finally found a solution - the following worked:

df=df.replace(r'^\s*$', np.nan, regex=True)
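For readers who want to try it, here is a minimal sketch of that call on made-up data. The sample values are assumptions for illustration, not rows from the real file.

```python
import numpy as np
import pandas as pd

# Hypothetical sample cells: blank ones hold "", " " or "  "
df = pd.DataFrame(
    {
        "column1": ["12", "  ", " 1"],
        "column2": ["abc", " ", ""],
    }
)

# Cells that are empty or contain only whitespace become NaN
cleaned = df.replace(r"^\s*$", np.nan, regex=True)

print(cleaned.isna())
```

Note that a cell like ' 1' is left unchanged, because the pattern only matches cells that are entirely whitespace.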

I am trying to replace ' ' values with null values in Python. Essentially, I am loading a text file into a pandas DataFrame and splitting it up using substrings. Every row in the file has the same number of characters, but everything arrives in a single column. I need to convert this into distinct columns, each with its own row values. Example below:

df['column1'] = df['data'].str[0:2]

df['column2'] = df['data'].str[5:14]

In cases where a column's row value should be null, it is instead a space or a series of spaces (' '). I have tried the following:

df=df.replace(' ', "Null")

df=df.replace(' ', None)

df=df.replace('', None)

df=df.replace(r'\s*', None, regex=True)

None of these worked for me (they don't even change cells whose entire value is ' '). The spaces remain, both in cells with a space next to a number (like ' 1' rather than '1') and in cells which should be empty. How can I solve this?

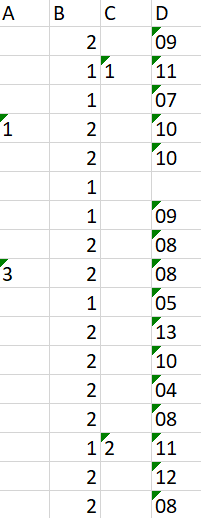

Example of data is below. Where there appears to be a blank value, it is actually one or two spaces (depending on the number of spaces of the cell):\

CodePudding user response:

You may need to match an empty string rather than a space:

df.replace('', None)

If you're unsure whether the cells are empty or contain spaces, you can match any run of whitespace:

df.replace(r'\s*', None, regex=True)

Or, to restrict to full matches:

df.replace(r'^\s*$', None, regex=True)
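To see why the anchors matter, here is a small sketch using only Python's re module on made-up strings. It only illustrates which strings each pattern can match. How pandas applies a match can depend on the pandas version and on the replacement value, so treat it as a way to reason about the patterns rather than a description of pandas internals.

```python
import re

# Hypothetical cell values: three blank ones and two with real content
samples = ["", " ", "  ", " 1", "12"]

unanchored = re.compile(r"\s*")
anchored = re.compile(r"^\s*$")

# \s* can match an empty substring, so it finds a match in every string
unanchored_hits = [s for s in samples if unanchored.search(s)]

# ^\s*$ only matches when the whole string is empty or whitespace
anchored_hits = [s for s in samples if anchored.search(s)]

print(unanchored_hits)
print(anchored_hits)
```

The unanchored pattern does not single out blank cells at all, while the anchored one selects only '', ' ' and '  '.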

CodePudding user response:

Try using str.strip() to remove both leading and trailing spaces from each column after slicing:

df['column1'] = df['data'].str[0:2]

df['column1'] = df['column1'].str.strip()

df['column2'] = df['data'].str[5:14]

df['column2'] = df['column2'].str.strip()
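Stripping on its own leaves blank cells as empty strings rather than nulls, so one possible follow-up is to replace '' with NaN afterwards. The sketch below assumes made-up 14-character rows and reuses the slice positions from the question. The data and the loop are illustrative, not part of the original answer.

```python
import numpy as np
import pandas as pd

# Hypothetical fixed-width rows, 14 characters each
df = pd.DataFrame(
    {
        "data": [
            " 1" + " " * 3 + "123456789",
            "  " + " " * 3 + "987654321",
            "12" + " " * 12,
        ]
    }
)

# Slice positions taken from the question: [0:2] and [5:14]
slices = {"column1": (0, 2), "column2": (5, 14)}

for name, (start, stop) in slices.items():
    # Slice, trim surrounding spaces, then turn empty strings into NaN
    df[name] = df["data"].str[start:stop].str.strip().replace("", np.nan)

print(df[["column1", "column2"]])
```

This handles both cases from the question: ' 1' becomes '1', and cells that held only spaces end up as NaN.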