Ambari: 2.4.1

Spark: 2.0.0

Hadoop: 2.7.3

ZooKeeper: 3.4.6

After the installation completed, submitting Spark programs through YARN works without problems. Because of a project requirement, I now want to submit my own jobs to Spark in Standalone mode. Can anyone tell me what configuration is needed to make this work? I configured it myself following the standalone Spark setup, but both spark-shell and my own application keep failing with this error:

ERROR StandaloneSchedulerBackend: Application has been killed. Reason: Master removed our application: FAILED

The currently active SparkContext was created at:

(No active SparkContext.)

java.lang.IllegalStateException: Cannot call methods on a stopped SparkContext.

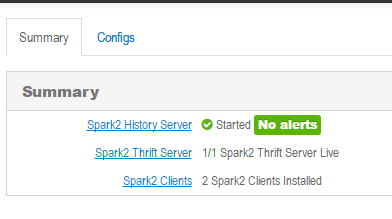

Result of installing Spark through Ambari:

CodePudding user response:

I ran into this problem too. Has yours been solved? If so, please share the solution.

CodePudding user response:

1) You need to start the Spark Master daemon.

2) You need to start the Spark Workers on all your slave nodes.

3) Submit your application using "--master spark://master:7077", or point to this master URL in your application.

4) Last but not least, why do you want to use standalone mode if you already have YARN? This is a strange requirement.
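To make step 3 concrete, here is a minimal Python sketch that only assembles the spark-submit command line for a standalone master. The host name "master" and port 7077 come from the URL above; the function names, the application file my_app.py, and the optional spark_home argument are placeholders I made up for illustration, so adjust them to your cluster.

```python
import shlex


def standalone_master_url(host, port=7077):
    """Build a standalone master URL, e.g. spark://master:7077."""
    return f"spark://{host}:{port}"


def build_submit_command(app, master_host="master", port=7077,
                         spark_home=None, app_args=()):
    """Return the spark-submit argv for a standalone master.

    spark_home is a hypothetical install directory; when it is None,
    spark-submit is assumed to be on the PATH.
    """
    submit = f"{spark_home}/bin/spark-submit" if spark_home else "spark-submit"
    return [submit, "--master", standalone_master_url(master_host, port),
            app, *app_args]


if __name__ == "__main__":
    # Print the command instead of running it, so it can be reviewed first.
    print(shlex.join(build_submit_command("my_app.py")))
```

Run as a script, this prints `spark-submit --master spark://master:7077 my_app.py`. The list can be passed to `subprocess.run` once the master and the workers are actually up.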

CodePudding user response:

OP, how did you solve your problem?

CodePudding user response:

On the master, go to the directory /usr/hdp/2.../spark2/sbin and run ./start-all.sh. That starts standalone mode. The only drawback is that the configuration files can no longer be managed centrally through Ambari.

CodePudding user response:

This is because standalone mode is used relatively rarely. Apart from testing, there is seldom a need for it, so by default Ambari manages the cluster through YARN, and a standalone setup has to be managed by the users themselves. That is easy to understand: "standalone" literally means independent mode.
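Since a standalone setup has to be looked after by hand, a quick check that the master is actually listening can be useful before submitting anything. This is only a rough sketch: the host name "master" and port 7077 are taken from the master URL quoted earlier in the thread, and the function name is my own invention.

```python
import socket


def is_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and name resolution failures.
        return False


if __name__ == "__main__":
    # "master" and 7077 are assumptions; use your own master host and port.
    if is_port_open("master", 7077):
        print("Standalone master is reachable")
    else:
        print("Standalone master is NOT reachable; was start-all.sh run?")
```

An open port only shows that something is listening there. It does not prove that workers have registered with the master, so the master and worker logs are still worth checking.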