This is the table view controller with the list of audios. It successfully fetches all the data from Firebase and displays it in a table. A screenshot is included below the code.

```swift
import UIKit
import AVKit
import AVFoundation
import FirebaseFirestore
import Combine
import SDWebImage

class ListOfAudioLessonsTableViewController: UIViewController, UITableViewDelegate, UITableViewDataSource {

    @IBOutlet var table: UITableView!

    let placeHolderImage = UIImage(named: "placeHolderImage")

    private var viewModel = AudiosViewModel()

    private var cancellable: AnyCancellable?

    override func viewDidLoad() {
        super.viewDidLoad()
        self.viewModel.fetchData()
        self.title = "Audio Lessons"
        table.delegate = self
        table.dataSource = self
        cancellable = viewModel.$audios.sink { _ in
            DispatchQueue.main.async {
                self.table.reloadData()
            }
        } // Do any additional setup after loading the view.
    }

    // Table

    func tableView(_ tableView: UITableView, numberOfRowsInSection section: Int) -> Int {
        print("audios count = ", viewModel.audios.count)
        return viewModel.audios.count
    }

    func tableView(_ tableView: UITableView, cellForRowAt indexPath: IndexPath) -> UITableViewCell {
        let cell = tableView.dequeueReusableCell(withIdentifier: "Cell", for: indexPath)
        let song = viewModel.audios[indexPath.row]
        tableView.tableFooterView = UIView()
        cell.textLabel?.text = song.albumName
        cell.detailTextLabel?.text = song.name
        cell.accessoryType = .disclosureIndicator
        let imageURL = song.audioImageName
        cell.imageView?.sd_imageIndicator = SDWebImageActivityIndicator.gray
        cell.imageView?.sd_setImage(with: URL(string: imageURL),
                                    placeholderImage: placeHolderImage,
                                    options: SDWebImageOptions.highPriority,
                                    context: nil,
                                    progress: nil,
                                    completed: { downloadedImage, downloadException, cacheType, downloadURL in
            if let downloadException = downloadException {
                print("error downloading the image: \(downloadException.localizedDescription)")
            } else {
                print("successfully downloaded the image: \(String(describing: downloadURL?.absoluteString))")
            }
        })
        cell.textLabel?.numberOfLines = 0
        cell.detailTextLabel?.numberOfLines = 0
        let backgroundView = UIView()
        backgroundView.backgroundColor = UIColor(named: "AudioLessonsCellHighlighted")
        cell.selectedBackgroundView = backgroundView
        cell.textLabel?.font = UIFont(name: "Helvetica-Bold", size: 14)
        cell.detailTextLabel?.font = UIFont(name: "Helvetica", size: 12)
        return cell
    }

    func tableView(_ tableView: UITableView, heightForRowAt indexPath: IndexPath) -> CGFloat {
        return 100
    }

    func tableView(_ tableView: UITableView, didSelectRowAt indexPath: IndexPath) {
        tableView.deselectRow(at: indexPath, animated: true)
        // present the player
        let position = indexPath.row
        //lessons
        guard let vc = storyboard?.instantiateViewController(identifier: "AudioPlayer") as? AudioPlayerViewController else {
            return
        }
        vc.paragraphs = viewModel.audios
        vc.position = position
        present(vc, animated: true)
    }

    func tableView(_ tableView: UITableView, didHighlightRowAt indexPath: IndexPath) {
        if let cell = tableView.cellForRow(at: indexPath) {
            cell.contentView.backgroundColor = UIColor(named: "AudioLessonsHighlighted")
            cell.textLabel?.highlightedTextColor = UIColor(named: "textHighlighted")
            cell.detailTextLabel?.highlightedTextColor = UIColor(named: "textHighlighted")
        }
    }

    func tableView(_ tableView: UITableView, didUnhighlightRowAt indexPath: IndexPath) {
        if let cell = tableView.cellForRow(at: indexPath) {
            cell.contentView.backgroundColor = nil
        }
    }
}
```

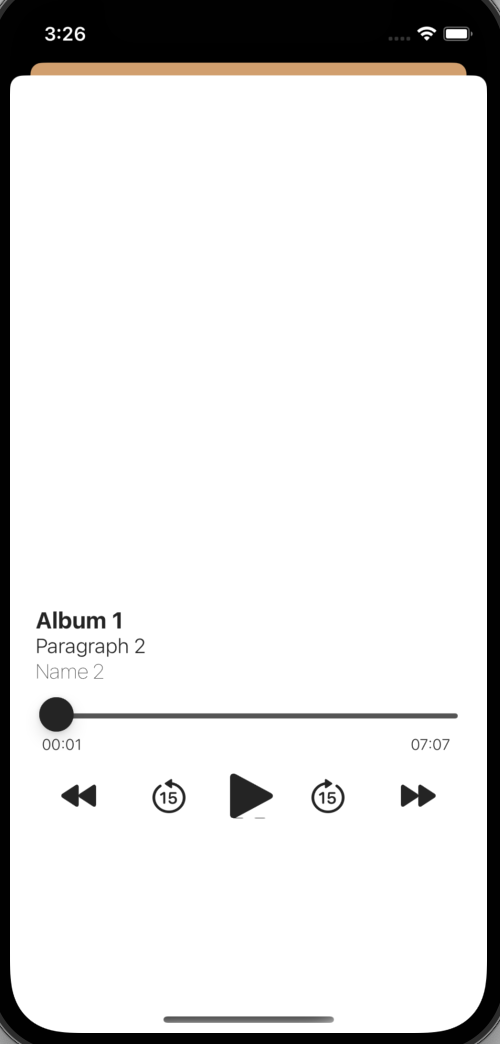

This is the AudioPlayerViewController. It successfully plays the audio from the link fetched from Firebase and shows all the labels, but it doesn't display the image. The image should also be downloaded from Firebase, according to the row the user selected. I managed to download and display the images in the table view controller, but I can't display the image in AudioPlayerViewController. My question is: how do I display the image from Firebase in `albumImageView` in AudioPlayerViewController? I've included a screenshot.

```swift
import UIKit
import AVFoundation
import MediaPlayer
import AVKit
import Combine
import SDWebImage

class AudioPlayerViewController: UIViewController {

    private var viewModel = AudiosViewModel()

    public var position: Int = 0

    public var paragraphs: [Audio] = []

    let placeHolderImage = UIImage(named: "placeHolderImage")

    @IBOutlet var holder: UIView!

    var player: AVPlayer?
    var playerItem: AVPlayerItem?
    var isSeekInProgress = false
    var chaseTime = CMTime.zero
    fileprivate let seekDuration: Float64 = 15
    var playerCurrentItemStatus: AVPlayerItem.Status = .unknown

    // User Interface elements

    private let albumImageView: UIImageView = {
        let imageView = UIImageView()
        imageView.contentMode = .scaleAspectFill
        return imageView
    }()

    private let paragraphNumberLabel: UILabel = {
        let label = UILabel()
        label.textAlignment = .left
        label.font = .systemFont(ofSize: 16, weight: .light)
        label.numberOfLines = 0 // allow line wrap
        label.textColor = UIColor(named: "PlayerColors")
        return label
    }()

    private let albumNameLabel: UILabel = {
        let label = UILabel()
        label.textAlignment = .left
        label.font = .systemFont(ofSize: 18, weight: .bold)
        label.numberOfLines = 0 // allow line wrap
        label.textColor = UIColor(named: "PlayerColors")
        return label
    }()

    private let songNameLabel: UILabel = {
        let label = UILabel()
        label.textAlignment = .left
        label.font = .systemFont(ofSize: 16, weight: .ultraLight)
        label.numberOfLines = 0 // allow line wrap
        label.textColor = UIColor(named: "PlayerColors")
        return label
    }()

    private let elapsedTimeLabel: UILabel = {
        let label = UILabel()
        label.textAlignment = .left
        label.font = .systemFont(ofSize: 12, weight: .light)
        label.textColor = UIColor(named: "PlayerColors")
        label.text = "00:00"
        label.numberOfLines = 0
        return label
    }()

    private let remainingTimeLabel: UILabel = {
        let label = UILabel()
        label.textAlignment = .left
        label.font = .systemFont(ofSize: 12, weight: .light)
        label.textColor = UIColor(named: "PlayerColors")
        label.text = "00:00"
        label.numberOfLines = 0
        return label
    }()

    private let playbackSlider: UISlider = {
        let v = UISlider()
        v.addTarget(AudioPlayerViewController.self, action: #selector(progressScrubbed(_:)), for: .valueChanged)
        v.minimumTrackTintColor = UIColor.lightGray
        v.maximumTrackTintColor = UIColor.darkGray
        v.thumbTintColor = UIColor(named: "PlayerColors")
        v.minimumValue = 0
        v.isContinuous = true
        return v
    }()

    let playPauseButton = UIButton()

    override func viewDidLoad() {
        super.viewDidLoad()
        let panGesture = UIPanGestureRecognizer(target: self, action: #selector(panGesture(gesture:)))
        self.playbackSlider.addGestureRecognizer(panGesture)
        let audioSession = AVAudioSession.sharedInstance()
        do {
            try audioSession.setCategory(AVAudioSession.Category.playback)
        } catch {
            print(error)
        }
    }

    override func viewDidLayoutSubviews() {
        super.viewDidLayoutSubviews()
        if holder.subviews.count == 0 {
            configure()
        }
    }

    func configure() {
        // set up player
        let song = paragraphs[position]
        let url = URL(string: song.trackURL)
        let playerItem: AVPlayerItem = AVPlayerItem(url: url!)
        do {
            try AVAudioSession.sharedInstance().setMode(.default)
            try AVAudioSession.sharedInstance().setActive(true, options: .notifyOthersOnDeactivation)
            guard url != nil else {
                print("urls string is nil")
                return
            }
            player = AVPlayer(playerItem: playerItem)
            let duration : CMTime = playerItem.asset.duration
            let seconds : Float64 = CMTimeGetSeconds(duration)
            remainingTimeLabel.text = self.stringFromTimeInterval(interval: seconds)
            let currentDuration : CMTime = playerItem.currentTime()
            let currentSeconds : Float64 = CMTimeGetSeconds(currentDuration)
            elapsedTimeLabel.text = self.stringFromTimeInterval(interval: currentSeconds)
            playbackSlider.maximumValue = Float(seconds)
            player!.addPeriodicTimeObserver(forInterval: CMTimeMakeWithSeconds(1, preferredTimescale: 1), queue: DispatchQueue.main) { (CMTime) -> Void in
                if self.player!.currentItem?.status == .readyToPlay {
                    let time : Float64 = CMTimeGetSeconds(self.player!.currentTime());
                    self.playbackSlider.value = Float(time)
                    self.elapsedTimeLabel.text = self.stringFromTimeInterval(interval: time)
                }
                let playbackLikelyToKeepUp = self.player?.currentItem?.isPlaybackLikelyToKeepUp
                if playbackLikelyToKeepUp == false {
                    print("IsBuffering")
                    self.playPauseButton.isHidden = true
                } else {
                    // stop the activity indicator
                    print("Buffering completed")
                    self.playPauseButton.isHidden = false
                }
            }
            playbackSlider.addTarget(self, action: #selector(AudioPlayerViewController.progressScrubbed(_:)), for: .valueChanged)
            self.view.addSubview(playbackSlider)
            //subroutine used to keep track of current location of time in audio file
            guard let player = player else {
                print("player is nil")
                return
            }
            player.play()
        } catch {
            print("error occurred")
        }

        // set up user interface elements

        //album cover
        albumImageView.frame = CGRect(x: 20,
                                      y: 20,
                                      width: holder.frame.size.width - 40,
                                      height: holder.frame.size.width - 40)
        albumImageView.image = UIImage(named: song.audioImageName)
        holder.addSubview(albumImageView)

        //Labels Song name, album, artist
        albumNameLabel.frame = CGRect(x: 20,
                                      y: holder.frame.size.height - 300,
                                      width: holder.frame.size.width - 40,
                                      height: 20)
        paragraphNumberLabel.frame = CGRect(x: 20,
                                            y: holder.frame.size.height - 280,
                                            width: holder.frame.size.width-40,
                                            height: 20)
        songNameLabel.frame = CGRect(x: 20,
                                     y: holder.frame.size.height - 260,
                                     width: holder.frame.size.width-40,
                                     height: 20)
        playbackSlider.frame = CGRect(x: 20,
                                      y: holder.frame.size.height - 235,
                                      width: holder.frame.size.width-40,
                                      height: 40)
        elapsedTimeLabel.frame = CGRect(x: 25,
                                        y: holder.frame.size.height - 200,
                                        width: holder.frame.size.width-40,
                                        height: 15)
        remainingTimeLabel.frame = CGRect(x: holder.frame.size.width-60,
                                          y: holder.frame.size.height - 200,
                                          width: holder.frame.size.width-20,
                                          height: 15)
        songNameLabel.text = song.name
        albumNameLabel.text = song.albumName
        paragraphNumberLabel.text = song.paragraphNumber
        holder.addSubview(songNameLabel)
        holder.addSubview(albumNameLabel)
        holder.addSubview(paragraphNumberLabel)
        holder.addSubview(elapsedTimeLabel)
        holder.addSubview(remainingTimeLabel)

        //Player controls
        let nextButton = UIButton()
        let backButton = UIButton()
        let seekForwardButton = UIButton()
        let seekBackwardButton = UIButton()

        //frames of buttons
        playPauseButton.frame = CGRect(x: (holder.frame.size.width - 40) / 2.0,
                                       y: holder.frame.size.height - 172.5,
                                       width: 40,
                                       height: 40)
        nextButton.frame = CGRect(x: holder.frame.size.width - 70,
                                  y: holder.frame.size.height - 162.5,
                                  width: 30,
                                  height: 20)
        backButton.frame = CGRect(x: 70 - 30,
                                  y: holder.frame.size.height - 162.5,
                                  width: 30,
                                  height: 20)
        seekForwardButton.frame = CGRect(x: holder.frame.size.width - 140,
                                         y: holder.frame.size.height - 167.5,
                                         width: 30,
                                         height: 30)
        seekBackwardButton.frame = CGRect(x: 110,
                                          y: holder.frame.size.height - 167.5,
                                          width: 30,
                                          height: 30)
        let volumeView = MPVolumeView(frame: CGRect(x: 20,
                                                    y: holder.frame.size.height - 80,
                                                    width: holder.frame.size.width-40,
                                                    height: 30))
        holder.addSubview(volumeView)

        //actions of buttons
        playPauseButton.addTarget(self, action: #selector(didTapPlayPauseButton), for: .touchUpInside)
        backButton.addTarget(self, action: #selector(didTapBackButton), for: .touchUpInside)
        nextButton.addTarget(self, action: #selector(didTapNextButton), for: .touchUpInside)
        seekForwardButton.addTarget(self, action: #selector(seekForwardButtonTapped), for: .touchUpInside)
        seekBackwardButton.addTarget(self, action: #selector(seekBackwardButtonTapped), for: .touchUpInside)

        //styling of buttons
        playPauseButton.setBackgroundImage(UIImage(systemName: "pause.fill"), for: .normal)
        nextButton.setBackgroundImage(UIImage(systemName: "forward.fill"), for: .normal)
        backButton.setBackgroundImage(UIImage(systemName: "backward.fill"), for: .normal)
        seekForwardButton.setBackgroundImage(UIImage(systemName: "goforward.15"), for: .normal)
        seekBackwardButton.setBackgroundImage(UIImage(systemName: "gobackward.15"), for: .normal)
        playPauseButton.tintColor = UIColor(named: "PlayerColors")
        nextButton.tintColor = UIColor(named: "PlayerColors")
        backButton.tintColor = UIColor(named: "PlayerColors")
        seekForwardButton.tintColor = UIColor(named: "PlayerColors")
        seekBackwardButton.tintColor = UIColor(named: "PlayerColors")
        holder.addSubview(playPauseButton)
        holder.addSubview(nextButton)
        holder.addSubview(backButton)
        holder.addSubview(seekForwardButton)
        holder.addSubview(seekBackwardButton)
    }

    @objc func panGesture(gesture: UIPanGestureRecognizer) {
        let currentPoint = gesture.location(in: playbackSlider)
        let percentage = currentPoint.x/playbackSlider.bounds.size.width;
        let delta = Float(percentage) * (playbackSlider.maximumValue - playbackSlider.minimumValue)
        let value = playbackSlider.minimumValue + delta
        playbackSlider.setValue(value, animated: true)
    }

    @objc func progressScrubbed(_ playbackSlider: UISlider!) {
        let seconds : Int64 = Int64(playbackSlider.value)
        let targetTime:CMTime = CMTimeMake(value: seconds, timescale: 1)
        player!.seek(to: targetTime)
        if player!.rate == 0 {
            player?.play()
        }
    }

    func setupNowPlaying() {
        // Define Now Playing Info
        var nowPlayingInfo = [String : Any]()
        nowPlayingInfo[MPMediaItemPropertyTitle] = "Unstoppable"
        if let image = UIImage(named: "artist") {
            nowPlayingInfo[MPMediaItemPropertyArtwork] = MPMediaItemArtwork(boundsSize: image.size) { size in
                return image
            }
        }
        nowPlayingInfo[MPNowPlayingInfoPropertyElapsedPlaybackTime] = player?.currentTime
        nowPlayingInfo[MPMediaItemPropertyPlaybackDuration] = playerItem?.duration
        nowPlayingInfo[MPNowPlayingInfoPropertyPlaybackRate] = player?.rate
        // Set the metadata
        MPNowPlayingInfoCenter.default().nowPlayingInfo = nowPlayingInfo
    }

    func updateNowPlaying(isPause: Bool) {
        // Define Now Playing Info
        var nowPlayingInfo = MPNowPlayingInfoCenter.default().nowPlayingInfo!
        nowPlayingInfo[MPNowPlayingInfoPropertyElapsedPlaybackTime] = player?.currentTime
        nowPlayingInfo[MPNowPlayingInfoPropertyPlaybackRate] = isPause ? 0 : 1
        // Set the metadata
        MPNowPlayingInfoCenter.default().nowPlayingInfo = nowPlayingInfo
    }

    func setupNotifications() {
        let notificationCenter = NotificationCenter.default
        notificationCenter.addObserver(self,
                                       selector: #selector(handleInterruption),
                                       name: AVAudioSession.interruptionNotification,
                                       object: nil)
        notificationCenter.addObserver(self,
                                       selector: #selector(handleRouteChange),
                                       name: AVAudioSession.routeChangeNotification,
                                       object: nil)
    }

    @objc func handleRouteChange(notification: Notification) {
        guard let userInfo = notification.userInfo,
              let reasonValue = userInfo[AVAudioSessionRouteChangeReasonKey] as? UInt,
              let reason = AVAudioSession.RouteChangeReason(rawValue:reasonValue) else {
            return
        }
        switch reason {
        case .newDeviceAvailable:
            let session = AVAudioSession.sharedInstance()
            for output in session.currentRoute.outputs where output.portType == AVAudioSession.Port.headphones {
                print("headphones connected")
                DispatchQueue.main.sync {
                    player?.play()
                }
                break
            }
        case .oldDeviceUnavailable:
            if let previousRoute =
                userInfo[AVAudioSessionRouteChangePreviousRouteKey] as? AVAudioSessionRouteDescription {
                for output in previousRoute.outputs where output.portType == AVAudioSession.Port.headphones {
                    print("headphones disconnected")
                    DispatchQueue.main.sync {
                        player?.pause()
                    }
                    break
                }
            }
        default: ()
        }
    }

    @objc func handleInterruption(notification: Notification) {
        guard let userInfo = notification.userInfo,
              let typeValue = userInfo[AVAudioSessionInterruptionTypeKey] as? UInt,
              let type = AVAudioSession.InterruptionType(rawValue: typeValue) else {
            return
        }
        if type == .began {
            print("Interruption began")
            // Interruption began, take appropriate actions
        }
        else if type == .ended {
            if let optionsValue = userInfo[AVAudioSessionInterruptionOptionKey] as? UInt {
                let options = AVAudioSession.InterruptionOptions(rawValue: optionsValue)
                if options.contains(.shouldResume) {
                    // Interruption Ended - playback should resume
                    print("Interruption Ended - playback should resume")
                    player?.play()
                } else {
                    // Interruption Ended - playback should NOT resume
                    print("Interruption Ended - playback should NOT resume")
                }
            }
        }
    }

    @objc func didTapPlayPauseButton() {
        if player?.timeControlStatus == .playing {
            //pause
            player?.pause()
            //show play button
            playPauseButton.setBackgroundImage(UIImage(systemName: "play.fill"), for: .normal)
            //shrink image
            UIView.animate(withDuration: 0.2, animations: {
                self.albumImageView.frame = CGRect(x: 50,
                                                   y: 50,
                                                   width: self.holder.frame.size.width - 100,
                                                   height: self.holder.frame.size.width - 100)
            })
        }
        else {
            //play
            player?.play()
            //show pause button
            playPauseButton.setBackgroundImage(UIImage(systemName: "pause.fill"), for: .normal)
            //increase image size
            UIView.animate(withDuration: 0.4, animations: {
                self.albumImageView.frame = CGRect(x: 20,
                                                   y: 20,
                                                   width: self.holder.frame.size.width - 40,
                                                   height: self.holder.frame.size.width - 40)
            })
        }
    }

    private func setupView() {
        setupConstraints()
    }

    private func setupConstraints() {
        NSLayoutConstraint.activate([
            holder.leadingAnchor.constraint(equalTo: view.leadingAnchor),
            holder.trailingAnchor.constraint(equalTo: view.trailingAnchor),
            holder.topAnchor.constraint(equalTo: view.topAnchor),
            holder.bottomAnchor.constraint(equalTo: view.bottomAnchor),
        ])
    }

    override func viewDidAppear(_ animated: Bool) {
        super.viewDidAppear(animated)
        player?.play()
        UIApplication.shared.isIdleTimerDisabled = true
    }

    override func viewDidDisappear(_ animated: Bool) {
        super.viewDidDisappear(animated)
        player?.pause()
        UIApplication.shared.isIdleTimerDisabled = false
    }
}
```

This is the `Audio` struct:

```swift
import Foundation

struct Audio {
    let name: String
    let albumName: String
    let paragraphNumber: String
    let audioImageName: String
    let trackURL: String
}
```

This is `AudiosViewModel`:

```swift
import Foundation
import FirebaseFirestore

class AudiosViewModel: ObservableObject {

    @Published var audios = [Audio]()

    private var db = Firestore.firestore()

    func fetchData() {
        db.collection("audios").addSnapshotListener { [self] (querySnapshot, error) in
            guard let documents = querySnapshot?.documents else {
                print("No Documents")
                return
            }
            self.audios = documents.map { (queryDocumentSnapshot) -> Audio in
                let data = queryDocumentSnapshot.data()
                let name = data["name"] as? String ?? ""
                let albumName = data["albumName"] as? String ?? ""
                let audioImageName = data["audioImageName"] as? String ?? ""
                let paragraphNumber = data["paragraphNumber"] as? String ?? ""
                let trackURL = data["trackURL"] as? String ?? ""
                print(data)
                return Audio(name: name, albumName: albumName, paragraphNumber: paragraphNumber, audioImageName: audioImageName, trackURL: trackURL)
            }
        }
    }
}
```

Answer:

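In the model that populates the cells with data (the `Audio` struct), add an optional image property. `UIImage` comes from UIKit, so that file also needs `import UIKit`.

```swift
var image: UIImage?
```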

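In `cellForRowAt`, where you fetch the image, save it to the view model in addition to setting it on the cell. Because `Audio` is a struct, `var song = viewModel.audios[indexPath.row]` is a copy and setting `song.image` would not change the array, so write through the array from inside the `sd_setImage` completion handler. Only store the image if none is stored yet: `audios` is `@Published` and the table reloads on every change, so an unconditional write would keep triggering reloads.

```swift
let row = indexPath.row
if let downloadedImage = downloadedImage, row < self.viewModel.audios.count, self.viewModel.audios[row].image == nil {
    self.viewModel.audios[row].image = downloadedImage
}
```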
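One caveat with storing the image this way: `Audio` is a struct, so `var song = viewModel.audios[indexPath.row]` is an independent copy, and assigning to `song.image` leaves the array untouched. The sketch below demonstrates this with Foundation only, so it runs without UIKit. `FakeImage` (for `UIImage`), `AudioItem` (for `Audio`), `FakeViewModel` (for `AudiosViewModel`, where a `didSet` counter stands in for the `@Published` sink that reloads the table) and `store(_:at:in:)` are all hypothetical. It also assumes each row's image should be written only once, since the SDWebImage completion handler runs again when a reloaded cell gets the image from the cache.

```swift
import Foundation

// Hypothetical stand-in for UIImage so the sketch runs without UIKit.
struct FakeImage: Equatable {
    let name: String
}

// Hypothetical, trimmed-down version of Audio with the new optional image.
struct AudioItem {
    let name: String
    var image: FakeImage?
}

// Hypothetical stand-in for AudiosViewModel: didSet plays the role of
// @Published, whose sink reloads the table on every change to `audios`.
final class FakeViewModel {
    var reloadCount = 0
    var audios: [AudioItem] = [] {
        didSet { reloadCount += 1 }
    }
}

// What the completion handler would do: write through the array,
// and only if the row exists and has no image stored yet.
func store(_ image: FakeImage, at row: Int, in viewModel: FakeViewModel) {
    guard row < viewModel.audios.count, viewModel.audios[row].image == nil else { return }
    viewModel.audios[row].image = image
}

let viewModel = FakeViewModel()
viewModel.audios = [AudioItem(name: "Lesson 1", image: nil)]

// Mutating a copy does not touch the array element.
var song = viewModel.audios[0]
song.image = FakeImage(name: "cover1")
print(viewModel.audios[0].image == nil) // true

store(FakeImage(name: "cover1"), at: 0, in: viewModel)
store(FakeImage(name: "cover1"), at: 0, in: viewModel) // cache hit after a reload: ignored
print(viewModel.audios[0].image?.name ?? "nil") // cover1
print(viewModel.reloadCount) // 2
```

The second `store` call is skipped by the `guard`, so the only "reloads" counted are the initial assignment and the single image write.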

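Add an image property to `AudioPlayerViewController`. Then, in `configure()`, replace `albumImageView.image = UIImage(named: song.audioImageName)` with `albumImageView.image = mainImage`: `UIImage(named:)` looks up an image bundled with the app by name, so it returns `nil` for a download URL, which is why the album image stays empty.

```swift
var mainImage = UIImage(named: "placeHolderImage")
```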

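In `didSelectRowAt`, pass the image from the model to the player before presenting it, keeping the placeholder if that row's image hasn't finished downloading yet.

```swift
vc.mainImage = viewModel.audios[position].image ?? vc.mainImage
present(vc, animated: true)
```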

There is no need to download the image again if the previous view controller has just downloaded it. Simply extend your model to hold the image and pass it forward when it is time to present the player.
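The hand-off in `didSelectRowAt` can be modelled without UIKit. In this sketch `FakeImage`, `AudioItem` and `PlayerScreen` are hypothetical stand-ins for `UIImage`, `Audio` and `AudioPlayerViewController`, and `makePlayer(for:in:)` is a hypothetical function playing the role of the code that instantiates and configures the player. It assumes the player should keep its placeholder when the selected row's image has not been downloaded yet.

```swift
import Foundation

// Hypothetical stand-ins for UIImage and Audio so the sketch runs without UIKit.
struct FakeImage: Equatable {
    let name: String
}

struct AudioItem {
    let name: String
    var image: FakeImage?
}

// Hypothetical stand-in for AudioPlayerViewController.
final class PlayerScreen {
    var paragraphs: [AudioItem] = []
    var position = 0
    // Mirrors `var mainImage = UIImage(named: "placeHolderImage")`.
    var mainImage: FakeImage? = FakeImage(name: "placeHolderImage")
}

// Plays the role of didSelectRowAt: configure the player before presenting it.
func makePlayer(for position: Int, in audios: [AudioItem]) -> PlayerScreen {
    let vc = PlayerScreen()
    vc.paragraphs = audios
    vc.position = position
    // Keep the placeholder if this row's image has not been downloaded yet.
    vc.mainImage = audios[position].image ?? vc.mainImage
    return vc
}

let audios = [
    AudioItem(name: "Lesson 1", image: FakeImage(name: "cover1")),
    AudioItem(name: "Lesson 2", image: nil) // still downloading
]

print(makePlayer(for: 0, in: audios).mainImage?.name ?? "nil") // cover1
print(makePlayer(for: 1, in: audios).mainImage?.name ?? "nil") // placeHolderImage
```

In the real app, a row whose image is still missing could instead be loaded in the player with SDWebImage's `sd_setImage(with:placeholderImage:)` on `albumImageView`, which would normally be served from SDWebImage's cache if the cell already fetched it.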