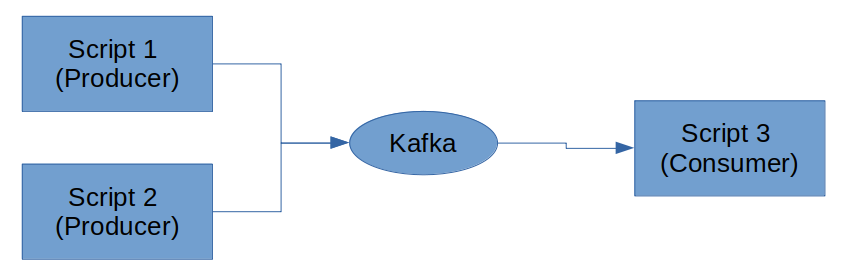

I have a project in which two scripts generate data 24/7 and send it to Kafka. At the same time, one or more consumer scripts read the data from Kafka and process it.

My question is about how I should deploy this application, as I am quite new to Docker. I have two ideas in mind, but I am not sure which one I should use (or whether some other approach would be better):

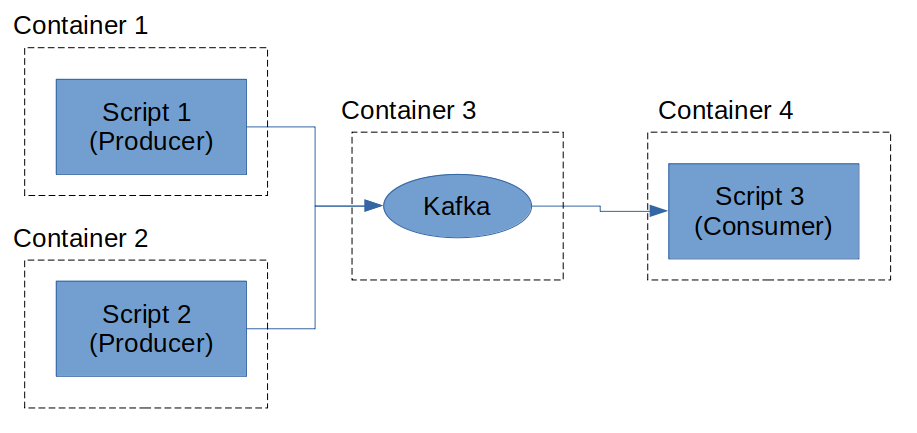

Pros:

- Independent containers.

- Easier to scale.

Cons:

- More difficult to manage.

- More use of resources.

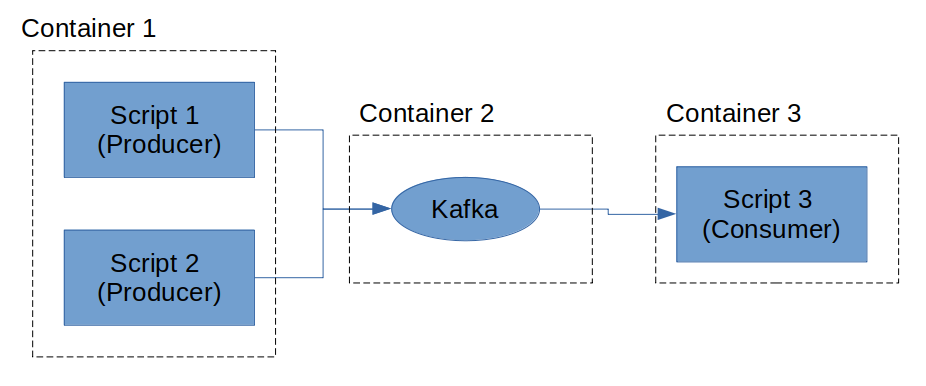

Pros:

- Less use of resources.

Cons:

- More difficult to scale (as script 1 and 2 are in the same container).

- More use of resources.

P.S.: Bonus points if somebody can also tell me whether keeping the consumer script (Script3) in its own container makes sense, given that I plan to scale it as the number of producers increases.

Answer:

When trying to get something working, a useful maxim is:

> Premature optimization is the root of all evil.

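The right answer will depend on exactly how the two producer scripts work. But in general, Docker's convention is that each container runs a single main service process. So the four-container approach, with each script in its own container, is where you should start. Merge the producers into one container only if resource use turns out to be a real problem.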
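To make the one-process-per-container idea concrete, here is a minimal sketch of what one producer script could look like when it is written to be the single long-running process of its own container. Everything named here is an assumption for illustration: the environment variables `KAFKA_BOOTSTRAP_SERVERS`, `KAFKA_TOPIC` and `PRODUCE_INTERVAL_SECONDS`, the default topic `script1-data`, the hostname `kafka`, and the placeholder `generate_record`. The real scripts may look quite different.

```python
import os
import time
from dataclasses import dataclass
from typing import Any, Mapping, Optional


@dataclass(frozen=True)
class Settings:
    bootstrap_servers: str   # address of the Kafka container (hypothetical default below)
    topic: str               # topic this producer writes to (hypothetical name)
    interval_seconds: float  # pause between generated records


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read per-container configuration from environment variables.

    The variable names are assumptions for this sketch, not a Docker or Kafka convention.
    """
    return Settings(
        bootstrap_servers=env.get("KAFKA_BOOTSTRAP_SERVERS", "kafka:9092"),
        topic=env.get("KAFKA_TOPIC", "script1-data"),
        interval_seconds=float(env.get("PRODUCE_INTERVAL_SECONDS", "1.0")),
    )


def generate_record() -> dict:
    """Placeholder for whatever Script 1 actually generates."""
    return {"source": "script1", "timestamp": time.time()}


def run(producer: Any, settings: Settings, max_messages: Optional[int] = None) -> int:
    """Send records until max_messages is reached (forever if it is None).

    `producer` is any object with a `send(topic, value)` method.
    """
    sent = 0
    while max_messages is None or sent < max_messages:
        producer.send(settings.topic, generate_record())
        sent += 1
        time.sleep(settings.interval_seconds)
    return sent
```

In a real container, the entrypoint would build a client and call `run(producer, load_settings())`. With the kafka-python package, for example, that client could be `KafkaProducer(bootstrap_servers=settings.bootstrap_servers, value_serializer=...)`, where the serializer turns each dict into bytes. Because all configuration comes from the environment, the same image can be started several times with different settings, which is what makes the independent-container layout easy to scale.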