Map1. Put (1100);

Map1. Put (2150);

Map1. Put (3120);

HashMap

Map2. Put (2200);

Map2. Put (7, 20);

Map2. Put (3,66);

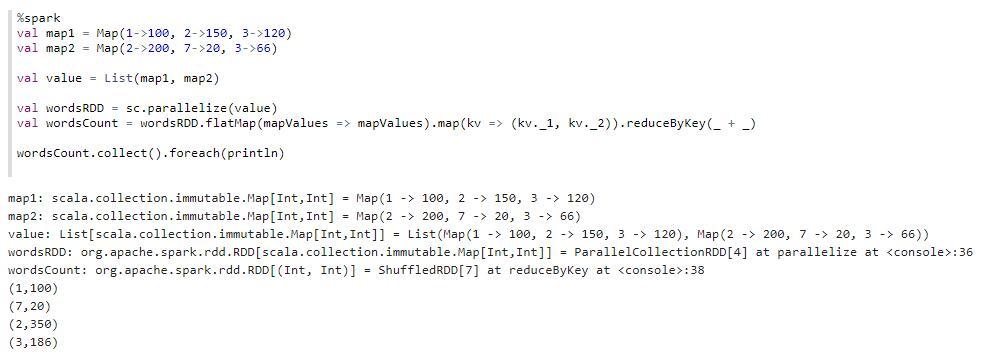

//may still has a lot of the map, and the key value is likely to be repeated, I put the map in the list

List

Then I use sparkcontext parallelize method how to word count?

CodePudding user response:

It is not convenient for export, the screenshots to see ~ ~ Scala version of the directly, instead of the Java version of the workload is not big,