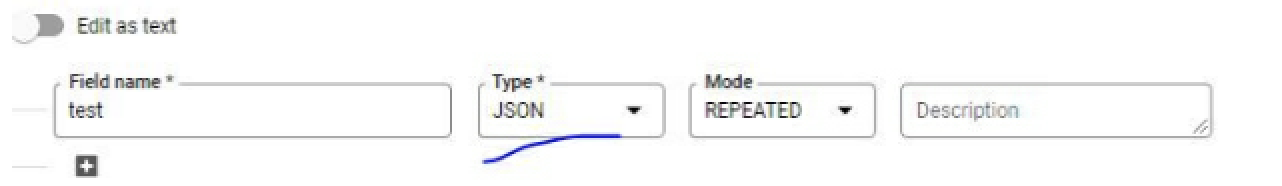

I have a column of type JSON in my BigQuery schema definition. I want to write to it from a Java Spark pipeline, but I can't find a way to do so.

If I create a struct from the JSON, it results in a RECORD type.

And if I use `to_json`, as below, the column is converted to a STRING type.

```java
import org.apache.spark.sql.functions;
dataframe = dataframe.withColumn("JSON_COLUMN", functions.to_json(functions.col("JSON_COLUMN")));
```

I know BigQuery supports JSON columns, but is there currently any way to write to them with Java Spark?

Answer:

As @DavidRabinowitz mentioned in the comments, support for writing JSON-type data to BigQuery with the spark-bigquery-connector will be released soon.
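Until that support is available, one possible workaround (a sketch under assumptions, not a feature of the connector) is to let Spark write the `to_json` output into a staging table where the column is a plain STRING, and then copy the rows into the real table using BigQuery's `PARSE_JSON` SQL function. The hypothetical helper below only builds that SQL statement. The class name `ParseJsonWorkaround`, the table names, and the `ID` column are illustrative placeholders, and actually running the statement (for example with `bq query --use_legacy_sql=false` or the BigQuery Java client) is not shown.

```java
import java.util.List;
import java.util.regex.Pattern;

/**
 * Hypothetical helper (not part of spark-bigquery-connector): builds a BigQuery
 * SQL statement that copies rows from a staging table, where Spark wrote the
 * JSON as a STRING column, into a target table whose column has type JSON.
 */
public class ParseJsonWorkaround {

    // Conservative check so names can be wrapped in backticks without escaping.
    private static final Pattern IDENTIFIER = Pattern.compile("[A-Za-z0-9_.-]+");

    public static String quote(String identifier) {
        if (!IDENTIFIER.matcher(identifier).matches()) {
            throw new IllegalArgumentException("Unsupported identifier: " + identifier);
        }
        return "`" + identifier + "`";
    }

    /**
     * @param targetTable  table that has the JSON column, e.g. "my_dataset.events"
     * @param stagingTable table Spark wrote to, with the JSON stored as STRING
     * @param otherColumns columns copied unchanged
     * @param jsonColumn   STRING column in staging that becomes JSON in the target
     */
    public static String buildInsertSql(String targetTable, String stagingTable,
                                        List<String> otherColumns, String jsonColumn) {
        StringBuilder cols = new StringBuilder();
        StringBuilder selects = new StringBuilder();
        for (String c : otherColumns) {
            cols.append(quote(c)).append(", ");
            selects.append(quote(c)).append(", ");
        }
        cols.append(quote(jsonColumn));
        // PARSE_JSON converts the JSON-formatted STRING into a BigQuery JSON value.
        selects.append("PARSE_JSON(").append(quote(jsonColumn)).append(")");
        return "INSERT INTO " + quote(targetTable) + " (" + cols + ")\n"
                + "SELECT " + selects + "\n"
                + "FROM " + quote(stagingTable);
    }

    public static void main(String[] args) {
        System.out.println(buildInsertSql("my_dataset.events",
                "my_dataset.events_staging", List.of("ID"), "JSON_COLUMN"));
    }
}
```

Running `main` prints an `INSERT INTO ... SELECT ..., PARSE_JSON(...) FROM ...` statement. Keeping the conversion in BigQuery SQL means the Spark side only has to produce valid JSON strings, which `to_json` already does; the cost is an extra staging table and a second step after each Spark write.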

Updates on BigQuery features, including this one, are published in this document.

Posting this answer as a community wiki for the benefit of others who may encounter this use case in the future.

Feel free to edit this answer to add more information.