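import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;

public class TestUtil {
    public static void main(String[] args) {
        // Run as "root" (presumably so the job can read from HDFS).
        System.setProperty("user.name", "root");
        // Connect to the standalone Spark master.
        SparkConf conf = new SparkConf().setAppName("Spark Java API learning")
                .setMaster("spark://211.87.227.79:7077");
        JavaSparkContext sc = new JavaSparkContext(conf);
        // Read the input file from HDFS and print its first line.
        JavaRDD<String> users = sc.textFile("hdfs://211.87.227.79:8020/input/wordcount.txt");
        System.out.println(users.first());
    }
}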

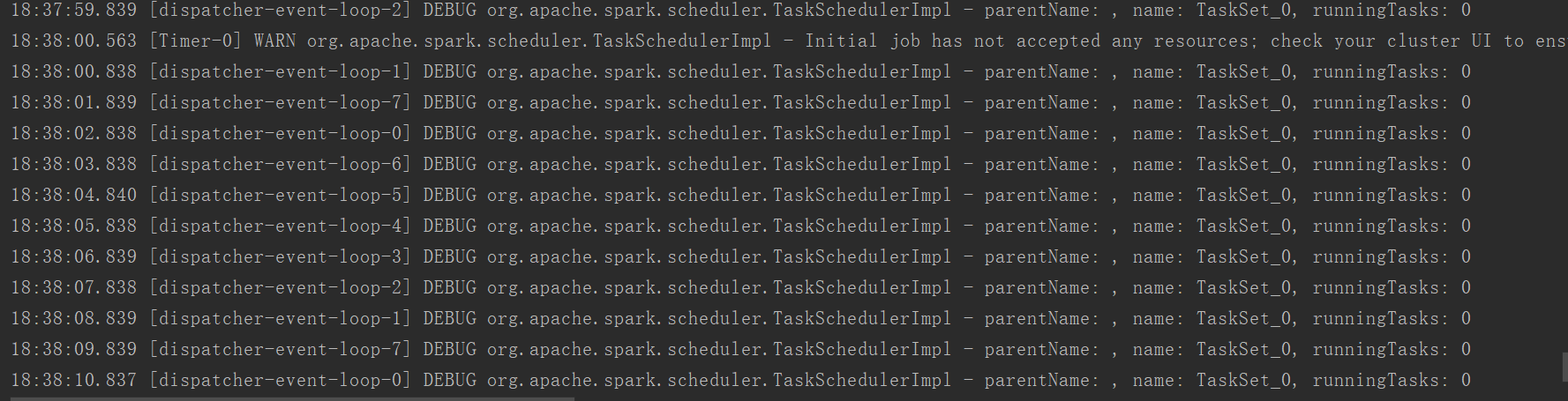

The Spark UI shows that the job was submitted, but the IDEA console keeps repeating this message:

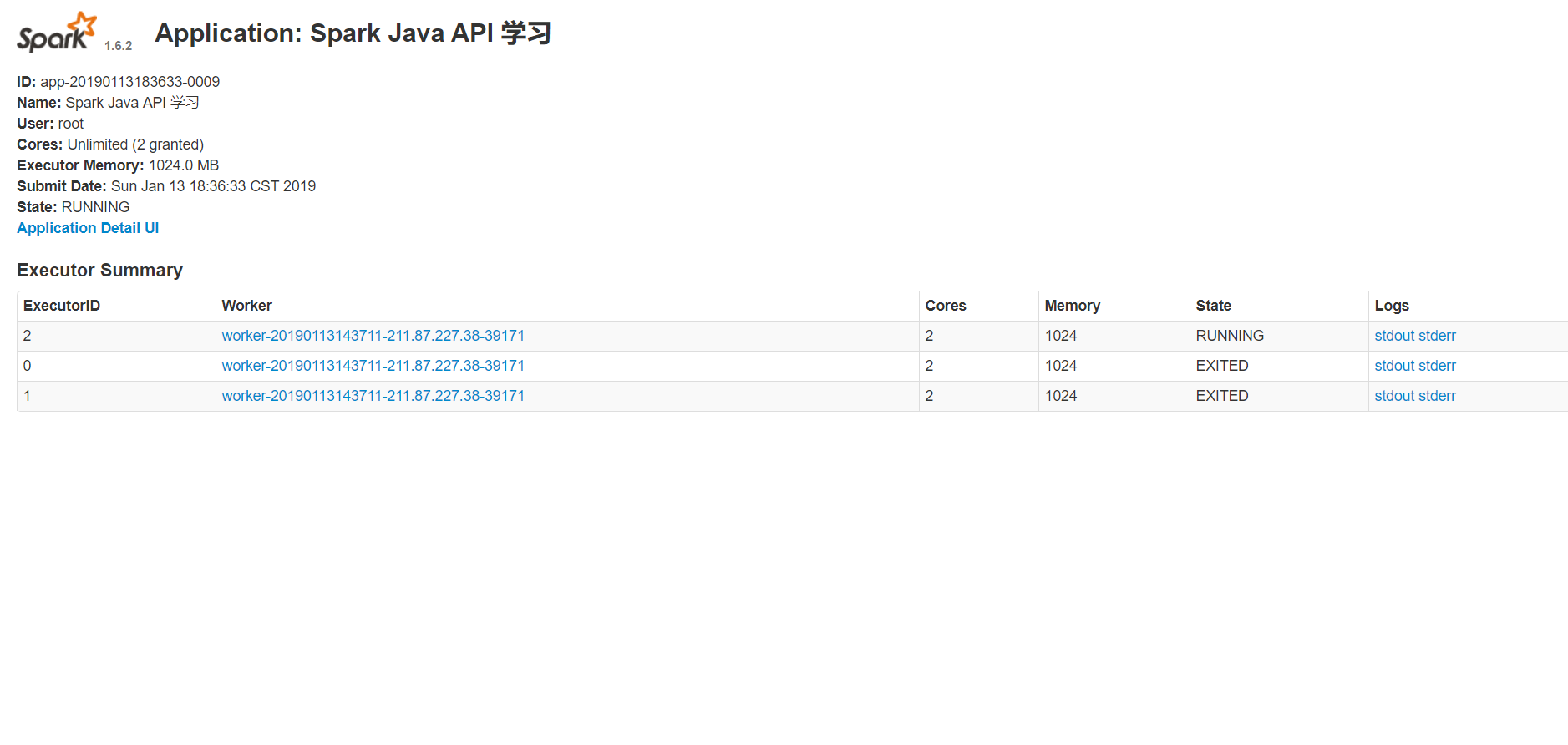

The Spark UI is as shown in the figure.

If any expert can solve this, I am willing to pay; just leave your contact details.

CodePudding user response:

I have an approach in mind. I haven't written anything similar myself, but I think it is feasible. Add me on QQ: 457259802. There is a solution and it will work, though it is not easy.

CodePudding user response:

If this is for a platform, the usual approach is to write a simple web system that accepts an uploaded jar package; the backend then submits the job to the Spark service address via REST.

CodePudding user response:

There are two cases to distinguish. Do you want your program submitted remotely and run distributed on the cluster, or do you simply want to use the cluster's resources while the program actually runs on your local machine? For the first case, look up the Spark on YARN run modes; one of them is remote, meaning your program is submitted to the cluster and runs there in a distributed fashion. The second case is simple: local[*].
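To make the two cases concrete, here is a minimal sketch that only builds the `spark-submit` argument list for each one; it does not launch anything. The helper name `SparkSubmitArgs`, the jar name `app.jar`, and the use of `TestUtil` as the main class are assumptions for illustration, not something from the original post.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: assembles spark-submit arguments, nothing more.
final class SparkSubmitArgs {

    /**
     * Builds the command line for one run mode.
     * deployMode may be null, in which case --deploy-mode is left out
     * (for example when the master is local[*]).
     */
    static List<String> build(String master, String deployMode,
                              String mainClass, String jarPath) {
        List<String> args = new ArrayList<>();
        args.add("spark-submit");
        args.add("--master");
        args.add(master);
        if (deployMode != null) {
            args.add("--deploy-mode");
            args.add(deployMode);
        }
        args.add("--class");
        args.add(mainClass);
        args.add(jarPath); // application jar goes last, before any app arguments
        return args;
    }
}
```

Calling `SparkSubmitArgs.build("yarn", "cluster", "TestUtil", "app.jar")` gives the remote, distributed case, while `SparkSubmitArgs.build("local[*]", null, "TestUtil", "app.jar")` gives the case where everything runs on the local machine.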

CodePudding user response:

Has the original poster found a solution?

CodePudding user response:

When debugging Spark remotely from a local machine, you can use .setJars to submit the jar package you built directly, which is very convenient.
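As a rough sketch of how this might look in practice: `SparkConf.setJars` accepts an array of jar paths to distribute to the cluster, so a small helper can collect the jars from the build output directory. The class name `JarCollector` and the `target` directory mentioned in the comment are assumptions (a typical Maven layout), not details from the original post.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.stream.Stream;

// Hypothetical helper: finds the jars to hand to SparkConf.setJars.
final class JarCollector {

    /** Returns the absolute paths of all *.jar files directly inside buildDir, sorted. */
    static String[] collectJars(Path buildDir) throws IOException {
        try (Stream<Path> entries = Files.list(buildDir)) {
            return entries
                    .filter(Files::isRegularFile)
                    .filter(p -> p.getFileName().toString().endsWith(".jar"))
                    .map(p -> p.toAbsolutePath().toString())
                    .sorted()
                    .toArray(String[]::new);
        }
    }

    // Usage with Spark on the classpath (directory name is an assumption):
    //   conf.setJars(JarCollector.collectJars(java.nio.file.Paths.get("target")));
}
```

The application has to be packaged into a jar before running from the IDE, since the executors load the job's classes from the jars that the driver ships to them.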