I'm creating an application that uses manual calibration to align two images. I'm trying to align them almost pixel perfectly, so I'm not relying on automatic calibration as it did not work the best for this scenario. I'm doing it manually by choosing pixels. However, the result is not what I hoped for, and I do not know where I'm making a mistake. I feel like the computed points should place the image precisely on top of the other one, but for some reason, it does not. What am I doing wrong?

Results of homography:

[[ 7.43200521e-01 -1.79170744e-02 -1.76782990e 02]

[ 1.00046389e-02 7.84106136e-01 -3.22549155e 01]

[ 5.10695284e-05 -8.48641135e-05 1.00000000e 00]]

Manually picked points: RGB:

[[ 277 708]

[1108 654]

[ 632 545]

[ 922 439]

[ 874 403]

[ 398 376]

[ 409 645]

[ 445 593]

[ 693 342]

[ 739 244]

[ 505 234]

[ 408 275]

[ 915 162]

[1094 126]

[ 483 115]

[ 951 366]

[ 517 355]]

Thermal:

[[ 8 549]

[634 491]

[282 397]

[496 318]

[461 289]

[113 269]

[122 479]

[148 438]

[325 236]

[360 162]

[194 156]

[121 188]

[484 106]

[621 67]

[178 62]

[515 261]

[203 253]]

def manual_calibration(self, rgb: cv2.UMat, thermal: cv2.UMat) -> Tuple[cv2.UMat, Tuple[int, int, int, int]]:

rgb_gray = cv2.cvtColor(rgb, cv2.COLOR_BGR2GRAY)

thermal_gray = cv2.cvtColor(thermal, cv2.COLOR_BGR2GRAY)

h_rgb, w_rgb = rgb_gray.shape

h_th, w_th = thermal_gray.shape

thermal_gray = cv2.copyMakeBorder(thermal_gray, 0, h_rgb - h_th, 0, 0, cv2.BORDER_CONSTANT, value=[0, 0, 0])

merged = cv2.hconcat((rgb_gray, thermal_gray))

self.merged = cv2.cvtColor(merged, cv2.COLOR_GRAY2RGB)

def point_validation(ix, iy):

if ix > w_rgb:

ix -= w_rgb

return ix, iy

self.points_left = np.array([])

self.points_right = np.array([])

self.label = True

def select_point(event, x, y, flags, param):

if event == cv2.EVENT_LBUTTONDOWN: # captures left button double-click

ix, iy = x, y

cv2.circle(img=self.merged, center=(x,y), radius=5, color=(0,255,0),thickness=-1)

ix, iy = point_validation(ix, iy)

pt = np.array([ix, iy])

if self.label:

# self.points_left = np.vstack((self.points_left, pt))

self.points_left = np.vstack((self.points_left, pt)) if self.points_left.size else pt

self.label = False

else:

# self.points_right = np.vstack((self.points_right, pt))

self.points_right = np.vstack((self.points_right, pt)) if self.points_right.size else pt

self.label = True

print(ix, iy)

cv2.namedWindow('calibration')

cv2.setMouseCallback('calibration', select_point)

while True:

cv2.imshow("calibration", self.merged)

if cv2.waitKey(20) & 0xFF == 27:

break

cv2.destroyAllWindows()

print(self.points_left)

print(self.points_right)

### EDIT NEW POINT VALIDATION

rgb_gray_check = rgb_gray

thermal_gray_check = thermal_gray

for point in self.points_left:

cv2.circle(img=rgb_gray_check, center=point, radius=5, color=(0,255,0),thickness=-1)

for point in self.points_right:

cv2.circle(img=thermal_gray_check, center=point, radius=5, color=(0,255,0),thickness=-1)

cv2.imshow('rgb', rgb_gray_check)

cv2.imshow('thermal', thermal_gray_check)

cv2.waitKey(0)

### EDIT NEW POINT VALIDATION

# Compute homography

# 0 - a regular method using all the points

# CV_RANSAC - RANSAC-based robust method

# CV_LMEDS - Least-Median robust method

matrix, mask = cv2.findHomography(self.points_left, self.points_right, 0)

print(matrix)

# matrix[0][3] = (w_th/2)

# matrix[1][3] = (h_th/2)

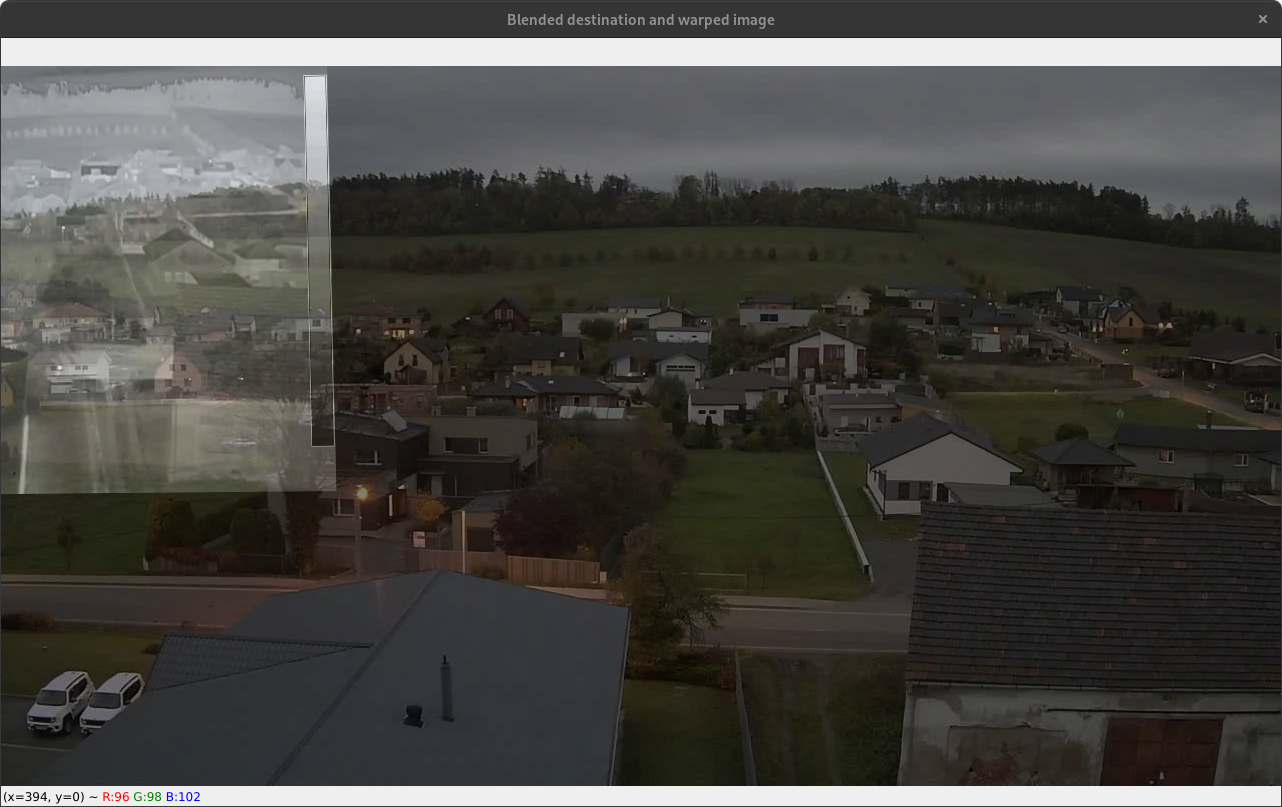

warp_src = cv2.warpPerspective(thermal, matrix, (rgb.shape[1], rgb.shape[0]))

alpha = 0.5

beta = (1.0 - alpha)

dst_warp_blended = cv2.addWeighted(rgb, alpha, warp_src, beta, 0.0)

cv2.imshow('Blended destination and warped image', dst_warp_blended)

cv2.waitKey(0)

CodePudding user response:

So I figured it out!

I accidentally flipped parameters in the findHomography function. So it should be

matrix, mask = cv2.findHomography(self.points_right, self.points_left, 0)

And, of course, delete the offset for the homography matrix.