I am trying to give one feature a higher weight than the others in an XGBoost classifier. Here is my code.

## Assign weight to High Net Worth feature

cols = list(train_X.columns.values)

# 0 - 1163 --Other Columns

# 1164 --High Net Worth

#Create an array of feature weights

other_col_wt = [1]*1164

high_net_worth_wt = [5]

feature_wt = other_col_wt + high_net_worth_wt

feature_weights = np.array(feature_wt)

# Initialize the XGBClassifier

xgboost = XGBClassifier(
    subsample=0.8,  # subsample = 0.8 ideal for big datasets
    silent=False,  # whether to print messages during construction
    colsample_bytree=0.4,  # subsample ratio of columns when constructing each tree
    gamma=10,  # minimum loss reduction required to make a further partition on a leaf node of the tree; regularisation parameter
    objective='binary:logistic',
    eval_metric=["auc"],
    feature_weights=feature_weights
)

# Hypertuning parameters

lr = [0.1,1] # learning_rate = shrinkage for updating the rules

ne = [100] # n_estimators = number of boosting rounds

md = [3,4,5] # max_depth = maximum tree depth for base learners

# Grid Search

clf = GridSearchCV(
    xgboost,
    {
        'learning_rate': lr,
        'n_estimators': ne,
        'max_depth': md
    },
    cv=5,
    return_train_score=False
)

# Fitting the model with the custom weights

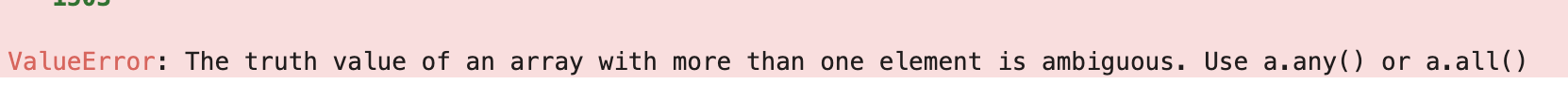

clf.fit(train_X,train_y, feature_weights = feature_weights)

clf.cv_results_

I went through the documentation.

Could anyone please help me see where I am going wrong? Thanks.

CodePudding user response:

I think you need to remove feature_weights from the XGBClassifier constructor and pass it only to fit. At least, this works when I try your example.