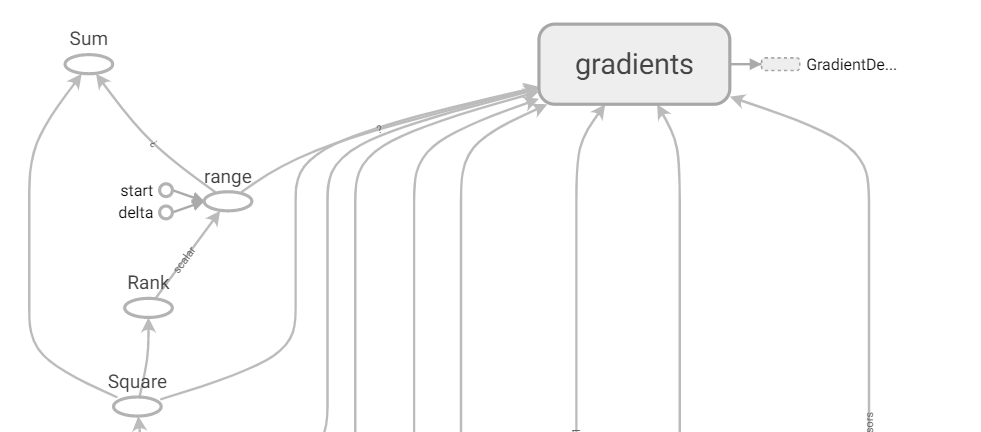

Why does the graph in this case show `range` feeding into the gradient rather than `Sum`? Shouldn't the loss be the sum?

CodePudding user response:

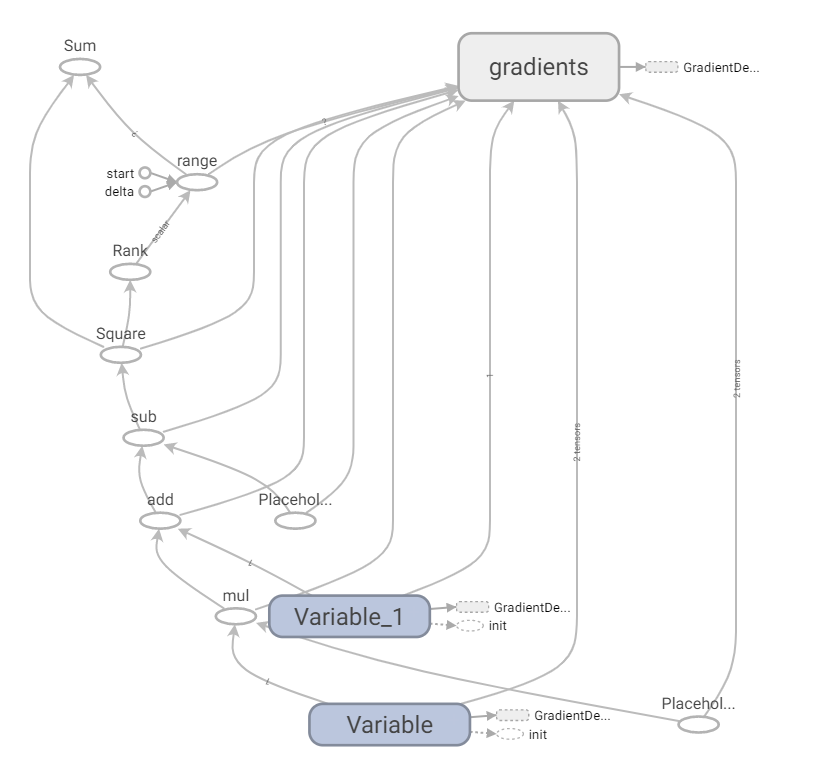

I posted the wrong second picture; this is the correct one.

The full code is as follows:

    import numpy as np
    import tensorflow.compat.v1 as tf
    tf.disable_v2_behavior()

    W = tf.Variable([.3], dtype=tf.float32)
    b = tf.Variable([-3], dtype=tf.float32)

    # Model input and output
    x = tf.placeholder(tf.float32)
    linear_model = W * x + b
    y = tf.placeholder(tf.float32)

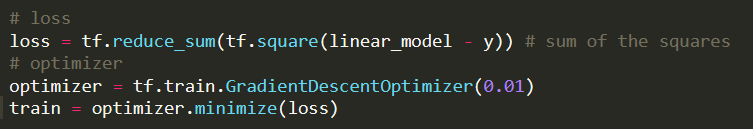

    # loss
    loss = tf.reduce_sum(tf.square(linear_model - y))  # sum of the squares

    # optimizer
    optimizer = tf.train.GradientDescentOptimizer(0.01)
    train = optimizer.minimize(loss)

    # training data
    x_train = [1, 2, 3, 4]
    y_train = [1, 2, 3, 4]

    # training loop
    init = tf.global_variables_initializer()
    sess = tf.Session()

    # Write the graph to disk; to view it, open a command prompt in this
    # folder and run: tensorboard --logdir=board
    output_graph = True
    if output_graph:
        writer = tf.summary.FileWriter('board/', sess.graph)

    sess.run(init)  # reset the variables to their (incorrect) initial values
    for i in range(100):
        sess.run(train, {x: x_train, y: y_train})

CodePudding user response:

Because the op is `reduce_sum`, it does not have to wait until all the data is ready before doing the sum; as soon as a number arrives, it can be added to the running total.
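
To make the "add one number at a time" idea concrete, here is a minimal sketch in plain Python, not TensorFlow. It assumes scalar `W` and `b`, and the helper name `loss_and_grads` and the starting values are chosen only for illustration. It shows that both the sum-of-squares loss and its gradient can be accumulated sample by sample, because the gradient of a sum is the sum of the per-sample gradients.

```python
# Plain-Python sketch of loss = sum((W*x + b - y)**2) and its gradient.
# loss_and_grads is a hypothetical helper, not a TensorFlow function.

def loss_and_grads(W, b, xs, ys):
    """Accumulate the loss and its gradients one sample at a time."""
    loss = dW = db = 0.0
    for x, y in zip(xs, ys):
        err = W * x + b - y   # residual of this sample
        loss += err ** 2      # running sum of squares
        dW += 2.0 * err * x   # this sample's share of d(loss)/dW
        db += 2.0 * err       # this sample's share of d(loss)/db
    return loss, dW, db

x_train = [1, 2, 3, 4]
y_train = [1, 2, 3, 4]
W, b = 0.3, -3.0              # starting values assumed for illustration
learning_rate = 0.01

for _ in range(100):
    loss, dW, db = loss_and_grads(W, b, x_train, y_train)
    W -= learning_rate * dW   # plain gradient-descent update
    b -= learning_rate * db

print(W, b, loss)
```

Each pass through the inner loop needs only the current sample, which is the sense in which a sum can be built up incrementally.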