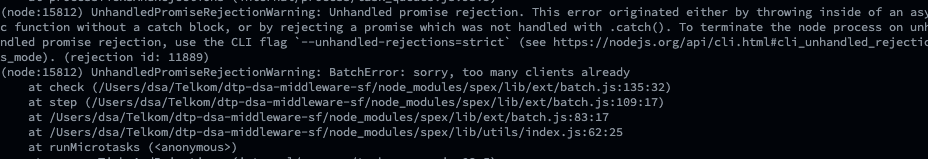

im trying to inserting hundred / thousand data based on selected data from others table, but i found an error "too many clients", here is the error

Im using pgp (pg promise) lib, here is my snippet

function call(){

for (let index = 0; index < 5; index ) {

getPendingData().then((result) => {

db.tx((t) => {

let counter = 0;

const queries = result.map((data) => {

counter ;

return db.none(`insert into test_data (id, isdeleted, parentid) values ('${uuidv1()}', 0, '${uuidv1()}x-${uuidv1()}' ) `);

});

return t.batch(queries);

});

});

}

}

let getPendingData = async () => {

return db.task('getPendingData', async (t) => {

return await t.any('select * from other_table');

});

}

(call())

im setup max pg conn is 100, any clue how to solved this without add max conn?

CodePudding user response:

There are too many problems in your code, unfortunately...

It is incorrect both in how you use promises, and in how you use pg-promise.

The issue with the promises is that you're supposed to chain them, that means using the result from db.tx (return db.tx(...)), which you're not doing, creating a loose promise, and as a result, a loose connection associated with the transaction. Also, return await is an anti-pattern.

The issue with pg-promise usage is that you're supposed to execute queries against the transaction/task context t that you are creating (as shown here). But you're executing each query against db - root connection instead, which spawns countless connection requests.

Also, creating a task to execute just one query doesn't make any sense.

If that's not bad enough, you're doing string concatenation for values, which is a strict no-no in query formatting.

And the last, multiple inserts should be executed as multi-row queries, not as separate queries, which is a waste of performance - see multi-row inserts.