When I run model.fit(x_train_lstm, y_train_lstm, epochs=3, shuffle=False, verbose=2)

the loss is always nan:

Epoch 1/3

73/73 - 5s - loss: nan - accuracy: 0.5417 - 5s/epoch - 73ms/step

Epoch 2/3

73/73 - 5s - loss: nan - accuracy: 0.5417 - 5s/epoch - 74ms/step

Epoch 3/3

73/73 - 5s - loss: nan - accuracy: 0.5417 - 5s/epoch - 73ms/step

My x_train has shape (2475, 48) and y_train has shape (2475,)

- I derive my training input with shape (2315, 160, 48): 2315 training samples, a lookback window of 160 time steps, and 48 features
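For readers who want to reproduce the shapes above, here is a minimal sketch of one way to build such windows with NumPy. The helper name `make_windows`, the random stand-in data, and the label alignment (each window paired with the label of the row right after it) are assumptions for illustration; the question does not show how the windows were actually built.

```python
import numpy as np

def make_windows(features, labels, lookback):
    """Stack `lookback` consecutive rows into one sample per target row.

    Assumption: window i covers rows i .. i+lookback-1 and is paired with
    the label at row i+lookback. The original post does not state this.
    """
    features = np.asarray(features, dtype=np.float32)
    labels = np.asarray(labels)
    n_samples = len(features) - lookback
    x = np.stack([features[i:i + lookback] for i in range(n_samples)])
    y = labels[lookback:].reshape(-1, 1)
    return x, y

# Synthetic stand-in data with the same shapes as in the question.
rng = np.random.default_rng(0)
x_train = rng.uniform(-1, 1, size=(2475, 48))
y_train = rng.integers(0, 2, size=2475)

x_train_lstm, y_train_lstm = make_windows(x_train, y_train, lookback=160)
print(x_train_lstm.shape, y_train_lstm.shape)  # (2315, 160, 48) (2315, 1)
```

With 2475 rows and a lookback of 160, this yields 2475 - 160 = 2315 samples, which matches the shapes reported in the question.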

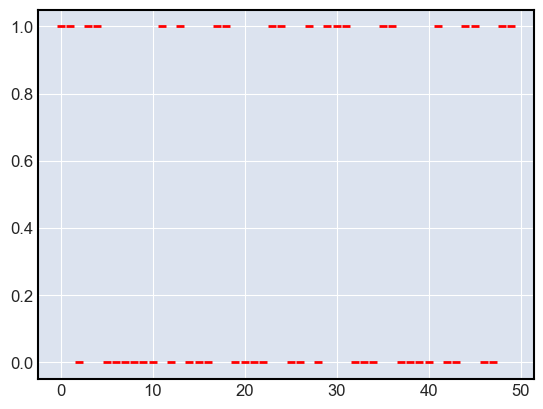

- Correspondingly, the target is just 0 or 1, with shape (2315, 1)

My model is like this:

Model: "sequential_2"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 lstm_6 (LSTM)               (None, 160, 128)          90624
 dropout_4 (Dropout)         (None, 160, 128)          0
 lstm_7 (LSTM)               (None, 160, 64)           49408
 dropout_5 (Dropout)         (None, 160, 64)           0
 lstm_8 (LSTM)               (None, 32)                12416
 dense_2 (Dense)             (None, 1)                 33
=================================================================
Total params: 152,481
Trainable params: 152,481
Non-trainable params: 0

- I tried different numbers of LSTM units (48, 60, 128, 160); none of them worked

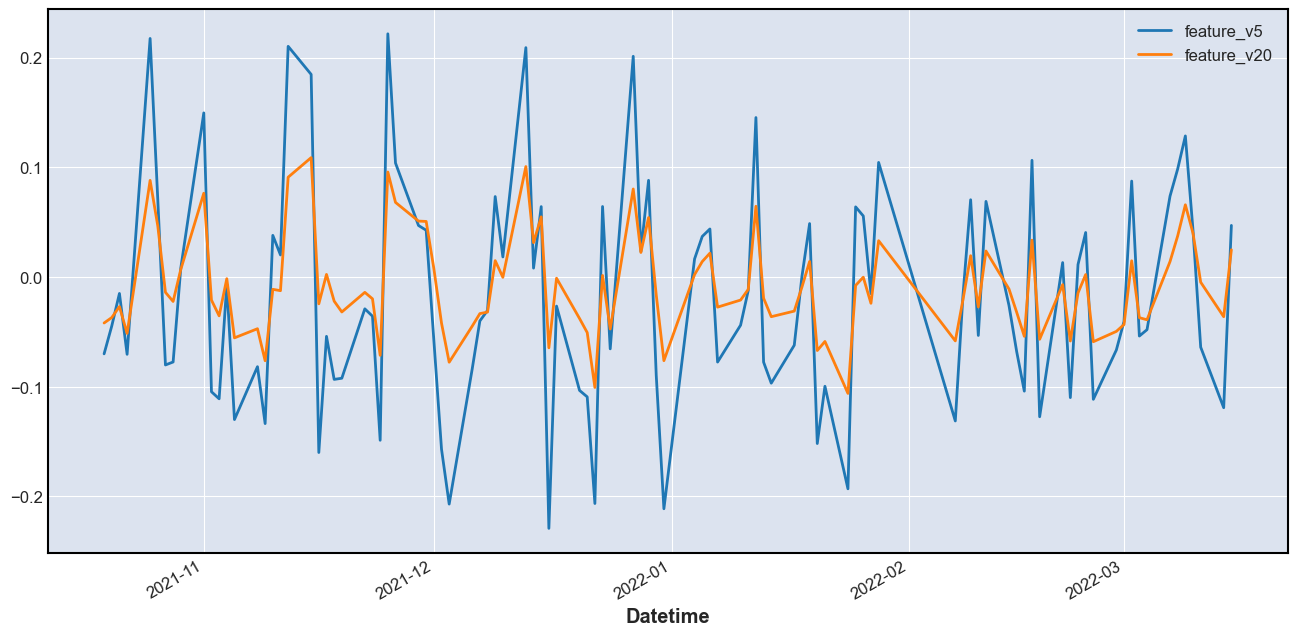

- I checked my training data; all values are in the range (-1, 1)

- There is no null in my dataset: x_train.isnull().values.any() outputs False
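A slightly broader check can be useful here, because by default pandas `isnull()` flags NaN but not infinite values, and the check above is run on the 2-D frame rather than on the windowed array that is actually passed to `fit`. The sketch below uses only NumPy; the helper name `report_array` and the demo array are hypothetical.

```python
import numpy as np

def report_array(name, arr):
    """Count NaN and inf entries and report the value range of an array."""
    arr = np.asarray(arr, dtype=np.float64)
    stats = {
        "nan": int(np.isnan(arr).sum()),
        "inf": int(np.isinf(arr).sum()),
        "min": float(np.nanmin(arr)),  # ignores NaN, but not inf
        "max": float(np.nanmax(arr)),
    }
    print(name, stats)
    return stats

# Hypothetical demo array containing one infinite value and no NaN.
demo = np.array([[0.5, -0.2], [np.inf, 0.1]])
demo_stats = report_array("demo", demo)
```

Running the same helper on `x_train_lstm` and `y_train_lstm` would confirm whether the arrays fed to the model are finite and really lie in (-1, 1).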

Now I have no clue what else to try.

My model code is:

from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, LSTM
from tensorflow.keras.layers import Dropout

def create_model(win=100, features=9):
    model = Sequential()
    model.add(LSTM(units=128, activation='relu', input_shape=(win, features),
                   return_sequences=True))
    model.add(Dropout(0.1))
    model.add(LSTM(units=64, activation='relu', return_sequences=True))
    model.add(Dropout(0.2))
    # no need to return sequences from the last LSTM layer
    model.add(LSTM(units=32))
    # adding the output layer
    model.add(Dense(units=1, activation='sigmoid'))
    # may also try mean_squared_error
    model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
    return model

Here I plot some train_y samples:

CodePudding user response:

Two things: first, try normalizing your time series data. Second, using relu as the activation function for LSTM layers is not conventional; the Keras default is tanh, which keeps the hidden state bounded. Check this
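Both suggestions can be illustrated with a small NumPy-only sketch. The function names `standardize` and `run_recurrence`, the weight of 1.5, and the constant input are assumptions chosen for illustration. The recurrence is a one-unit toy, not a real LSTM cell; it only shows why an unbounded activation can blow up over a 160-step window while tanh cannot.

```python
import numpy as np

def standardize(train, other):
    """Scale features to zero mean and unit variance using training statistics only."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std = np.where(std == 0, 1.0, std)  # avoid division by zero for constant features
    return (train - mean) / std, (other - mean) / std

def run_recurrence(activation, steps=160, weight=1.5, x=1.0):
    """Toy recurrence h_t = activation(weight * h_{t-1} + x); not a full LSTM."""
    h = 0.0
    for _ in range(steps):
        h = activation(weight * h + x)
    return h

def relu(v):
    return max(v, 0.0)

h_relu = run_recurrence(relu)     # grows geometrically with the number of steps
h_tanh = run_recurrence(np.tanh)  # stays within [-1, 1]
print(f"relu: {h_relu:.3e}, tanh: {h_tanh:.3f}")

# Hypothetical 2-D feature matrices standing in for train and test data.
rng = np.random.default_rng(0)
train_2d = rng.normal(5.0, 3.0, size=(100, 3))
test_2d = rng.normal(5.0, 3.0, size=(20, 3))
train_scaled, test_scaled = standardize(train_2d, test_2d)
```

In the model from the question, removing `activation='relu'` from the two LSTM layers makes Keras fall back to its default tanh activation, which is probably the first change worth trying.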