I am reading Agner Fog's optimization manuals - and I came across this example:

double data[LEN];

void compute()

{

const double A = 1.1, B = 2.2, C = 3.3;

int i;

for(i=0; i<LEN; i ) {

data[i] = A*i*i B*i C;

}

}

Agner indicates that there's a way to optimize this code - by realizing that the loop can avoid using costly multiplications, and instead use the "deltas" that are applied per iteration.

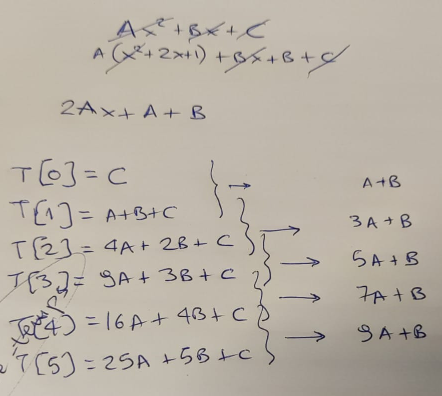

I use a piece of paper to confirm the theory, first...

...and of course, he is right - in each loop iteration we can compute the new result based on the old one, by adding a "delta". This delta starts at value "A B", and is then incremented by "2*A" on each step.

So we update the code to look like this:

void compute()

{

const double A = 1.1, B = 2.2, C = 3.3;

const double A2 = A A;

double Z = A B;

double Y = C;

int i;

for(i=0; i<LEN; i ) {

data[i] = Y;

Y = Z;

Z = A2;

}

}

In terms of operational complexity, the difference in these two versions of the function are indeed, striking. Multiplications have a reputation for being significantly slower in our CPUs, compared to additions. And we have replaced 3 multiplications and 2 additions... with just 2 additions!

So I go ahead and add a loop to execute compute a lot of times - and then keep the minimum time it took to execute:

unsigned long long ts2ns(const struct timespec *ts)

{

return ts->tv_sec * 1e9 ts->tv_nsec;

}

int main(int argc, char *argv[])

{

unsigned long long mini = 1e9;

for(int i=0; i<1000; i ) {

struct timespec t1, t2;

clock_gettime(CLOCK_MONOTONIC_RAW, &t1);

compute();

clock_gettime(CLOCK_MONOTONIC_RAW, &t2);

unsigned long long diff = ts2ns(&t2) - ts2ns(&t1);

if (mini > diff) mini = diff;

}

printf("[-] Took: %lld ns.\n", mini);

}

I compile the two versions, run them... and see this:

# gcc -O3 -o 1 ./code1.c

# gcc -O3 -o 2 ./code2.c

# ./1

[-] Took: 405858 ns.

# ./2

[-] Took: 791652 ns.

Well, that's unexpected. Since we report the minimum time of execution, we are throwing away the "noise" caused by various parts of the OS. We also took care to run in a machine that does absolutely nothing. And the results are more or less repeatable - re-running the two binaries shows this is a consistent result:

# for i in {1..10} ; do ./1 ; done

[-] Took: 406886 ns.

[-] Took: 413798 ns.

[-] Took: 405856 ns.

[-] Took: 405848 ns.

[-] Took: 406839 ns.

[-] Took: 405841 ns.

[-] Took: 405853 ns.

[-] Took: 405844 ns.

[-] Took: 405837 ns.

[-] Took: 406854 ns.

# for i in {1..10} ; do ./2 ; done

[-] Took: 791797 ns.

[-] Took: 791643 ns.

[-] Took: 791640 ns.

[-] Took: 791636 ns.

[-] Took: 791631 ns.

[-] Took: 791642 ns.

[-] Took: 791642 ns.

[-] Took: 791640 ns.

[-] Took: 791647 ns.

[-] Took: 791639 ns.

The only thing to do next, is to see what kind of code the compiler created for each one of the two versions.

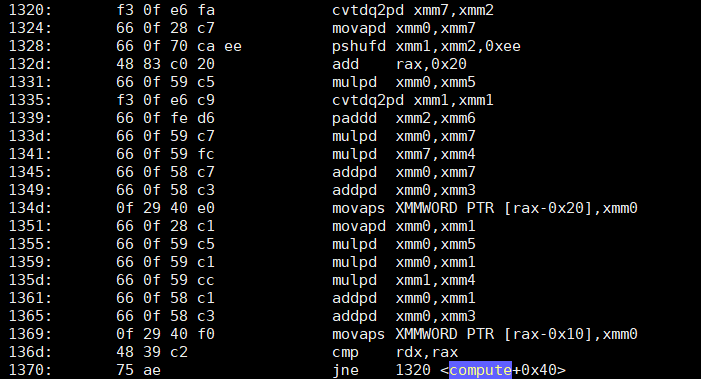

objdump -d -S shows that the first version of compute - the "dumb", yet somehow fast code - has a loop that looks like this:

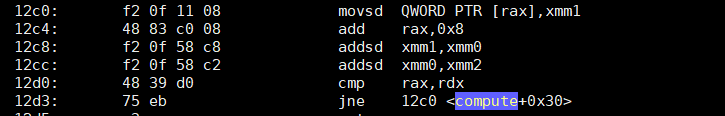

What about the second, optimized version - that does just two additions?

Now I don't know about you - but speaking for myself, I am... puzzled. The second version has approximately 4 times less instructions - with the two major ones being just two SSE-based additions (addsd). The first version, not only has 4 times more instructions... it's also full (as expected) of multiplications (mulpd).

I confess I did not expect that result.

Not because I am a fan of Agner (I am, but that's irrelevant).

Any idea what I am missing? Did I make any mistake here, that can explain the difference in speed? Note that I have done the test on a Xeon W5580 and a Xeon E5 1620 - in both, the first (dumb) version is much faster than the second one.

EDIT: For easy reproduction of the results, I added two gists with the two versions of the code: Dumb yet somehow faster and optimized, yet somehow slower.

P.S. Please don't comment on floating point accuracy issues; that's not the point of this question.

CodePudding user response:

The key to understanding the performance differences you're seeing is in vectorization. Yes, the addition-based solution has a mere two instructions in its inner loop, but the important difference is not in how many instructions there are in the loop, but in how much work each instruction is performing.

In the first version, the output is purely dependent on the input: Each data[i] is a function just of i itself, which means that each data[i] can be computed in any order: The compiler can do them forwards, backwards, sideways, whatever, and you'll still get the same result — unless you're observing that memory from another thread, you'll never notice which way the data is being crunched.

In the second version, the output isn't dependent on i — it's dependent on the A and Z from the last time around the loop.

If we were to represent the bodies of these loops as little mathematical functions, they'd have very different overall forms:

- f(i) -> di

- f(Y, Z) -> (di, Y', Z')

In the latter form, there's no actual dependency on i — the only way you can compute the value of the function is by knowing the previous Y and Z from the last invocation of the function, which means that the functions form a chain — you can't do the next one until you've done the previous one.

Why does that matter? Because the CPU has vector parallel instructions that each can perform four arithmetic operations at the same time! (AVX CPUs can do even more in parallel.) That's four multiplies, four adds, four subtracts, four comparisons — four whatevers! So if the output you're trying to compute is only dependent on the input, then you can safely do four at a time — it doesn't matter if they're forward or backward, since the result is the same. But if the output is dependent on previous computation, then you're stuck doing it in serial form — one at a time.

That's why the "longer" code wins for performance. Even though it has a lot more setup, and it's actually doing a lot more work, most of that work is being done in parallel: It's not computing just data[i] in each iteration of the loop — it's computing data[i], data[i 1], data[i 2], and data[i 3] at the same time, and then jumping to the next set of four.

To expand out a little what I mean here, the compiler first turned the original code into something like this:

int i;

for (i = 0; i < LEN; i = 4) {

data[i 0] = A*(i 0)*(i 0) B*(i 0) C;

data[i 1] = A*(i 1)*(i 1) B*(i 1) C;

data[i 2] = A*(i 2)*(i 2) B*(i 2) C;

data[i 3] = A*(i 3)*(i 3) B*(i 3) C;

}

You can convince yourself that'll do the same thing as the original, if you squint at it. It did that because of all of those identical vertical lines of operators: All of those * and operations are the same operation, just being performed on different data — and the CPU has special built-in instructions that can perform four * or four operations on different data at the same time, in a mere single clock cycle each.

Notice the letter p in the instructions in the faster solution — addpd and mulpd — and the letter s in the instructions in the slower solution — addsd. That's "Add Packed Doubles" and "Multiply Packed Doubles," versus "Add Single Double."

Not only that, it looks like the compiler partially unrolled the loop too — the loop doesn't just do four values each iteration, but actually eight, and interleaved the operations to avoid dependencies and stalls, which cuts down on the number of times that the assembly code has to test i < 1000 as well.

All of this only works, though, if there are no dependencies between iterations of the loop: If the only thing that determines what happens for each data[i] is i itself. If there are dependencies, if data from the last iteration influences the next one, then the compiler may be so constrained by them that it can't alter the code at all — instead of the compiler being able to use fancy parallel instructions or clever optimizations (CSE, strength reduction, loop unrolling, reordering, et al.), you get out code that's exactly what you put in — add Y, then add Z, then repeat.

But here, in the first version of the code, the compiler correctly recognized that there were no dependencies in the data, and figured out that it could do the work in parallel, and so it did, and that's what makes all the difference.

CodePudding user response:

The main difference here is loop dependencies. The loop in the second case is dependent -- operations in the loop depend on the previous iteration. This means that each iteration can't even start until the previous iteration finishes. In the first case, the loop body is fully independent -- everything in the loop body is self contained, depending solely on the iteration counter and constant values. This means that the loop can be computed in parallel -- multiple iterations can all work at the same time. This then allows the loop to be trivially unrolled and vectorized, overlapping many instructions.

If you were to look at the performance counters (e.g. with perf stat ./1), you would see that the first loop, besides running faster is also running many more instructions per cycle (IPC). The second loop, in contrast, has more dependency cycles -- time when the CPU is sitting around doing nothing, waiting for instructions to complete, before it can issue more instructions.

The first one may bottleneck on memory bandwidth, especially if you let the compiler auto-vectorize with AVX on your Sandybridge (gcc -O3 -march=native), if it manages to use 256-bit vectors. At that point IPC will drop, especially for an output array too large for L3 cache.

One note, unrolling and vectorizing do not require independent loops -- you can do them when (some) loop dependencies are present. However, it is harder and the payoff is less. So if you want to see the maximum speedup from vectorization, it helps to remove loop dependencies where possible.