I have a Python script that checks whether the APP_HOME environment variable is set and then picks up a few files from that directory to continue execution.
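For context, the check looks roughly like the sketch below. The function name `resolve_app_home` and the error messages are illustrative assumptions, not the actual script; only the `APP_HOME` variable name comes from my setup.

```python
import os
from pathlib import Path


def resolve_app_home(env=os.environ):
    """Return APP_HOME as a Path, or raise if it is unset or not a directory.

    `env` defaults to the process environment; passing a plain dict
    makes the check easy to exercise without touching os.environ.
    """
    value = env.get("APP_HOME")
    if not value:
        raise RuntimeError("APP_HOME environment variable is not set")
    home = Path(value)
    if not home.is_dir():
        raise RuntimeError(f"APP_HOME does not point to a directory: {home}")
    return home
```

The rest of the script would then read its files relative to the returned path, for example `resolve_app_home() / "config.json"` (a hypothetical file name).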

When it runs on Windows, I set the environment variable to point to the APP_HOME directory. When the Python script is set up as a workflow in Databricks, the workflow gives me an option to set environment variables while choosing the cluster for the task.

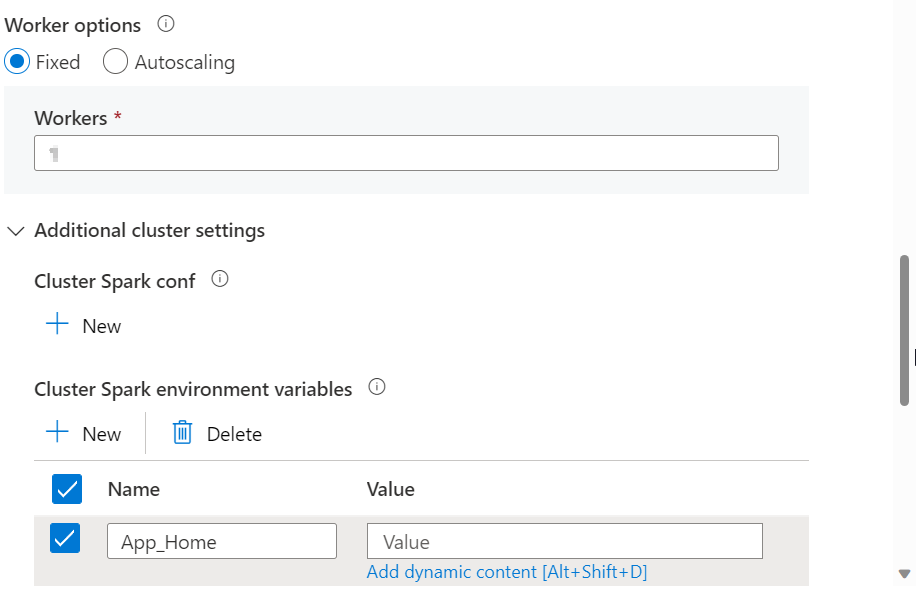

However, if the Python script runs as a Databricks Python activity from Azure Data Factory, I have not found an option to set the environment variable for the Databricks cluster that ADF creates. Is there a way to set the APP_HOME environment variable in ADF for the Databricks cluster when the Databricks Python activity is used?

CodePudding user response:

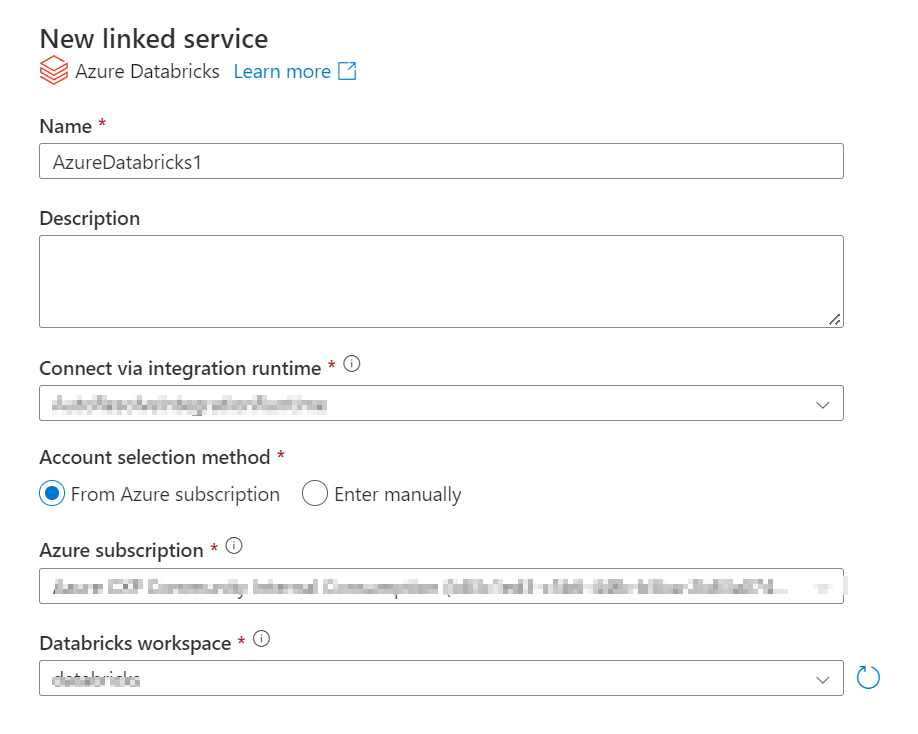

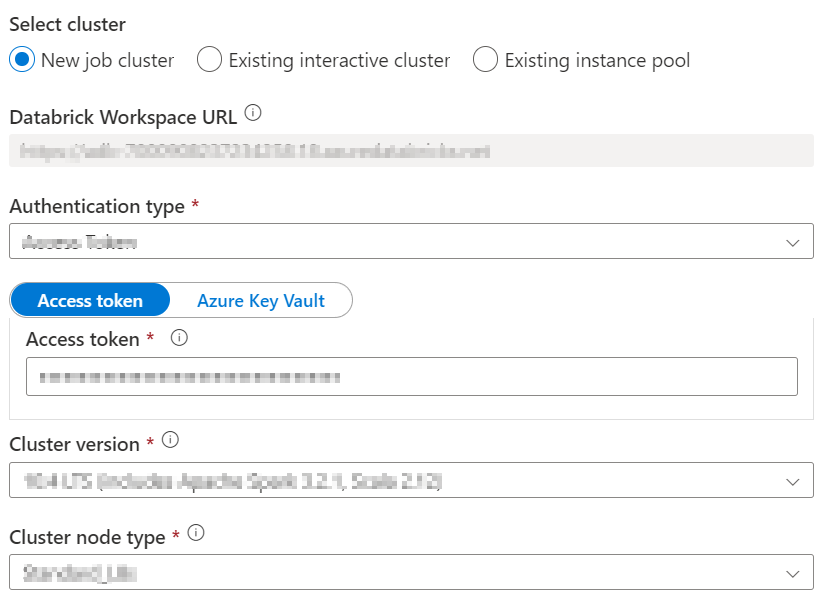

I created a data factory and a pipeline in it, then added Azure Databricks as a linked service. After filling in the required fields for the new job cluster, expand the cluster's additional settings; there you will find the environment variables field.
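If you prefer to edit the linked service definition directly instead of using the UI, the same setting should correspond to the `newClusterSparkEnvVars` property of the Azure Databricks linked service. The sketch below builds such a definition as a Python dict and prints it as JSON. The linked service name, the placeholder values in angle brackets, and the `/dbfs/path/to/app_home` path are assumptions for illustration, and authentication settings are left out; check the property names against your own linked service JSON before relying on them.

```python
import json

# Sketch of an Azure Databricks linked service definition for a new job cluster.
# Names and placeholder values are hypothetical; authentication is omitted.
linked_service = {
    "name": "AzureDatabricksLinkedService",  # hypothetical name
    "properties": {
        "type": "AzureDatabricks",
        "typeProperties": {
            "domain": "https://<region>.azuredatabricks.net",  # placeholder
            "newClusterVersion": "<runtime-version>",  # placeholder
            "newClusterNodeType": "<node-type>",  # placeholder
            "newClusterNumOfWorker": "1",
            # Environment variables applied to the cluster that ADF creates.
            "newClusterSparkEnvVars": {
                "APP_HOME": "/dbfs/path/to/app_home",  # assumed path
            },
        },
    },
}

print(json.dumps(linked_service, indent=2))
```

With this in place, the script run by the Databricks Python activity should be able to read the value through `os.environ["APP_HOME"]`.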

Reference image: