I'm trying to send the key I got from anti captcha, an example of the key is like this

{

"errorId":0,

"status":"ready",

"solution":

{

"gRecaptchaResponse":"3AHJ_VuvYIBNBW5yyv0zRYJ75VkOKvhKj9_xGBJKnQimF72rfoq3Iy-DyGHMwLAo6a3"

},

"cost":"0.001500",

"ip":"46.98.54.221",

"createTime":1472205564,

"endTime":1472205570,

"solveCount":"0"

}

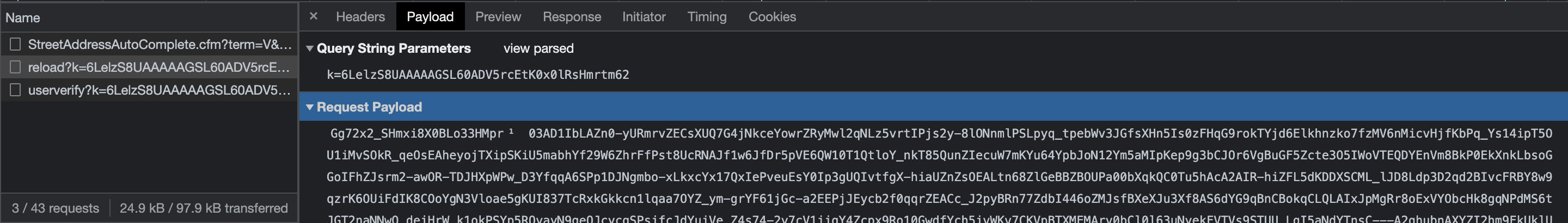

I'm trying to send the value in the g-response key (it contains bypass capcha) but I don't know how to send a payload request to the Recaptcha URL, the network description is like this

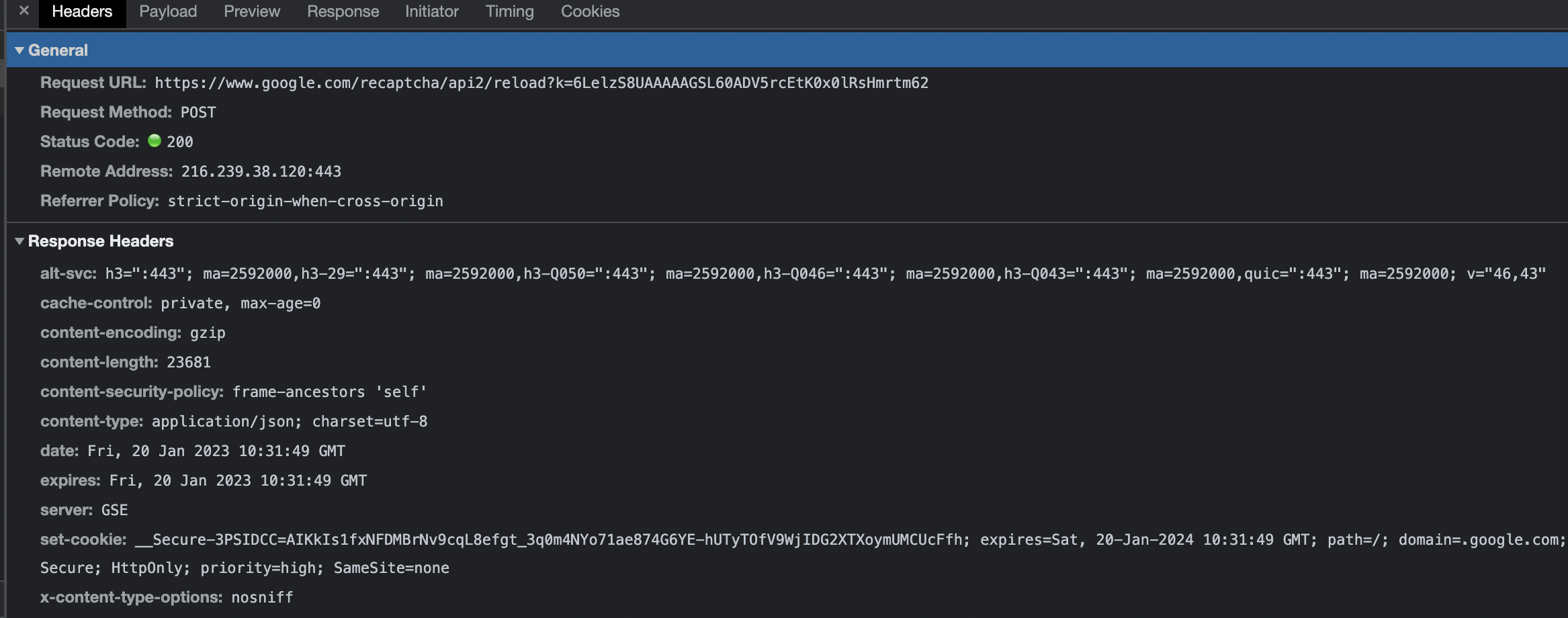

these are the headers for the captcha,

I want to use the key that I got from anticapcha to bypass, then I make a request for data search after the capcha has been bypassed, my code is like this

class Locator(object):

"""class to find school locator"""

def __init__(

self,

url: Optional[str] = "https://mybaragar.com/index.cfm?",

params: Optional[dict[str, Any]] = None,

headers: Optional[dict[str, Any]] = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",

},

api_key: Optional[str] = None,

website_key: Optional[str] = None,

):

self.url: str = url

self.params: dict[str, Any] = params

self.headers: dict[str, Any] = headers

self.solver = recaptchaV2Proxyless()

# solver setup

self.api_key = api_key

self.website_key = website_key

self.client = Client()

def solve_captcha(self, sitekey: str):

"""resolve captcha v2

Args:

sitekey (str): website key for bypass capcha

"""

headers: dict = {

"content-type": "application/x-protobuffer",

"cookie": "__Secure-3PSID=SgjFUgcMhK-HVyybbLH0riteaGdyRWxselnkRXWJxq2rjwyvYXp3pavZE0ePt1obNzd12Q.; __Secure-3PAPISID=Y_7wkodD5a0ZuHN4/Ar2CPyucPiF7dfJA0; NID=511=s-gIuV4uvQNKsfuPUm6Z41MVf8EMHdBeCD0ydgE1o6bGIQ8JE0LHu2GagudzwTjPdCfI78TASrrK6Xq9XGzk6lVaLgvNl6I9SNQ5MaVQr2hDj75FqZozXKgYYAdBAMUc-6wi1xI89i6HazvhC_7SyvajPmbXdD8J6rob43zrjlERiPshgiV8oozdvh16VdSrGQzZfWYNxqFFN95iEMo6BAXj_iEWnaRv_7vWw5oHKYcvnK3B7w_-p3C2477AXkUCEmMzRCDLlzRJjWhQ78XJD5IixMImsXmYJyGMKpkUz7gPns358uq5_bSB1WjG72fuFr9YK6XT_CqoIbLaxW1a7EWeegUjGDaXkNRCwvCvzkcMqkqLaOcT6lhqxAVWTgkT64hQX5HAr1kLd2j2Of8gZozSWqsPhaGQmCwOi4jTFxzR9B_SKVQxBBTDEMPk7XPDVeRrviwOPJJAwW55AInDpkY2Tx789-jYhpUn3t--japlkbZzpjInu6GX_wXK7ABSMRVSfpeFIQky62f-BroPYLQkU8JalDmVnQ_9gO3HsKlx1pvxV96aXNkaYu9WdW0PJ8MKc__whIenFw; __Secure-3PSIDCC=AIKkIs2ZZCAwmd6CAU2iZePJJjBhda6W0UW1jQ9rQ3phpRz_zUnE3H4cXYZRAetQu1dHi34gM6Ga",

"origin": "https://www.google.com",

"referer": "https://www.google.com/recaptcha/api2/bframe?hl=en&v=Gg72x2_SHmxi8X0BLo33HMpr&k=6LelzS8UAAAAAGSL60ADV5rcEtK0x0lRsHmrtm62",

"sec-ch-ua": '"Not_A Brand";v="99", "Google Chrome";v="109", "Chromium";v="109"',

"sec-ch-ua-mobile": "?0",

"sec-ch-ua-platform": "macOS",

"sec-fetch-dest": "empty",

"sec-fetch-mode": "cors",

"ec-fetch-site":"same-origin",

"user-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",

"x-client-data": ""

}

response = self.client.post(url, headers=headers)

print(response.text)

def parse(self):

soup = self.client.get(url=self.url, params=self.params,)

index_url = soup.css_first("form#SLSearchForm[action]").attrs["action"]

base_url = "https://mybaragar.com/{}".format(index_url)

def search(self, update: bool = False):

searcher: SearchGenerator = SearchGenerator(

search_url="https://geographic.org/streetview/canada/bc/west_vancouver.html",

update_data=update,

)

reports = searcher.report()

df = pd.read_csv(reports)

datas = df.to_dict()

can anyone help?,

CodePudding user response:

You don't need anything regarding reload / verify post. That's will be handled by the anticaptcha team.

You should use the response from the anticaptcha library for submitting data into the target site, not recaptcha's verify or reload endpoint.