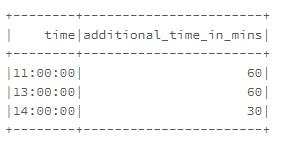

I have the below pyspark dataframe.both are string columns.

time additional_time_in_mins

11:00:00 60

13:00:00 60

14:00:00 30

I have to add the minutes in the additional time column to actual time and create an output as below in pyspark.

Expected output:

new_time

12:00:00

14:00:00

14:30:00

Is there a way to do this in pyspark

CodePudding user response:

One simple option is converting time column to bigint in seconds using unix_timestamp function, add the minutes (minutes * 60s) and then cast the result back to timestamp.

Lastly, convert to hourly format.

df = df.withColumn('new_time', F.date_format((F.unix_timestamp('time', 'HH:mm:ss') F.col('additional_time_in_mins')*60).cast('timestamp'), 'HH:mm:ss'))

df.show()

-------- ----------------------- --------

| time|additional_time_in_mins|new_time|

-------- ----------------------- --------

|11:00:00| 60|12:00:00|

|13:00:00| 60|14:00:00|

|14:00:00| 30|14:30:00|

-------- ----------------------- --------

CodePudding user response:

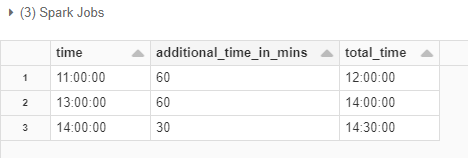

Other way to do this using UDF:

from pyspark.sql.functions import date_format, col

data = [

("11:00:00", "60"),

("13:00:00", "60"),

("14:00:00", "30"),

]

df = spark.createDataFrame(data, ["time", "additional_time_in_mins"])

df.show()

UDF logic to sum time

from pyspark.sql.types import StringType, IntegerType

from pyspark.sql.functions import udf

@udf(returnType=StringType())

def sum_time(var_time, additional_time):

# Converting var_time string to time

var_time = datetime.strptime(var_time, '%H:%M:%S').time()

#Using date to utitlise the time function

combined_time = (datetime.combine(date.today(), var_time) timedelta(minutes=additional_time)).time()

return str(combined_time)

Using UDF to get the final output:

df = df.withColumn(

"total_time", sum_time(col("time"), col("additional_time_in_mins").cast(IntegerType()))

)

display(df)