Is each run of a neural network different? I have documented a really good result, but now the model does not perform the same each time I run it. Is there a seed command I can use?

I am writing a computer science research paper on the VGG16 model, so how do I actually report a good run? Right now I have `CSVLogger` and `ModelCheckpoint` imported from `tensorflow.keras.callbacks`. What is the usual practice for choosing which results to report if each run is different?

```python
# Imports added for completeness; `tf_SGD` is assumed to alias Keras' SGD optimizer.
from tensorflow.keras.applications import VGG16
from tensorflow.keras.callbacks import CSVLogger, EarlyStopping, ModelCheckpoint
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import SGD as tf_SGD
from tensorflow.keras.optimizers.schedules import ExponentialDecay
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# train_data_dir, validation_data_dir, img_height, img_width, batch_size and
# num_classes are defined elsewhere in the original script.

train_datagen = ImageDataGenerator(
    rescale=1./255,
    horizontal_flip=True,
    fill_mode="nearest",
    zoom_range=0.1,
    width_shift_range=0.1,
    height_shift_range=0.1,
    rotation_range=5)

test_datagen = ImageDataGenerator(
    rescale=1./255,
    horizontal_flip=True,
    fill_mode="nearest",
    zoom_range=0.1,
    width_shift_range=0.1,
    height_shift_range=0.1,
    rotation_range=5)

train_generator = train_datagen.flow_from_directory(
    train_data_dir,
    target_size=(img_height, img_width),
    batch_size=batch_size,
    class_mode="categorical")

validation_generator = test_datagen.flow_from_directory(
    validation_data_dir,
    target_size=(img_height, img_width),
    batch_size=batch_size,
    class_mode="categorical")

lr_schedule1 = ExponentialDecay(
    initial_learning_rate=.9,
    decay_steps=100000,
    decay_rate=0.96,
    staircase=True)

# Keras expects (height, width, channels), matching target_size above.
model = VGG16(weights="imagenet", include_top=False, input_shape=(img_height, img_width, 3))

# Freeze the first 10 layers of the pretrained VGG16 base.
for layer in model.layers[:10]:
    layer.trainable = False

x = model.output
x = Flatten()(x)
x = Dense(3, activation='relu')(x)
x = Dense(3, activation='selu')(x)
predictions = Dense(num_classes, activation="softmax")(x)

model_final = Model(model.input, predictions)

model_final.compile(loss="binary_crossentropy",
                    optimizer=tf_SGD(learning_rate=lr_schedule1),
                    metrics=["accuracy"])

# save_freq='epoch' (save once per epoch) replaces the deprecated period=1.
checkpoint = ModelCheckpoint("weather1.h5", monitor='val_accuracy', verbose=1, save_best_only=True, save_weights_only=False, mode='auto', save_freq='epoch')
early = EarlyStopping(monitor='val_accuracy', min_delta=0, patience=10, verbose=1, mode='auto')

filename = str(input("What is the desired filename? \n \t"))
csv_logger = CSVLogger(f'{filename}.log')

history_object = model_final.fit(
    train_generator,
    # steps_per_epoch=42,  # nb_train_samples
    epochs=100,  # epochs=15
    validation_data=validation_generator,
    # validation_steps=nb_validation_samples,
    callbacks=[checkpoint, early, csv_logger])
```

Answer:

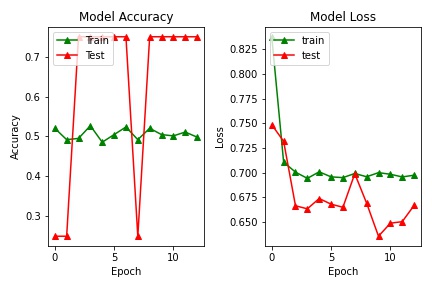

So there are several problems with this code that lead to the performance issues you are seeing:

- Your `ImageDataGenerator` for the validation dataset introduces random augmentations. You should not augment validation data: the evaluation would not be reproducible, and you would have no consistent baseline for comparing epochs. You wouldn't know whether an improvement came from the network learning or from the particular random transformations in that batch. Remove the augmentations and keep only the `rescale` operation. You can certainly leave the augmentations in the training generator, but do not apply random augmentations to the validation / test data:

    ```python
    test_datagen = ImageDataGenerator(rescale=1./255)
    ```

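- Your hidden `Dense` layers use ReLU and SELU (a ReLU variant). These activations are fine for hidden layers, but no ReLU variant is suitable as the output activation for classification; your output layer already uses `softmax`, which is the appropriate choice for 3 mutually exclusive classes. However, you are specifying `binary_crossentropy` as your loss function, which scores each output as an independent yes/no prediction rather than as one choice among the classes. The 3-unit SELU layer in front of the output also adds little, so you can drop it and feed the softmax layer directly:

    ```python
    x = Dense(3, activation='relu')(x)
    predictions = Dense(num_classes, activation='softmax')(x)
    ```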

- In addition, the `Dense` layer before your output layer compresses the flattened VGG16 features down to just three dimensions (for a 224×224 input, that is 25,088 values squeezed into 3). Such a narrow bottleneck is unlikely to keep enough information to separate the classes, so I would suggest using more neurons, maybe 128 or 256. You will need to experiment with this:

    ```python
    x = Dense(128, activation='relu')(x)
    predictions = Dense(num_classes, activation='softmax')(x)
    ```

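- Back to the loss function: make sure you are using the proper loss for multi-class classification. Your generators use `class_mode="categorical"`, which yields one-hot encoded labels, so `categorical_crossentropy` is the matching loss here. (`sparse_categorical_crossentropy` expects integer labels, which you would get with `class_mode="sparse"`.)

    ```python
    model_final.compile(loss="categorical_crossentropy",
                        optimizer=tf_SGD(learning_rate=lr_schedule1),
                        metrics=["accuracy"])
    ```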

- Finally, you may want to try an adaptive optimizer such as `Adam`, which often converges faster than plain SGD with less learning-rate tuning. I would try both SGD (what you have now) and Adam to see which fits your data better.
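
One more thing worth checking alongside the optimizer: the question's `ExponentialDecay` schedule starts SGD at a learning rate of 0.9, which is very high for fine-tuning a pretrained network. The schedule can be checked by hand with the minimal pure-Python sketch below, which re-implements the formula documented for `tf.keras.optimizers.schedules.ExponentialDecay`. The function `exponential_decay` is a hypothetical helper (not a Keras API), and `steps_per_epoch = 50` is an assumed value, since the real number depends on your dataset size and `batch_size`.

```python
import math


def exponential_decay(step, initial_learning_rate, decay_steps, decay_rate, staircase=False):
    """Learning rate at `step`, following the formula documented for
    tf.keras.optimizers.schedules.ExponentialDecay:
        initial_learning_rate * decay_rate ** (step / decay_steps)
    With staircase=True the exponent is floored to an integer."""
    exponent = step / decay_steps
    if staircase:
        exponent = math.floor(exponent)
    return initial_learning_rate * decay_rate ** exponent


# Settings from the question's lr_schedule1.
INITIAL_LR, DECAY_STEPS, DECAY_RATE = 0.9, 100_000, 0.96

# Hypothetical: the real steps per epoch depend on dataset size and batch_size.
steps_per_epoch = 50
total_steps = 100 * steps_per_epoch  # epochs = 100 in the question

for step in (0, total_steps, DECAY_STEPS, 2 * DECAY_STEPS):
    lr = exponential_decay(step, INITIAL_LR, DECAY_STEPS, DECAY_RATE, staircase=True)
    print(f"step {step:>7}: learning rate {lr:.4f}")
```

With `staircase=True` and `decay_steps=100000`, the rate stays at exactly 0.9 until step 100,000, so unless a run exceeds that many training steps the schedule never decays at all. A much smaller starting rate, or a smaller `decay_steps`, is worth trying; the exact values are something to tune.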

Hope this helps. Let us know whether these changes resolve the performance issues you are seeing!
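
On the question's other points: yes, runs differ, because the new `Dense` layers start from random weights, the data order and augmentations are random, and some GPU operations are non-deterministic. The sketch below assumes NumPy is installed and treats TensorFlow as optional; `set_global_seed`, `best_val_accuracy` and `summarize` are hypothetical helpers, not Keras APIs. Inside, it calls `tf.keras.utils.set_random_seed` and `tf.config.experimental.enable_op_determinism`, which exist in recent TensorFlow 2.x releases; `flow_from_directory` also accepts a `seed` argument for shuffling and transformations. For a paper, a common practice is to train several seeded runs and report the mean and standard deviation instead of a single best run. Ideally, evaluate each run's saved checkpoint on a separate held-out test set, because the best validation accuracy was also used to select that checkpoint and is therefore optimistic; the sketch summarizes `val_accuracy` from the `CSVLogger` files only because that is what the logs record.

```python
import csv
import random
import statistics

import numpy as np


def set_global_seed(seed):
    """Seed Python's `random`, NumPy and, if it is installed, TensorFlow."""
    random.seed(seed)
    np.random.seed(seed)  # ImageDataGenerator's random transforms draw from NumPy
    try:
        import tensorflow as tf
    except ImportError:
        return  # TensorFlow not installed: Python and NumPy are still seeded.
    # Seeds Python, NumPy and TensorFlow in one call.
    tf.keras.utils.set_random_seed(seed)
    # Opt in to deterministic TensorFlow ops. This can slow training, and ops
    # without a deterministic implementation raise an error when they run.
    tf.config.experimental.enable_op_determinism()


def best_val_accuracy(lines):
    """Best val_accuracy in one CSVLogger log, given its lines (e.g. an open file).

    CSVLogger writes a comma-separated header row (epoch, accuracy, loss,
    val_accuracy, val_loss, ...) followed by one row per epoch.
    """
    return max(float(row["val_accuracy"]) for row in csv.DictReader(lines))


def summarize(scores):
    """Mean and sample standard deviation across runs (needs at least 2 runs)."""
    return statistics.mean(scores), statistics.stdev(scores)


# Usage sketch (file names and the seed list are hypothetical):
# scores = []
# for seed in (0, 1, 2, 3, 4):
#     set_global_seed(seed)
#     ...  # build, compile and fit the model with CSVLogger(f"run_seed{seed}.log")
#     with open(f"run_seed{seed}.log", newline="") as f:
#         scores.append(best_val_accuracy(f))
# mean, std = summarize(scores)
# print(f"best val_accuracy: {mean:.3f} ± {std:.3f} over {len(scores)} seeds")
```

Seeding makes repeated runs comparable on the same machine and software versions, but exact agreement across different GPUs or library versions is not guaranteed, which is another reason to report the spread over seeds rather than one number.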