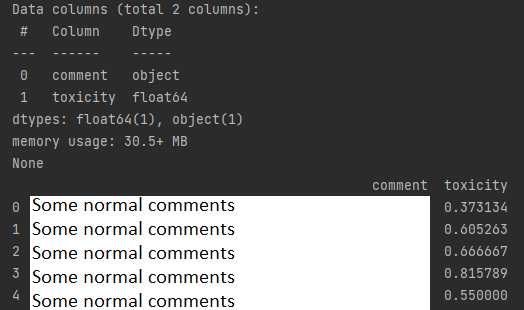

I have a basic dataset with two columns: 'comment' (dtype object) and 'toxicity' (dtype float). The dataset's shape is (1999516, 2).

I'm trying to add a new column named 'tokenized' using nltk's word_tokenize function, so that I can build a bag of words, like this:

import nltk
import pandas as pd

dataset = pd.read_csv('toxic_comment_classification_dataset.csv')

dataset['tokenized'] = dataset['comment'].apply(nltk.word_tokenize)

as shown in cell "In [22]" of the screenshot above.

I don't get any error message at first; after waiting about 5 minutes, I get this error:

TypeError: expected string or bytes-like object

How can I add the tokenized comments to my DataFrame as a new column?

CodePudding user response:

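It depends on the data in your comment column. The error suggests that not every value is a string; most likely some comments are missing, and pandas reads missing values as NaN, which is a float. You can tokenize only the string values and keep the other types as they are with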

dataset['tokenized'] = dataset['comment'].apply(lambda x: nltk.word_tokenize(x) if isinstance(x,str) else x)
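To confirm that non-string values are the cause, you can count them before tokenizing. The following is a minimal sketch on a tiny made-up DataFrame, not your real data. The helper names `simple_tokenize` and `tokenize_column` are hypothetical, and `simple_tokenize` is only a whitespace-splitting stand-in so the example runs without the NLTK tokenizer data; in your code you would pass `nltk.word_tokenize` instead. Unlike the one-liner above, this variant maps non-strings to an empty list, which is an assumption about what you want downstream.

```python
import pandas as pd


def simple_tokenize(text):
    # Stand-in tokenizer (whitespace split) so the sketch runs without NLTK data.
    # For real use, pass nltk.word_tokenize as the tokenizer instead.
    return text.split()


def tokenize_column(comments, tokenizer=simple_tokenize):
    # Tokenize string entries; map anything else (e.g. NaN) to an empty list.
    return comments.apply(lambda x: tokenizer(x) if isinstance(x, str) else [])


# Made-up sample with one missing comment, mimicking what read_csv can produce.
dataset = pd.DataFrame({
    "comment": ["You are great", float("nan"), "so bad"],
    "toxicity": [0.0, 0.1, 0.9],
})

# Boolean mask of rows whose comment is not a string.
non_string = ~dataset["comment"].apply(lambda x: isinstance(x, str))
print("non-string comments:", int(non_string.sum()))

dataset["tokenized"] = tokenize_column(dataset["comment"])
print(dataset)
```

Returning an empty list keeps every cell of the new column the same type (a list of tokens), which tends to be easier to feed into a bag-of-words step than a mix of lists and NaN. If you would rather drop those rows, `dataset.dropna(subset=['comment'])` before tokenizing is an alternative.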

Note that nltk.word_tokenize is a resource-intensive function, which is why the apply takes minutes on roughly 2 million rows. If you need to parallelize your pandas code, there are dedicated libraries for that, such as Dask. See the question "Make Pandas DataFrame apply() use all cores?".